Chapter 11

Machine Learning API

This chapter describes a new machine learning layer in GATE. The current implementation is mainly intended for three types of NLP learning, namely chunk recognition (e.g. named entity recognition), text classification and relation extraction, which together cover many NLP learning problems. The implementation for chunk recognition is based on our work using support vector machines (SVM) for information extraction [Li et al. 05a]. The text classification is based on our work on opinionated sentence classification and patent document classification (see [Li et al. 07a] and [Li et al. 07b], respectively). The relation extraction is based on our work on named entity relation extraction [Wang et al. 06].

Given a set of documents, the machine learning API can also produce feature files containing the linguistic features, the feature vectors and, if the documents contain any, the labels. It can also produce the so-called document-term matrix and an n-gram based language model. These feature files are in text format and can be used outside GATE, so users can use the features off-line for their own purposes, e.g. evaluating new learning algorithms.
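To make the notion of a document-term matrix concrete, here is a minimal Java sketch that builds one (raw term counts) from already-tokenised documents. It is a hypothetical illustration and does not reproduce the ML API's code or its file format, which Section 11.5 describes. The class name `DocTermMatrix` and the use of raw counts are assumptions.

```java
import java.util.*;

/** Hypothetical sketch: builds a document-term count matrix from tokenised documents. */
class DocTermMatrix {
    final List<String> vocabulary = new ArrayList<>();   // column order = first occurrence
    final int[][] counts;                                // counts[doc][term]

    DocTermMatrix(List<List<String>> docs) {
        Map<String, Integer> index = new LinkedHashMap<>();
        for (List<String> doc : docs)
            for (String term : doc)
                if (!index.containsKey(term)) {
                    index.put(term, index.size());       // next free column
                    vocabulary.add(term);
                }
        counts = new int[docs.size()][index.size()];
        for (int d = 0; d < docs.size(); d++)
            for (String term : docs.get(d))
                counts[d][index.get(term)]++;
    }

    public static void main(String[] args) {
        DocTermMatrix m = new DocTermMatrix(List.of(
                List.of("the", "patent", "claims", "the", "device"),
                List.of("the", "review", "praises", "the", "device")));
        System.out.println(m.vocabulary);
        for (int[] row : m.counts) System.out.println(Arrays.toString(row));
    }
}
```

Each row is one document and each column one term, which is the shape that term-weighting schemes such as tf-idf start from.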

The learning API also provides facilities for active learning based on Support Vector Machines (SVM). It mainly ranks the unlabelled documents according to the confidence scores that the current SVM models assign to them.
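The ranking idea can be sketched in a few lines of Java. The sketch makes two assumptions. First, a document's confidence score is the average absolute SVM margin of its `numExamplesPerDoc` examples closest to the hyperplane (the ACTIVELEARNING setting in Section 11.2.2 says only that examples are averaged). Second, the least confident documents come first, since they are usually the most informative to annotate next. Both assumptions and all names are illustrative, and the ML API's exact scoring may differ.

```java
import java.util.*;

/** Hypothetical sketch of ranking unlabelled documents for active learning. */
class ActiveLearningRanker {

    /** Average |margin| of the n examples closest to the hyperplane (assumed scoring rule). */
    static double confidence(double[] margins, int n) {
        double[] abs = Arrays.stream(margins).map(Math::abs).sorted().toArray();
        int k = Math.min(n, abs.length);
        if (k == 0) return Double.POSITIVE_INFINITY; // no examples: ranked last
        double sum = 0;
        for (int i = 0; i < k; i++) sum += abs[i];
        return sum / k;
    }

    /** Returns document names ordered from least to most confident. */
    static List<String> rank(Map<String, double[]> marginsPerDoc, int numExamplesPerDoc) {
        List<String> docs = new ArrayList<>(marginsPerDoc.keySet());
        docs.sort(Comparator.comparingDouble(
                (String d) -> confidence(marginsPerDoc.get(d), numExamplesPerDoc)));
        return docs;
    }

    public static void main(String[] args) {
        Map<String, double[]> margins = new LinkedHashMap<>();
        margins.put("doc1.xml", new double[]{2.5, -1.8, 3.0, 1.2});
        margins.put("doc2.xml", new double[]{0.1, -0.3, 2.2, -0.2});
        margins.put("doc3.xml", new double[]{-0.9, 1.1, 0.7});
        System.out.println(rank(margins, 3)); // 3 = default numExamplesPerDoc
    }
}
```

Sorting by ascending confidence puts the documents the model is least sure about at the top of the list, which is the usual uncertainty-sampling heuristic.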

The primary learning algorithm implemented is SVM, which has achieved state-of-the-art performance on many NLP learning tasks. SVM training uses the Java version of the SVM package LibSVM [CC001], while the application of the SVM models is our own implementation. Moreover, the ML implementation provides an interface to the open-source machine learning package Weka [Witten & Frank 99], so it can use the learning algorithms implemented in Weka. Three widely used learning algorithms from Weka, namely naive Bayes, KNN and the decision tree algorithm C4.5, are available in the current implementation.

In order to use the machine learning (ML) API, the user mainly has to do three things. First, the user has to annotate some documents with the labels that the learning system should assign to new documents. These label annotations must be GATE annotations. Second, the user may need to pre-process the documents to obtain the linguistic features for learning; these features must also be GATE annotations. GATE's ANNIE plug-in is very helpful for producing the linguistic features, and other plug-ins such as the NP Chunker and parsers may also help. Finally, the user has to create a configuration file for the ML API, e.g. selecting the learning algorithm and defining the linguistic features used for learning. The configuration file need not be written from scratch: the user can copy one of the three example files presented in Section 11.3 and adapt it to the particular problem.

The rest of the chapter is organised as follows. Section 11.1 explains ML in general and its specification in GATE. Section 11.2 describes the configuration settings of the ML API one by one. In particular, it covers all the elements of the configuration file, which set up the ML API (e.g. the learning algorithm to be used and its options) and define the NLP features for the user's particular problem. Section 11.3 presents three example settings, one for each of the three types of NLP learning problem, to illustrate the usage of this ML plug-in. Section 11.4 lists the steps for using the ML API. Finally, Section 11.5 explains the outputs of the ML API for its four usage modes, namely training, application, evaluation and producing feature files only. It describes in particular the format of the feature files and the label list file produced by the ML API.

11.1 ML Generalities

There are two main types of ML: supervised learning and unsupervised learning. Supervised learning is more effective and much more widely used in NLP. Classification is a particular kind of supervised learning in which the set of training examples is split into multiple subsets (classes) and the algorithm attempts to assign new examples to the existing classes. This is the type of ML used in GATE, and all further references to ML in this chapter refer to classification.

An ML algorithm “learns” about a phenomenon by looking at a set of occurrences of that phenomenon that serve as examples. Based on these, it builds a model that can be used to predict characteristics of future (unseen) examples of the phenomenon.

An ML implementation has two modes of functioning: training and application. The training phase consists of building a model (e.g. a statistical model, a decision tree or a rule set) from a dataset of already classified instances. During application, the model built during training is used to classify new instances.

The ML API in GATE is designed specifically for NLP learning. It can be used for three types of NLP learning, namely text classification, chunk recognition and relation extraction, which together cover many NLP learning tasks.

- Text classification assigns text to pre-defined categories. The text can be at different levels, such as document, sentence or token. Typical examples of text classification are document classification, opinionated sentence recognition, POS tagging of tokens, and word sense disambiguation.

- Chunk recognition often consists of two steps. First, it identifies the chunks of interest in the text; it then assigns label(s) to the extracted chunks. In some cases only the first step is needed. Examples of chunk recognition include named entity recognition (and information extraction in general), NP chunking, and Chinese word segmentation.

- Relation extraction determines whether or not a pair of terms in a text stands in some type(s) of pre-defined relation. Two examples are named entity relation extraction and coreference resolution.

From the ML point of view, the three types of NLP learning typically use different linguistic features and feature representations. For example, for text classification the tf-idf representation of the n-grams in the text is known to be very effective with learning algorithms such as SVM. For chunk recognition, it is effective and efficient to identify the start token and the end token of each chunk from the linguistic features of the token itself and of the surrounding tokens. Relation extraction needs to consider both the linguistic features of each of the two terms involved in the relation and features that combine the two terms.
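The following minimal Java sketch computes a tf-idf representation over the unigrams and bigrams of each document. It uses the common weighting tf × log(N / df), where tf is the raw count of an n-gram in a document, N the number of documents and df the number of documents containing the n-gram. This is one standard variant, assumed here for illustration, and not necessarily the exact weighting the ML API uses. The class name is hypothetical.

```java
import java.util.*;

/** Hypothetical sketch: tf-idf weights for the unigrams and bigrams of each document. */
class NgramTfIdf {

    /** Unigrams plus bigrams (joined with "_") of a token sequence. */
    static List<String> ngrams(List<String> tokens) {
        List<String> grams = new ArrayList<>(tokens);
        for (int i = 0; i + 1 < tokens.size(); i++)
            grams.add(tokens.get(i) + "_" + tokens.get(i + 1));
        return grams;
    }

    /** tf(t, d) * log(N / df(t)), with tf as the raw count of t in d. */
    static List<Map<String, Double>> tfIdf(List<List<String>> docs) {
        List<Map<String, Integer>> tfs = new ArrayList<>();
        Map<String, Integer> df = new HashMap<>();
        for (List<String> doc : docs) {
            Map<String, Integer> tf = new HashMap<>();
            for (String g : ngrams(doc)) tf.merge(g, 1, Integer::sum);
            for (String g : tf.keySet()) df.merge(g, 1, Integer::sum); // once per document
            tfs.add(tf);
        }
        int n = docs.size();
        List<Map<String, Double>> vectors = new ArrayList<>();
        for (Map<String, Integer> tf : tfs) {
            Map<String, Double> v = new TreeMap<>();
            tf.forEach((g, c) -> v.put(g, c * Math.log((double) n / df.get(g))));
            vectors.add(v);
        }
        return vectors;
    }

    public static void main(String[] args) {
        List<List<String>> docs = List.of(
                List.of("very", "good", "phone"),
                List.of("very", "bad", "phone"));
        // N-grams occurring in every document ("very", "phone") get weight 0.
        System.out.println(tfIdf(docs));
    }
}
```

The idf factor discounts n-grams that appear everywhere, so the distinguishing n-grams ("good", "bad", "very_good", ...) carry the weight.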

The ML API implements suitable feature representations for the three types of NLP learning. It also provides Java code or wrappers for widely used ML algorithms, including SVM, KNN, naive Bayes and the decision tree algorithm C4.5. In addition, given some documents, it can produce the NLP features and the feature vectors and store them in files. Users can then use those features to evaluate their own learning algorithms on an NLP learning task, or for any other further processing.

The rest of this section explains some basic definitions in ML and their specification in this GATE plug-in.

11.1.1 Some definitions

- instance: an example of the studied phenomenon. An ML algorithm learns a model from a set of known instances, called a (training) dataset. It can then apply the learned model to another (application) dataset.

- attribute: a characteristic of the instances. Each instance is defined by the values of its attributes. The set of possible attributes is well defined and is the same for all instances in the training and application datasets.

- class: an attribute for which the values are available in the training dataset for learning and need to be found in the application dataset through the ML mechanism.

11.1.2 GATE-specific interpretation of the above definitions

- instance: an annotation. In order to use ML in GATE, users need to choose the type of annotation used as instances. Token annotations are a good candidate for many NLP learning tasks such as information extraction and POS tagging, but any type of annotation can be used. Examples are annotations found by a previously run JAPE grammar, or the sentence and document annotations for sentence and document classification, respectively.

- attribute: the value of a named feature of a particular annotation type. The annotation may (partially) cover the instance annotation under consideration, or another instance annotation related to it. The attribute value can therefore refer to the current instance, to an instance at a specified position relative to the current instance, or to an instance having a special relation with the current instance.

- class: any attribute referring to the current instance can be marked as class attribute.
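To make the GATE-specific reading concrete, the sketch below models the instances of a document as a sequence of token-like records. It reads an attribute (the value of a named feature) either from the current instance or from the instance at a relative position, which is what the POSITION setting in Section 11.2.2 refers to. It is a plain-Java illustration built on its own hypothetical `Token` record. It does not use the GATE annotation API.

```java
import java.util.*;

/** Hypothetical sketch: reading attribute values at positions relative to the current instance. */
class RelativeAttributes {
    /** Stand-in for an instance annotation with named features (not GATE's Annotation). */
    record Token(Map<String, String> features) {}

    /** Value of `feature` on the instance `position` steps away, or null outside the document. */
    static String attribute(List<Token> instances, int current, int position, String feature) {
        int i = current + position;
        if (i < 0 || i >= instances.size()) return null;
        return instances.get(i).features().get(feature);
    }

    public static void main(String[] args) {
        List<Token> tokens = List.of(
                new Token(Map.of("string", "Mr", "category", "NNP")),
                new Token(Map.of("string", "Smith", "category", "NNP")),
                new Token(Map.of("string", "said", "category", "VBD")));
        int current = 1; // the instance "Smith"
        for (int p = -1; p <= 1; p++)
            System.out.println("position " + p + ": " + attribute(tokens, current, p, "string"));
    }
}
```

A class attribute would be read the same way at position 0, from the feature holding the label.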

11.2 The Batch Learning PR in GATE

Access to ML implementations is provided in GATE by the “Batch Learning PR”, which handles four usage modes: training and application of an ML model, evaluation of learning on GATE documents1, and producing the feature files only. This PR is a Language Analyser, so it can be used in all default types of GATE controllers.

In order to allow for more flexibility, all the configuration parameters for the PR are set through one external XML file. The exceptions are a few settings, such as the learning mode, which are set through the normal PR parameterisation (see Section 11.2.1). The XML file contains both the configuration parameters of the ML API itself and the linguistic data (namely the definitions of the instance and attributes) used by the ML API. The XML file must be specified when loading the ML API plug-in into GATE.

The parent directory of the XML configuration file is called the working directory. A subdirectory of the working directory named “savedFiles” is created if it does not already exist when the ML API is loaded. All the files produced by the ML API, including the NLP feature files, the label list file, the feature vector file and the learned model file, are stored in that subdirectory. The log file recording the information of a learning session is also stored there.
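The directory convention can be expressed with `java.nio.file`. The working directory is the parent of the configuration file, and `savedFiles` is created inside it when missing. This is a minimal sketch of the convention described above, not the plug-in's own code. The helper name and the configuration file name are hypothetical. The model file name `learnedModels.save` is taken from the description of TRAINING mode in Section 11.2.1.

```java
import java.io.IOException;
import java.nio.file.*;

/** Hypothetical sketch of the working-directory convention. */
class WorkingDirectory {

    /** Returns <parent of config file>/savedFiles, creating it if it does not exist. */
    static Path savedFilesDir(Path configFile) throws IOException {
        Path workingDir = configFile.toAbsolutePath().getParent();
        return Files.createDirectories(workingDir.resolve("savedFiles")); // no-op if present
    }

    public static void main(String[] args) throws IOException {
        Path tmp = Files.createTempDirectory("ml-demo");
        Path config = tmp.resolve("ml-config.xml"); // hypothetical file name
        Path saved = savedFilesDir(config);
        System.out.println("working directory: " + config.toAbsolutePath().getParent());
        System.out.println("models file:       " + saved.resolve("learnedModels.save"));
    }
}
```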

In the following we first describe the few settings that are parameters of the ML API plug-in, and then the settings specified in the configuration file.

11.2.1 The settings not specified in the configuration file

For convenience, a few settings are not specified in the configuration file. Instead, the user specifies them as loading or run-time parameters of the ML API plug-in, as with many other PRs.

- URL (or path and name) of the configuration file. The user must give the URL of the configuration file when loading the ML API into the GATE GUI. The configuration file should be in XML format with the extension .xml. It contains most of the learning settings, which are explained in detail in the next subsection.

- Corpus. A run-time parameter, which the user must specify before running the ML API in a session. The corpus contains the documents used as learning or application data. The documents should include all the annotations specified in the configuration file, except the annotations for the class attribute. The class annotations must be available in the documents used for training or evaluation, but need not be present in the documents to which the learned model is applied.

- inputASName is the annotation set containing the annotations for the linguistic features used and the class labels.

- outputASName is the annotation set into which the annotations produced by applying the models are put. Note that it should be the same as inputASName when doing evaluation (i.e. when learningMode is set to “EVALUATION”).

- learningMode. A run-time Enum parameter, which can be set to one of the following values: “TRAINING”, “APPLICATION”, “EVALUATION”, “ProduceFeatureFilesOnly”, “MITRAINING”, “VIEWPRIMALFORMMODELS” and “RankingDocsForAL”. The first four values correspond to the common learning modes of the ML API; the others are for specific purposes only. The default learning mode is “TRAINING”.

- In TRAINING mode, the ML API learns from the data provided and saves the models into a file called “learnedModels.save” under the sub-directory “savedFiles” of the working directory.

- If the user wants to apply the learned model to the data, the APPLICATION mode should be selected. In this mode, the ML API reads the learned model from the file “learnedModels.save” in the subdirectory “savedFiles” and then applies the model to the data.

- In EVALUATION mode, the ML API performs k-fold cross-validation or hold-out test evaluation on the corpus provided (the evaluation method is specified in the configuration file, see below). It outputs the evaluation results to the Messages window of the GATE GUI and to the log file. When using “EVALUATION” mode, make sure that outputASName is set to the same annotation set as inputASName.

- If the user only wants the ML API to produce the NLP feature data and the feature vector data, without training or applying a model, the ProduceFeatureFilesOnly mode should be selected. The feature files that the ML API produces are explained in detail in Subsection 11.5.4. Otherwise (i.e. if the whole learning procedure is wanted), select one of the other learning modes.

- MITRAINING mode is a special training mode. In this mode, the training data obtained in the session are appended to the end of the feature file. In contrast, in TRAINING mode the training data obtained in the session replace any previous data in the feature file. Consequently, MITRAINING mode trains on both the data obtained in the current session and the data that were already in the feature file before the session started. Hence TRAINING mode is for batch learning, while MITRAINING mode can be used for on-line (adaptive, or mixed-initiative) learning. One parameter of MITRAINING mode, defined in the configuration file, specifies the minimum number of newly added documents needed before the learning procedure is started to update the learned model.

- VIEWPRIMALFORMMODELS mode displays the most salient NLP features in the learned models. In the current implementation the mode is only valid for linear SVM models, in which the most salient NLP features correspond to the weights with the largest absolute values in the weight vector. Two parameters in the configuration file determine how many NLP features are displayed for positive and for negative weights, respectively. Note that if, e.g., the number for negative weights is set to 0, no NLP feature is displayed for negative weights.

- RankingDocsForAL applies the current learned SVM models (stored in the subdirectory “savedFiles”) to the feature vectors stored in the file fvsDataSelecting.save in “savedFiles”. It then ranks the documents in that data file according to the margins, with respect to the SVM models, of the examples in each document. The ranked list of documents is written to the file ALRankedDocs.save.
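The difference between TRAINING and MITRAINING, together with the MI-TRAINING-INTERVAL trigger described in Section 11.2.2, can be sketched as follows. An in-memory `List` stands in for the feature file. The counter logic, including the assumption that the counter resets after each retraining, is illustrative only. All names are hypothetical.

```java
import java.util.*;

/** Hypothetical sketch of TRAINING vs MITRAINING handling of the feature data. */
class MiTrainingBuffer {
    private final List<String> featureFile = new ArrayList<>(); // stands in for the feature file
    private final int interval;      // MI-TRAINING-INTERVAL num
    private int newDocsSinceTraining = 0;
    int trainingRuns = 0;

    MiTrainingBuffer(int interval) { this.interval = interval; }

    /** TRAINING mode: this session's data replace whatever was stored before. */
    void train(List<String> sessionData) {
        featureFile.clear();
        featureFile.addAll(sessionData);
        retrain();
    }

    /** MITRAINING mode: append, and retrain only once enough new documents have arrived. */
    void miTrain(List<String> newDocs) {
        featureFile.addAll(newDocs);
        newDocsSinceTraining += newDocs.size();
        if (newDocsSinceTraining >= interval) retrain();
    }

    private void retrain() {
        trainingRuns++;            // a real system would learn a model from featureFile here
        newDocsSinceTraining = 0;  // assumed: counter restarts after each update
    }

    int storedDocs() { return featureFile.size(); }

    public static void main(String[] args) {
        MiTrainingBuffer b = new MiTrainingBuffer(3);
        b.train(List.of("d1", "d2"));
        b.miTrain(List.of("d3"));
        b.miTrain(List.of("d4", "d5"));   // 3 new documents: triggers retraining
        System.out.println(b.storedDocs() + " docs stored, " + b.trainingRuns + " training runs");
    }
}
```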

Please note that if the ML API is added to a GATE corpus pipeline, it does not process the documents in the corpus until the last document goes through the pipeline, except in APPLICATION mode2. When the last document in the corpus goes through the pipeline, the ML PR processes all the documents in the corpus. This batch processing is necessary for batch learning algorithms such as SVM. It also makes applying a learned model much faster than the normal behaviour of a PR in a corpus pipeline, in which every PR in the pipeline processes one document before the next document is processed. An important consequence of the batch implementation is that post-processing PRs cannot be placed directly after the ML PR in the same corpus pipeline. Instead, the ML PR should be the last PR in the corpus pipeline, and all the post-processing PRs should be put into another GATE application, which post-processes the documents processed by the ML PR. This limitation can, however, be alleviated in APPLICATION mode (see the footnote above for details).
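The batch behaviour can be pictured as a processor that buffers documents and only works on them once the last document of the corpus arrives. In APPLICATION mode it also works on them every `BATCH-APP-INTERVAL` documents (Section 11.2.2). This is a hypothetical plain-Java sketch of that control flow, in which flushing the remainder at the last document is assumed. It is not the GATE `LanguageAnalyser` API, and the class and method names are illustrative.

```java
import java.util.*;
import java.util.function.Consumer;

/** Hypothetical sketch of batch processing inside a corpus pipeline. */
class BatchingProcessor {
    private final List<String> buffer = new ArrayList<>();
    private final boolean applicationMode;
    private final int batchAppInterval;               // BATCH-APP-INTERVAL num
    private final Consumer<List<String>> batchAction; // train on / apply to a batch

    BatchingProcessor(boolean applicationMode, int batchAppInterval,
                      Consumer<List<String>> batchAction) {
        this.applicationMode = applicationMode;
        this.batchAppInterval = batchAppInterval;
        this.batchAction = batchAction;
    }

    /** Called once per document; isLast is true for the final document of the corpus. */
    void execute(String document, boolean isLast) {
        buffer.add(document);
        boolean intervalReached = applicationMode && buffer.size() >= batchAppInterval;
        if (isLast || intervalReached) {
            batchAction.accept(new ArrayList<>(buffer));
            buffer.clear();
        }
    }

    public static void main(String[] args) {
        List<String> corpus = List.of("d1", "d2", "d3", "d4", "d5");
        BatchingProcessor training = new BatchingProcessor(false, 2,
                batch -> System.out.println("train on " + batch));
        BatchingProcessor application = new BatchingProcessor(true, 2,
                batch -> System.out.println("apply to " + batch));
        for (int i = 0; i < corpus.size(); i++) {
            boolean last = i == corpus.size() - 1;
            training.execute(corpus.get(i), last);
            application.execute(corpus.get(i), last);
        }
    }
}
```

Because the training processor emits nothing until the last document, any PR placed after it in the same pipeline would see documents that have not been processed yet, which is why post-processing belongs in a separate application.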

11.2.2 All the settings in the XML configuration file

The root element of the XML configuration file must be called “ML-CONFIG”. It must contain two basic elements, “DATASET” and “ENGINE”, and may contain further optional settings. In the following we first describe the optional settings, then the “ENGINE” element, and finally the “DATASET” element. The next section gives some examples of the XML configuration file for illustration.

The optional settings in the configuration file

The ML API provides a variety of optional settings for different tasks. Every optional setting has a default value: if an optional setting is not specified in the configuration file, the ML API uses its default value. Each of the following optional settings can be set as an element in the XML configuration file.
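Reading an optional setting with a fallback to its default takes only a few lines with the JDK's DOM parser. The sketch below first checks that the root element is ML-CONFIG and that the required DATASET and ENGINE elements are present. It then reads optional settings, falling back to the default values given in the list that follows. It is an illustration under those assumptions, not the ML API's own configuration reader. The helper names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical sketch: reading ML-CONFIG optional settings with fallback defaults. */
class ConfigDefaults {

    /** Attribute `attr` of the first `tag` element, or `dflt` if the element or attribute is absent. */
    static String optional(Document doc, String tag, String attr, String dflt) {
        NodeList nodes = doc.getElementsByTagName(tag);
        if (nodes.getLength() == 0) return dflt;
        String value = ((Element) nodes.item(0)).getAttribute(attr);
        return value.isEmpty() ? dflt : value;  // getAttribute returns "" when missing
    }

    /** Parses the XML and checks the root element and the two required elements. */
    static Document parse(String xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        Element root = doc.getDocumentElement();
        if (!root.getTagName().equals("ML-CONFIG"))
            throw new IllegalArgumentException("root element must be ML-CONFIG");
        for (String required : new String[] {"DATASET", "ENGINE"})
            if (root.getElementsByTagName(required).getLength() == 0)
                throw new IllegalArgumentException("missing " + required);
        return doc;
    }

    public static void main(String[] args) throws Exception {
        Document doc = parse("<ML-CONFIG><SURROUND VALUE=\"true\"/>"
                + "<EVALUATION method=\"kfold\" runs=\"10\"/>"
                + "<DATASET/><ENGINE nickname=\"SVM\"/></ML-CONFIG>");
        System.out.println("surround = " + optional(doc, "SURROUND", "VALUE", "false"));
        System.out.println("method   = " + optional(doc, "EVALUATION", "method", "holdout"));
        System.out.println("ratio    = " + optional(doc, "EVALUATION", "ratio", "0.66"));
        System.out.println("filter   = " + optional(doc, "FILTERING", "ratio", "0.0"));
    }
}
```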

- Surround mode. Set its value to “true” if the ML API should learn the start token and the end token of each chunk. For chunk learning tasks such as named entity recognition, this often gives better performance than learning every token of the chunk. For classification problems and relation extraction, set its value to “false”. The corresponding element in the configuration file is

<SURROUND VALUE="X"/>

where X is either “true” or “false”. The default value is “false”.

- FILTERING. In some applications the user may want to filter out some training examples from the original training data before running the learning algorithm. The filtering option allows the user to remove some examples without a class label (called negative examples) from the training set. The negative examples are selected according to their distances to the SVM classification hyperplane, which is learned from the original training data to separate the positive examples from the negative ones. If the item dis is set to “near”, the ML API selects and removes the negative examples that are closest to the SVM hyperplane. If it is set to “far”, the negative examples that are furthest from the hyperplane are removed. The value of the item ratio determines the proportion of negative examples to be filtered out. The element in the configuration file is

<FILTERING ratio="X" dis="Y"/>

where X is a number between 0 and 1 and Y is “near” or “far”. If the FILTERING element is absent from the configuration file, or ratio is set to 0.0, the ML API does no filtering. The default value of ratio is 0.0 and the default value of dis is “far”.

- Evaluation setting. As described above, if the learningMode parameter is set to “EVALUATION”, the ML API performs an evaluation on the corpus. It splits the documents in the corpus into two parts, a training dataset and a testing dataset, and learns a model from the training dataset. It then applies the model to the testing dataset and compares the annotations assigned by the model with the true annotations of the testing data. Finally, it outputs evaluation results such as the overall F-measures. The evaluation setting element specifies how the corpus is split. The item method determines the evaluation method. Two commonly used methods are currently implemented, namely k-fold cross-validation and the hold-out test3. The value of the item runs specifies the number k for k-fold cross-validation, or the number of runs for the hold-out test. The value of the item ratio specifies the proportion of the data used for training in the hold-out test method. The element in the configuration file is

<EVALUATION method="X" runs="Y" ratio="Z"/>

where X is either “kfold” or “holdout”, Y is a positive integer, and Z is a floating-point number between 0 and 1. The default value of method is “holdout”, the default value of runs is “1”, and the default value of ratio is “0.66”.

- multiClassification2Binary. Many NLP learning problems can be transformed into a multi-class classification problem, whereas some learning algorithms, such as SVM, are often used as binary classifiers. The ML API implements two common methods for converting a multi-class problem into several binary problems, namely one against others and one against another one4. The user selects one of the two methods through the item method of the element, which is

<multiClassification2Binary method="X" thread-pool-size="N"/>

where X is either “one-vs-others” or “one-vs-another”. The default is the one-vs-others method: if the configuration file does not contain the element, or the item method is missing, the ML API uses one-vs-others. Since the derived binary classifiers are independent, several of them can be learned in parallel. The thread-pool-size attribute gives the number of threads used to learn and apply the binary classifiers. If it is omitted, a single thread processes all the classifiers in sequence.

- Parameter thresholdProbabilityBoundary sets the threshold on the probability of a start (or end) token for chunk learning. It is used in post-processing the learning results: only boundary tokens whose confidence level is above the threshold are selected as candidates for entities. The element in the configuration file is

<PARAMETER name="thresholdProbabilityBoundary" value="X"/>

where X is between 0 and 1. The default value is 0.4.

- Parameter thresholdProbabilityEntity sets the threshold on the probability of a chunk (the product of the probabilities of its start token and end token) for chunk learning. Only entities whose confidence level is above the threshold are selected as candidates for further post-processing. The element in the configuration file is

<PARAMETER name="thresholdProbabilityEntity" value="X"/>

where X is between 0 and 1. The default value is 0.2.

- The threshold parameter thresholdProbabilityClassification is used for classification tasks (e.g. text classification and relation extraction), whereas the two thresholds above are for chunk recognition. The corresponding element in the configuration file is

<PARAMETER name="thresholdProbabilityClassification" value="X"/>

where X is between 0 and 1. The default value is 0.5.

- IS-LABEL-UPDATABLE is a Boolean parameter. If its value is “true”, the label list is updated from the labels in the training data. Otherwise, a pre-defined label list is used and cannot be updated from the training data. The configuration element is

<IS-LABEL-UPDATABLE value="X"/>

where X is “true” or “false”. The default value is “true”.

- IS-NLPFEATURELIST-UPDATABLE is a Boolean parameter. If its value is “true”, the NLP feature list is updated from the features in the training or application data. Otherwise, a pre-defined NLP feature list is used and cannot be updated. The configuration element is

<IS-NLPFEATURELIST-UPDATABLE value="X"/>

where X is “true” or “false”. The default value is “true”.

- The parameter VERBOSITY specifies the maximum verbosity level of the system output, both to the Messages window of the GATE GUI and to the log file. There are currently three verbosity levels. Level 0 outputs only warning messages. Level 1 outputs some important setting information and the results of evaluation mode. Level 2 is for debugging. The element in the configuration file is

<VERBOSITY level="X"/>

where X is 0, 1 or 2. The default value is 1.

- Option MI-TRAINING-INTERVAL specifies the minimum number of newly added documents needed to trigger learning in the MITRAINING mode of the ML API. The number is given by the value of the item num, as follows:

<MI-TRAINING-INTERVAL num="X"/>

The default value of X is 1.

- Option BATCH-APP-INTERVAL is used in APPLICATION mode and specifies the number of documents in the corpus processed in each batch application. See Section 11.2.1 for a detailed explanation of the option. The corresponding element in the configuration file is

<BATCH-APP-INTERVAL num="X"/>

The default value of X is 1.

- Option DISPLAY-NLPFEATURES-LINEARSVM specifies how many NLP features to display for the positive and for the negative weights of a linear model, respectively. It is used in VIEWPRIMALFORMMODELS mode (see Section 11.2.1 for details of this mode). It has the following form in the configuration file:

<DISPLAY-NLPFEATURES-LINEARSVM numP="X" numN="Y"/>

where X and Y are the numbers for the positive and negative weights, respectively. The default values of X and Y are 10 and 0, respectively.

- Option ACTIVELEARNING specifies the settings for active learning. It has the following form:

<ACTIVELEARNING numExamplesPerDoc="X"/>

where X is the number of examples in a document used to obtain the confidence score of the document (by averaging) with respect to the learned model. The default value of numExamplesPerDoc is 3.
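The two chunk-learning thresholds work together. A boundary token must pass thresholdProbabilityBoundary, and a candidate chunk formed by a start token and an end token must pass thresholdProbabilityEntity, where the chunk probability is the product of the two boundary probabilities. The sketch below applies that rule with the default values 0.4 and 0.2. Two parts are assumptions. Each start is paired with every end at or after it within a maximum chunk length. Later post-processing, such as resolving overlapping candidates, is omitted. All names are hypothetical.

```java
import java.util.*;

/** Hypothetical sketch of threshold-based chunk candidate selection. */
class ChunkCandidates {
    record Chunk(int start, int end, double probability) {}

    static List<Chunk> candidates(double[] pStart, double[] pEnd, int maxLength,
                                  double boundaryThreshold, double entityThreshold) {
        List<Chunk> result = new ArrayList<>();
        for (int s = 0; s < pStart.length; s++) {
            if (pStart[s] <= boundaryThreshold) continue;        // not a start candidate
            for (int e = s; e < Math.min(pEnd.length, s + maxLength); e++) {
                if (pEnd[e] <= boundaryThreshold) continue;      // not an end candidate
                double p = pStart[s] * pEnd[e];                  // chunk probability
                if (p > entityThreshold) result.add(new Chunk(s, e, p));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Per-token probabilities of being a chunk start / end, e.g. from two binary classifiers.
        double[] pStart = {0.9, 0.1, 0.5, 0.05};
        double[] pEnd   = {0.2, 0.8, 0.45, 0.3};
        // Defaults: thresholdProbabilityBoundary = 0.4, thresholdProbabilityEntity = 0.2
        for (Chunk c : candidates(pStart, pEnd, 5, 0.4, 0.2))
            System.out.println(c);
    }
}
```

Raising thresholdProbabilityEntity trades recall for precision. In the example, the weakest candidate (tokens 2 to 2, probability 0.225) disappears once the threshold exceeds 0.225.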

The ENGINE element

The ENGINE element specifies which particular ML algorithm will be used and also allows the setting of options for that algorithm.

The ENGINE element in the configuration file has the form

<ENGINE nickname="X" implementationName="Y" options="Z"/>

It has three items:

- nickname can be the usual name of the learning algorithm or any name the user chooses.

- implementationName refers to the implementation of the learning algorithm that the user wants to use. Its value must match exactly the name defined in the ML API for that algorithm. Currently it can be one of the following values.

- SVMLibSvmJava, the binary classification SVM algorithm implemented in the Java version of the SVM package LibSVM.

- NaiveBayesWeka, the Naive Bayes learning algorithm implemented in Weka.

- KNNWeka, the K nearest neighbour (KNN) algorithm implemented in Weka.

- C4.5Weka, the decision tree algorithm C4.5 implemented in Weka.

- Options: the value of this item depends on the particular learning algorithm and is passed verbatim to the ML engine used. If an option is missing from the specification, or no options are given in the configuration file at all, the default settings of the learning algorithm are used.

- The options for SVMLibSvmJava are similar to those of LibSVM, with some specifics described below. Moreover, SVMLibSvmJava implements the uneven margins SVM algorithm (see [Li & Shawe-Taylor 03]), so it also has the uneven margins parameter as an option. The LibSVM options for SVMLibSvmJava and some other important settings are as follows.

- -s svm_type, the type of SVM (default 0). Since only the binary classification SVM is implemented, always set it to 0 (or omit it and use the default).

- -t kernel_type, 0 for a linear kernel and 1 for a polynomial kernel. The default value is 0. The current implementation does not support other kernel types such as the radial basis function kernel and the sigmoid kernel.

- -d degree, the degree in polynomial kernel, e.g. 2 for quadratic kernel. Default value is 3.

- -c cost, the cost parameter C in the SVM. Default value is 1.

- -m cachesize, set the cache memory size in MB (default 100).

- -tau value, sets the uneven margins parameter τ of the SVM; τ = 1 corresponds to the standard SVM. The training data may contain only a small number of positive examples and a large number of negative examples, which occurs in many NLP learning problems, particularly when only a few documents are used for training. In that case, setting τ to a value less than 1 (e.g. τ = 0.4) often gives a better F-measure than the standard SVM (see [Li & Shawe-Taylor 03]).

- The KNN algorithm has one option, the number of neighbours used. It is set via “-k X”. The default value is 1.

- There are currently no options for the Naive Bayes and C4.5 algorithms.

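The options string is passed through to the engine, and any option left out falls back to a default. It can therefore be handy to see the effective settings. The sketch below merges an options string such as "-t 1 -d 2 -tau 0.4" over the SVMLibSvmJava defaults listed above (-s 0, -t 0, -d 3, -c 1, -m 100). Three points are assumptions. The parsing rule is that each flag is followed by exactly one value. -tau gets no default because none is stated above. The class is hypothetical and not part of the ML API.

```java
import java.util.*;

/** Hypothetical sketch: effective SVMLibSvmJava options = documented defaults + user overrides. */
class EngineOptions {
    static final Map<String, String> SVM_DEFAULTS = Map.of(
            "-s", "0",    // svm_type: binary classification only
            "-t", "0",    // kernel_type: 0 linear, 1 polynomial
            "-d", "3",    // polynomial degree
            "-c", "1",    // cost C
            "-m", "100"); // cache size in MB

    /** Parses "-flag value" pairs and overlays them on the defaults. */
    static Map<String, String> effective(String options) {
        Map<String, String> result = new TreeMap<>(SVM_DEFAULTS);
        String trimmed = options.trim();
        String[] parts = trimmed.isEmpty() ? new String[0] : trimmed.split("\\s+");
        if (parts.length % 2 != 0)
            throw new IllegalArgumentException("each option needs a value: " + options);
        for (int i = 0; i < parts.length; i += 2) {
            if (!parts[i].startsWith("-"))
                throw new IllegalArgumentException("expected a flag, got " + parts[i]);
            result.put(parts[i], parts[i + 1]);
        }
        return result;
    }

    public static void main(String[] args) {
        // Quadratic kernel with uneven margins, e.g. for data with few positive examples.
        System.out.println(effective("-t 1 -d 2 -tau 0.4"));
    }
}
```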

The DATASET element

The DATASET element defines the type of annotation to be used as instance and the set of attributes that characterise all the instances.

An “INSTANCE-TYPE” sub-element is used to select the annotation type to be used for instances, and the attributes are defined by a sequence of attribute elements.

For example, if an “INSTANCE-TYPE” has a “Token” as its value, there will be one instance in the document per “Token”. This also means that the positions (see below) are defined in relation to Tokens. The “INSTANCE-TYPE” can be seen as the basic unit to be taken into account for machine learning.

Different NLP learning tasks may use different instance types and different kinds of attribute elements. Chunk recognition often uses the token as the instance type, and the linguistic features of “Token” and other annotations as features. For text classification, the instance type is the text unit to be classified, e.g. the whole document, a sentence or a token. When classifying a sequence of tokens, the n-gram representation of the tokens is often a good feature representation for many statistical learning algorithms. For relation extraction, the instance type is a pair of terms, and the features come not only from the linguistic features of each of the two terms but also from features related to both terms.

A DATASET element should define an instance type sub-element, an “ATTRIBUTE” or “ATTRIBUTE_REL” sub-element as the class, and some sub-elements for the linguistic features. All the annotation types involved in the dataset definition should be in the same annotation set. Each sub-element defining the linguistic features or the class label is associated with one or more annotation types. For each annotation type used, one of its annotation features must be specified; the values of that feature are the linguistic features used as input to the learning algorithm, or the class labels for learning5. Note that any blank spaces in the values of the annotation features are replaced by the character “_”. It is therefore advisable that the values of the annotation features used, in particular for the class label, contain no blank spaces.

In the following we explain all the sub-elements one by one. Please also refer to the example configuration files in the next section for examples of dataset definitions. Note that each sub-element that requires a name must have a unique name (different from the names of all other sub-elements), unless explicitly stated otherwise.
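For relation extraction, the feature set of a term pair combines features of each argument with features of the pair as a whole. The sketch below builds such a set from two hypothetical argument records. The feature-naming scheme (arg1_, arg2_ and pair_ prefixes) and the two example pair features (type combination and token distance) are assumptions for illustration. They are not the ML API's own encoding.

```java
import java.util.*;

/** Hypothetical sketch: features for a relation instance formed by a pair of terms. */
class RelationFeatures {
    record Term(String string, String type, int tokenIndex) {}

    static List<String> features(Term arg1, Term arg2) {
        List<String> f = new ArrayList<>();
        f.add("arg1_type=" + arg1.type());           // features of the first term
        f.add("arg1_string=" + arg1.string());
        f.add("arg2_type=" + arg2.type());           // features of the second term
        f.add("arg2_string=" + arg2.string());
        f.add("pair_types=" + arg1.type() + "_" + arg2.type());  // combined from both terms
        f.add("pair_distance=" + Math.abs(arg2.tokenIndex() - arg1.tokenIndex()));
        return f;
    }

    public static void main(String[] args) {
        Term person = new Term("Smith", "Person", 0);
        Term org = new Term("Acme", "Organization", 4);
        features(person, org).forEach(System.out::println);
    }
}
```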

- The instance type sub-element is defined as <INSTANCE-TYPE>X</INSTANCE-TYPE>, where X is the annotation type used as the instance unit for learning. For relation extraction, the user also has to specify the two arguments of the relation, as

<INSTANCE-ARG1>A</INSTANCE-ARG1>

<INSTANCE-ARG2>B</INSTANCE-ARG2>

where A and B are two features of the instance type. Their values should identify the first and second terms of the relation, respectively.

- An ATTRIBUTE element has the following sub-elements:

- NAME, the name of the attribute. Its value should not end with “gram” (see below for the name of the NGRAM feature).

- SEMTYPE, the type of the attribute value, either “NOMINAL” or “NUMERIC”. Currently only “NOMINAL” is implemented.

- TYPE, the annotation type used to extract the attribute.

- FEATURE, the annotation feature whose value, on the annotation of the specified type, is used as the value of the attribute.

- POSITION, the position, relative to the current instance annotation, of the instance annotation from which the feature defined in this element is extracted. The default value is 0.

- <CLASS/>, an empty element used to mark the class attribute. Only one attribute in a dataset definition can be marked as the class.

Note that, in the current implementation, the component value in the feature vector for an attribute at position p is 1 if p = 0, and 1.0/|p| otherwise.

- An ATTRIBUTELIST element is similar to ATTRIBUTE, except that it has a RANGE sub-element instead of POSITION. It is converted into several ATTRIBUTEs, with positions ranging from the value of the RANGE attribute “from” to the value of its attribute “to”. In effect it defines a window of several consecutive instances, which we call the context window. ATTRIBUTELIST should be used when defining a context window for features, because it both avoids duplicating ATTRIBUTE elements and speeds up processing (see the discussion of the WINDOWSIZE element below).

- A WINDOWSIZE element specifies the size of the context window and overrides the context window size defined in every ATTRIBUTELIST. If the element is not present in the configuration file, the window size defined in each ATTRIBUTELIST is used for that feature. Otherwise, the window size specified by this element is used for every ATTRIBUTELIST that contains an ATTRIBUTE at position 0 (any other ATTRIBUTELIST is ignored). This element can be used to speed up the extraction from documents of the feature vectors specified by ATTRIBUTELIST elements. It has two attributes, specifying the lengths of the left and right sides of the context window, respectively, in the following form:

<WINDOWSIZE windowSizeLeft="X" windowSizeRight="Y"/>

where X and Y are the lengths of the left and right sides of the context window, respectively. For example, if X = 2 and Y = 1, the context window runs from position -2 to position 1 (i.e. from the second token on the left, through the current token, to the first token on the right).

- An NGRAM feature is used to characterise an annotation that consists of more than one instance annotation, e.g. a sentence consisting of many tokens. It has the following sub-elements:

- NAME, the name of the n-gram. Its value should end with “gram” to mark it as an n-gram feature.

- NUMBER, the “n” of the n-gram, with value 1 for uni-gram, 2 for bi-gram, etc.

- CONSNUM, the number of features for each term of the n-gram. Given a value k of CONSNUM, the NGRAM element should have k CONS-X sub-elements, where X = 1, ..., k. Each CONS-X element has one TYPE sub-element and one FEATURE sub-element, which together define one feature of a term in the n-gram.

- The WEIGHT sub-element specifies a weight for the n-gram feature. The n-gram part of the feature vector of an instance is normalised. Users who want to adjust the contribution of the n-gram to the whole feature vector can do so by setting this WEIGHT parameter, which multiplies every component of the n-gram part of the feature vector. The default value is 1.0.

- The ValueTypeNgram element specifies the type of value used in the n-gram. Currently it can take one of three types, binary, tf and tf-idf, which are explained in Section 11.5.4. The value is specified by X in

<ValueTypeNgram>X</ValueTypeNgram>

where X = 1 for binary, 2 for tf and 3 for tf-idf. The default value is 3.

- The FEATURES-ARG1 element defines the features related to the first argument of the relation for relation learning. It should include one ARG sub-element referring to the GATE annotation of the argument (see below for a detailed explanation). It may include other sub-elements, such as ATTRIBUTE, ATTRIBUTELIST and/or NGRAM, to define the linguistic features related to the argument.

- The FEATURES-ARG2 element defines the features related to the second argument of the relation. Like FEATURES-ARG1, it should include one ARG sub-element, and it may include other sub-elements for linguistic features. Note that the ARG sub-element in FEATURES-ARG2 should have a unique name, different from the name of the ARG sub-element in FEATURES-ARG1. The other sub-elements, however, may have the same names as the corresponding ones in FEATURES-ARG1 if they refer to the same annotation type and feature.

- The ARG element is used in both FEATURES-ARG1 and FEATURES-ARG2. It specifies the annotation corresponding to one argument of the relation. It has the following four sub-elements:

- NAME, a unique name for the argument.

- SEMTYPE, the type of the argument value, either “NOMINAL” or “NUMERIC”. Currently only “NOMINAL” is implemented.

- TYPE, the annotation type of the argument.

- FEATURE, the feature whose value, on the annotation of the specified type, identifies the argument. The annotation is regarded as an argument of the relation instance under consideration only if this value equals the value of the instance feature named in <INSTANCE-ARG1>A</INSTANCE-ARG1> (or <INSTANCE-ARG2>B</INSTANCE-ARG2>).

- The ATTRIBUTE_REL element is similar to ATTRIBUTE, but it has no POSITION sub-element. Instead it has two sub-elements, ARG1 and ARG2, relating to the two argument features of the (relation) instance type. In other words, the feature defined in an ATTRIBUTE_REL element is assigned to the instance under consideration if and only if the value of the feature X named in <ARG1>X</ARG1> equals the value of the feature A named in <INSTANCE-ARG1>A</INSTANCE-ARG1>, and the value of the feature Y named in <ARG2>Y</ARG2> equals the value of the feature B named in <INSTANCE-ARG2>B</INSTANCE-ARG2>. Note that for relation learning an ATTRIBUTE_REL is used as the class attribute by giving it an empty <CLASS/> element.
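The ATTRIBUTELIST, WINDOWSIZE and component-value rules above can be illustrated with a short Java sketch. It assumes that an ATTRIBUTELIST becomes one ATTRIBUTE per position in its RANGE, that a WINDOWSIZE element replaces that range only for lists covering position 0, and that each positioned attribute contributes 1 at position 0 and 1.0/|p| elsewhere. The names `AttributeListSketch`, `expand` and `componentValue` are hypothetical and not part of the plugin.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of how an ATTRIBUTELIST could be expanded into positioned ATTRIBUTEs. */
class AttributeListSketch {

    /** One positioned attribute such as Form(-1), with its feature-vector component value. */
    static final class PositionedAttribute {
        final String name;
        final int position;
        final double componentValue;

        PositionedAttribute(String name, int position, double componentValue) {
            this.name = name;
            this.position = position;
            this.componentValue = componentValue;
        }

        /** Label in the style of the NLP feature file header, e.g. "Form(-1)". */
        String label() {
            return name + "(" + position + ")";
        }
    }

    /** Component value for an attribute at position p: 1 if p == 0, otherwise 1.0 / |p|. */
    static double componentValue(int position) {
        return position == 0 ? 1.0 : 1.0 / Math.abs(position);
    }

    /**
     * Expands an ATTRIBUTELIST whose RANGE is from..to. When a WINDOWSIZE element is present
     * (windowLeft and windowRight non-null) it replaces the range, but only for lists whose
     * range covers position 0; any other list is ignored and yields no attributes.
     */
    static List<PositionedAttribute> expand(String name, int from, int to,
                                            Integer windowLeft, Integer windowRight) {
        List<PositionedAttribute> attributes = new ArrayList<>();
        if (windowLeft != null && windowRight != null) {
            if (from > 0 || to < 0) {
                return attributes; // no ATTRIBUTE at position 0: the list is ignored
            }
            from = -windowLeft;
            to = windowRight;
        }
        for (int p = from; p <= to; p++) {
            attributes.add(new PositionedAttribute(name, p, componentValue(p)));
        }
        return attributes;
    }
}
```

For example, `expand("Form", -5, 5, 2, 1)` yields Form(-2) to Form(1), with component values 0.5, 1.0, 1.0 and 1.0.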

11.3 Examples of configuration files for the three learning types

The following are three example configuration files, for information extraction, sentence classification and relation extraction, respectively. Note that a configuration file is in XML format and should be stored in a file with an “.xml” extension.

The first example is for information extraction. The optional settings come first. The surround mode is set to “true”, as this is a chunk learning problem. Next come the filtering settings: ratio is “0.1” and dis is “near”, meaning that the 10% of negative examples closest to the learned SVM hyperplane are removed in the filtering stage, before learning for information extraction. The probability thresholds for the boundary tokens and for the information entity are set to “0.4” and “0.2”, respectively. The probability threshold for classification is also set, to “0.5”, but it is not used in chunk learning with the surround mode set to “true”. multiClassification2Binary is set to “one-vs-others”, meaning that the ML API converts the multi-class classification problem into several binary classification problems using the one-against-all-others approach. For the EVALUATION setting, 2-fold cross-validation is used.

The second part is the ENGINE sub-element, which specifies the learning algorithm. The ML API will use the SVM learning implemented in LibSVM. According to the options, it will use a linear kernel with cost C = 0.7 and 100 MB of cache memory. Additionally, it will use the uneven margins SVM with the uneven margins parameter τ = 0.4.

The last part is the DATASET sub-element, which defines the linguistic features used. It first specifies the “Token” annotation as the instance type. The first ATTRIBUTELIST uses the token’s string as a feature of an instance. The range from “-5” to “5” means that the strings of the current token and of its five preceding and five following tokens are used as features for the current token instance. The next two attribute lists define features based on the token’s capitalisation information and kind, respectively. The ATTRIBUTELIST named “Gaz” uses as features the values of the feature “majorType” of the annotation type “Lookup”. Finally, the ATTRIBUTE element defines the class attribute, because it has a <CLASS/> sub-element. The values of the feature “class” of the annotation type “Mention” are the class labels.

    <?xml version="1.0"?>
    <ML-CONFIG>
      <SURROUND value="true"/>
      <FILTERING ratio="0.1" dis="near"/>
      <PARAMETER name="thresholdProbabilityEntity" value="0.2"/>
      <PARAMETER name="thresholdProbabilityBoundary" value="0.4"/>
      <PARAMETER name="thresholdProbabilityClassification" value="0.5"/>
      <multiClassification2Binary method="one-vs-others"/>
      <EVALUATION method="kfold" runs="2"/>
      <ENGINE nickname="SVM" implementationName="SVMLibSvmJava" options=" -c 0.7 -t 0 -m 100 -tau 0.4 "/>
      <DATASET>
        <INSTANCE-TYPE>Token</INSTANCE-TYPE>
        <ATTRIBUTELIST>
          <NAME>Form</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>Token</TYPE>
          <FEATURE>string</FEATURE>
          <RANGE from="-5" to="5"/>
        </ATTRIBUTELIST>
        <ATTRIBUTELIST>
          <NAME>Orthography</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>Token</TYPE>
          <FEATURE>orth</FEATURE>
          <RANGE from="-5" to="5"/>
        </ATTRIBUTELIST>
        <ATTRIBUTELIST>
          <NAME>Tokenkind</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>Token</TYPE>
          <FEATURE>kind</FEATURE>
          <RANGE from="-5" to="5"/>
        </ATTRIBUTELIST>
        <ATTRIBUTELIST>
          <NAME>Gaz</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>Lookup</TYPE>
          <FEATURE>majorType</FEATURE>
          <RANGE from="-5" to="5"/>
        </ATTRIBUTELIST>
        <ATTRIBUTE>
          <NAME>Class</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>Mention</TYPE>
          <FEATURE>class</FEATURE>
          <POSITION>0</POSITION>
          <CLASS/>
        </ATTRIBUTE>
      </DATASET>
    </ML-CONFIG>
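The filtering step of this example (ratio="0.1", dis="near") can be sketched as follows. The sketch assumes that each training example already carries the decision value of a first-pass SVM, that "closest to the hyperplane" means the smallest absolute decision value, and that ratio × (number of negatives) is rounded down. `NegativeFilterSketch` and its members are hypothetical names, not the plugin's code.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical sketch of FILTERING with dis="near": drop negatives closest to the hyperplane. */
class NegativeFilterSketch {

    /** A training example with its gold label and the decision value of a first-pass SVM. */
    static final class Example {
        final boolean positive;
        final double svmScore;

        Example(boolean positive, double svmScore) {
            this.positive = positive;
            this.svmScore = svmScore;
        }
    }

    /** Removes the fraction `ratio` of negative examples with the smallest |score|. */
    static List<Example> filterNear(List<Example> examples, double ratio) {
        List<Example> negatives = new ArrayList<>();
        for (Example ex : examples) {
            if (!ex.positive) {
                negatives.add(ex);
            }
        }
        // Closest to the hyperplane = smallest absolute decision value.
        negatives.sort(Comparator.comparingDouble(n -> Math.abs(n.svmScore)));
        int toRemove = (int) Math.floor(ratio * negatives.size()); // rounding rule is an assumption
        List<Example> removed = negatives.subList(0, toRemove);

        List<Example> kept = new ArrayList<>();
        for (Example ex : examples) {
            // Example does not override equals, so contains() compares object identity.
            if (!removed.contains(ex)) {
                kept.add(ex);
            }
        }
        return kept;
    }
}
```

Positive examples are never removed by this sketch; only the negatives are ranked and trimmed.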

The following is a configuration file for sentence classification. It first sets the surround mode to “false”, because this is a text classification problem. The next two options allow the label list and the NLP feature list to be updated from the training data. It also specifies the probability thresholds for the entity and the entity boundary. These two settings are not used in text classification problems, but their presence in the configuration file does no harm. The probability threshold for classification is set to “0.5” and is used when the learned model is applied. Evaluation uses the hold-out test method: 66% of the documents in the corpus are selected at random for training and the other 34% are used for testing. There are two evaluation runs, and the results are averaged over them. Note that the file does not specify a method for converting a multi-class classification problem into several binary classification problems, so the default one (one against all others) is used where needed.

The configuration file specifies KNN as the learning algorithm, with the number of neighbours set to 5. Other learning algorithms can be used as well. For example, the ENGINE element of the previous example, which specifies SVM as the learning algorithm, can be put into this configuration file to replace the current one.

In the DATASET element, the annotation “Sentence” is used as the instance type. Two kinds of linguistic features are defined: an NGRAM and an ATTRIBUTE. The n-gram is based on the annotation “Token”. It is a uni-gram, as its NUMBER element has the value 1, and it is based on the two features “root” and “category” of the annotation “Token”. In other words, two tokens are considered the same uni-gram term if and only if they have the same “root” feature and the same “category” feature. The weight of the n-gram is set to 10.0, meaning that its contribution is ten times that of the other feature, the sentence length. Through the first ATTRIBUTE, the feature “sent_size” of the annotation “Sentence” is used as another NLP feature. Finally, the values of the feature “class” of the annotation “Sentence” are the class labels.
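A minimal sketch of the hold-out procedure described above, assuming that documents are identified by name and that the training share is rounded to the nearest whole document. `HoldoutSketch` is a hypothetical class, not the plugin's evaluation code.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Random;

/** Hypothetical sketch of hold-out evaluation: random train/test split, results averaged over runs. */
class HoldoutSketch {

    static final class Split {
        final List<String> training;
        final List<String> testing;

        Split(List<String> training, List<String> testing) {
            this.training = training;
            this.testing = testing;
        }
    }

    /** Randomly puts a fraction `ratio` of the documents into training and the rest into testing. */
    static Split split(List<String> documents, double ratio, Random random) {
        List<String> shuffled = new ArrayList<>(documents);
        Collections.shuffle(shuffled, random);
        int numTraining = (int) Math.round(ratio * shuffled.size());
        return new Split(new ArrayList<>(shuffled.subList(0, numTraining)),
                         new ArrayList<>(shuffled.subList(numTraining, shuffled.size())));
    }

    /** Averages a per-run score (e.g. F1) over all runs. */
    static double average(double[] perRunScores) {
        double sum = 0.0;
        for (double score : perRunScores) {
            sum += score;
        }
        return sum / perRunScores.length;
    }
}
```

With ratio 0.66 and 100 documents, `split` puts 66 documents into training and 34 into testing; calling it once per run and passing the per-run scores to `average` mirrors the two-run averaging described above.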

    <?xml version="1.0"?>
    <ML-CONFIG>
      <SURROUND value="false"/>
      <IS-LABEL-UPDATABLE value="true"/>
      <IS-NLPFEATURELIST-UPDATABLE value="true"/>
      <PARAMETER name="thresholdProbabilityEntity" value="0.2"/>
      <PARAMETER name="thresholdProbabilityBoundary" value="0.42"/>
      <PARAMETER name="thresholdProbabilityClassification" value="0.5"/>
      <EVALUATION method="holdout" runs="2" ratio="0.66"/>
      <ENGINE nickname="KNN" implementationName="KNNWeka" options=" -k 5 "/>
      <DATASET>
        <INSTANCE-TYPE>Sentence</INSTANCE-TYPE>
        <NGRAM>
          <NAME>Sent1gram</NAME>
          <NUMBER>1</NUMBER>
          <CONSNUM>2</CONSNUM>
          <CONS-1>
            <TYPE>Token</TYPE>
            <FEATURE>root</FEATURE>
          </CONS-1>
          <CONS-2>
            <TYPE>Token</TYPE>
            <FEATURE>category</FEATURE>
          </CONS-2>
          <WEIGHT>10.0</WEIGHT>
        </NGRAM>
        <ATTRIBUTE>
          <NAME>SentSize</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>Sentence</TYPE>
          <FEATURE>sent_size</FEATURE>
          <POSITION>0</POSITION>
        </ATTRIBUTE>
        <ATTRIBUTE>
          <NAME>Class</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>Sentence</TYPE>
          <FEATURE>class</FEATURE>
          <POSITION>0</POSITION>
          <CLASS/>
        </ATTRIBUTE>
      </DATASET>
    </ML-CONFIG>
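The effect of WEIGHT on the uni-gram part of the sentence vector can be sketched as below. Two assumptions are made: the normalisation is taken to be Euclidean (L2), which the text does not specify, and the two token features are joined with "_" to form a term. For simplicity the sketch starts from raw term frequencies, whereas this example, which sets no ValueTypeNgram, would use the default tf-idf values. `NgramWeightSketch` is a hypothetical name.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: uni-gram part of a sentence vector, normalised and scaled by WEIGHT. */
class NgramWeightSketch {

    /** Counts uni-gram terms made of two token features, e.g. {root, category}. */
    static Map<String, Double> termFrequencies(List<String[]> tokens) {
        Map<String, Double> tf = new LinkedHashMap<>();
        for (String[] token : tokens) {
            String term = token[0] + "_" + token[1]; // joiner is an assumption
            tf.merge(term, 1.0, Double::sum);
        }
        return tf;
    }

    /** Scales the n-gram part to unit Euclidean length (assumed norm), then multiplies by WEIGHT. */
    static Map<String, Double> normaliseAndWeight(Map<String, Double> values, double weight) {
        double sumOfSquares = 0.0;
        for (double v : values.values()) {
            sumOfSquares += v * v;
        }
        double norm = Math.sqrt(sumOfSquares);
        Map<String, Double> weighted = new LinkedHashMap<>();
        if (norm == 0.0) {
            return weighted; // empty sentence: no n-gram components
        }
        for (Map.Entry<String, Double> entry : values.entrySet()) {
            weighted.put(entry.getKey(), weight * entry.getValue() / norm);
        }
        return weighted;
    }
}
```

Under these assumptions, a WEIGHT of 10.0 gives the n-gram part a Euclidean length of 10, compared with the component value 1 of the sent_size attribute at position 0.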

The last configuration file is for relation extraction. It does not specify any optional settings, so it uses the default values of all of them (see Section 11.2.2 for the default values of all possible settings). For example:

- the surround mode is “false”;

- both the label list and the NLP feature list are updatable;

- the probability threshold for classification is 0.5;

- the one-against-all-others method is used to convert a multi-class problem into binary problems for SVM learning;

- evaluation uses hold-out testing with a ratio of 0.66 and only one run.

The configuration file specifies the learning algorithm as the Naive Bayes method implemented in Weka. However, other learning algorithms can be used as well.

The linguistic features used for relation extraction are more complicated than those for the other two types of NLP learning. For relation learning, the user must specify as the instance type an annotation type covering the two arguments of the relation. The user may also need to specify the annotation type for an argument if features related only to that argument are wanted. In the DATASET sub-element, the instance type is the annotation type “RE_INS”. The two arguments of the instance are specified by the two features “arg1” and “arg2” of “RE_INS”, whose values should be the same argument IDs as those given by the ARG sub-elements of FEATURES-ARG1 and FEATURES-ARG2. The linguistic features related to the first argument are defined in the FEATURES-ARG1 sub-element, where the feature “string” of the annotation type “Token” of the first argument is used as an NLP feature for relation learning. Similarly, the token’s form related to the second argument is used as another NLP feature, as specified in the FEATURES-ARG2 sub-element. The first ATTRIBUTE_REL defines a further feature for relation learning: the feature “t12” of the annotation type “RE_INS” related to the relation instance. The second ATTRIBUTE_REL defines the label: the class labels are the values of the feature “Relation_type” of the annotation type “ACERelation”. The feature “MENTION_ARG1” of “ACERelation” should refer to the identification feature of the first argument annotation (namely the value of the feature “MENTION_ID” of the annotation type “ACEEntity”), and the feature “MENTION_ARG2” should likewise refer to the second argument annotation.

    <?xml version="1.0"?>
    <ML-CONFIG>
      <ENGINE nickname="NB" implementationName="NaiveBayesWeka"/>
      <DATASET>
        <INSTANCE-TYPE>RE_INS</INSTANCE-TYPE>
        <INSTANCE-ARG1>arg1</INSTANCE-ARG1>
        <INSTANCE-ARG2>arg2</INSTANCE-ARG2>
        <FEATURES-ARG1>
          <ARG>
            <NAME>ARG1</NAME>
            <SEMTYPE>NOMINAL</SEMTYPE>
            <TYPE>ACEEntity</TYPE>
            <FEATURE>MENTION_ID</FEATURE>
          </ARG>
          <ATTRIBUTE>
            <NAME>Form</NAME>
            <SEMTYPE>NOMINAL</SEMTYPE>
            <TYPE>Token</TYPE>
            <FEATURE>string</FEATURE>
            <POSITION>0</POSITION>
          </ATTRIBUTE>
        </FEATURES-ARG1>
        <FEATURES-ARG2>
          <ARG>
            <NAME>ARG2</NAME>
            <SEMTYPE>NOMINAL</SEMTYPE>
            <TYPE>ACEEntity</TYPE>
            <FEATURE>MENTION_ID</FEATURE>
          </ARG>
          <ATTRIBUTE>
            <NAME>Form</NAME>
            <SEMTYPE>NOMINAL</SEMTYPE>
            <TYPE>Token</TYPE>
            <FEATURE>string</FEATURE>
            <POSITION>0</POSITION>
          </ATTRIBUTE>
        </FEATURES-ARG2>
        <ATTRIBUTE_REL>
          <NAME>EntityCom1</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>RE_INS</TYPE>
          <ARG1>arg1</ARG1>
          <ARG2>arg2</ARG2>
          <FEATURE>t12</FEATURE>
        </ATTRIBUTE_REL>
        <ATTRIBUTE_REL>
          <NAME>Class</NAME>
          <SEMTYPE>NOMINAL</SEMTYPE>
          <TYPE>ACERelation</TYPE>
          <ARG1>MENTION_ARG1</ARG1>
          <ARG2>MENTION_ARG2</ARG2>
          <FEATURE>Relation_type</FEATURE>
          <CLASS/>
        </ATTRIBUTE_REL>
      </DATASET>
    </ML-CONFIG>
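The ATTRIBUTE_REL matching rule used in this example can be sketched in Java by representing each annotation as a map from feature names to values. This is a simplifying assumption for illustration, not GATE's annotation API; `RelationMatchSketch` and its parameters are hypothetical. With the configuration above, the instance features would be arg1/arg2 on RE_INS and the candidate features MENTION_ARG1/MENTION_ARG2 on ACERelation.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical sketch of the ATTRIBUTE_REL matching rule, with annotations as feature maps. */
class RelationMatchSketch {

    /**
     * Returns the value of `feature` on the first candidate annotation whose features
     * arg1Feature/arg2Feature hold the same values as the instance's features
     * instanceArg1/instanceArg2; empty if no candidate matches.
     */
    static Optional<String> relationFeature(Map<String, String> instance,
                                            String instanceArg1, String instanceArg2,
                                            List<Map<String, String>> candidates,
                                            String arg1Feature, String arg2Feature,
                                            String feature) {
        String first = instance.get(instanceArg1);
        String second = instance.get(instanceArg2);
        if (first == null || second == null) {
            return Optional.empty();
        }
        for (Map<String, String> candidate : candidates) {
            // Both arguments must match, in order: ARG1 with INSTANCE-ARG1 and ARG2 with INSTANCE-ARG2.
            if (first.equals(candidate.get(arg1Feature)) && second.equals(candidate.get(arg2Feature))) {
                return Optional.ofNullable(candidate.get(feature));
            }
        }
        return Optional.empty(); // no match: the feature is not assigned to this instance
    }
}
```

Because the comparison is ordered, an instance whose arguments are swapped relative to the relation annotation does not receive the feature.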

11.4 How to use the ML API

The ML API implements the procedure for using supervised machine learning for NLP, which generally has two steps: training and application. The training step learns models from labelled data. The application step applies the learned models to unlabelled data to add labels. Therefore, to use supervised ML for NLP, one needs some labelled data, obtained either by manual annotation or from other resources. One also needs to determine which linguistic features are used in training (the same features must also be used in application). Note that in this implementation every feature is based on a GATE annotation type and one annotation feature of that type. Finally, one must decide which learning algorithm to use.

Based on the general procedure, we explain how to use the ML API step by step in the following.

- Annotate some documents with the labels that you want the ML API to learn. The labels should be represented by the values of a feature of one GATE annotation type.

- Determine the linguistic features that you want the ML API to use for learning.

- Use GATE PRs to obtain those linguistic features, so that the documents for training or application actually contain them. ANNIE provides many useful features. Other PRs, such as the GATE morphological analyser and the parsers, may produce useful features as well. Sometimes you may need to write JAPE grammars to produce the features you want. Note that all the features are represented as values of features of GATE annotation types, which should be specified in the DATASET element of the configuration file.

- Create an XML configuration file for your learning problem. The file should contain one DATASET element specifying the NLP features used, one ENGINE element specifying the learning algorithm, and any optional settings needed. (Tip: it may be easier to copy one of the configuration files presented above and modify it for your problem than to write a configuration file from scratch.)

- Load the training documents containing the required annotations (in one annotation set) for the linguistic features and the label, and put them into a corpus.

- Load the ML API into the GATE GUI. First, load the plugin named “learning” into GATE using the Manage CREOLE Plugins tool, if it has not been loaded already. Then create a PR from “Batch Learning PR” in the list of available PRs, providing a configuration file for the ML plugin. Put the PR into a Corpus Pipeline application, and add the corpus containing the training documents to the application. Set inputASName to the annotation set containing the annotations for the linguistic features and labels.

- Set the run-time parameter learningMode to “TRAINING” to learn a model from the training data, or to “EVALUATION” to evaluate on the training data and obtain results. When using “EVALUATION” mode, make sure that outputASName is set to the same annotation set as inputASName. (Tip: it may save time to try “EVALUATION” mode on a small number of documents first, to make sure that the ML API works on your problem and produces reasonable results, before training on the full data.)

- To apply the learned model to new documents, load them into GATE and pre-process them with exactly the same PRs as were used to pre-process the training documents. Then load the ML API with the same configuration file as for training, set learningMode to “APPLICATION”, and run the ML API on the corpus containing the new documents. The application results, namely new annotations containing the labels, are added to the annotation set specified by outputASName.

- If you just want the feature files produced by the system and do not want to do any learning or application, select the learning mode “ProduceFeatureFilesOnly”.

11.5 The outputs of the ML API

The ML API produces several types of output. First, it outputs information about the learning settings. This information is printed in the Messages window of the GATE GUI and also written to the log file “logFileForNLPLearning.save”. The amount of information displayed can be set via the VERBOSITY parameter in the XML configuration file. The main output of the learning system depends on the usage mode: in training mode the system produces the learned models; in application mode it annotates the documents using the learned model; in evaluation mode it displays the evaluation results; and in ProduceFeatureFilesOnly mode it produces several feature files for the current corpus. The outputs of the different learning modes are explained below.

Note that all the files produced by the ML API, including the log file, are written to the sub-directory “savedFiles” of the directory containing the XML configuration file.

11.5.1 Training results

When the ML API is in the training mode, its main output is the learned model stored in one file named “learnedModels.save”. For the SVM, the learned model file is a text file. For the learning algorithms implemented in Weka, the model file is a binary file. The output also includes the feature files described in subsection 11.5.4.

11.5.2 Application results

The main application result is the annotations added to the documents by applying the ML model. The type of these annotations is specified in the class element of the configuration file, and the class label of each annotation is the value of the annotation feature also specified in the class element. Each annotation has another feature, named “prob”, which gives the confidence of the learned model in this annotation.

11.5.3 Evaluation results

The ML API outputs the evaluation results for each run of the evaluation and also the results averaged over all runs. For each run, it first prints the names of the documents in the corpus used for training and for testing, respectively. It then displays the evaluation results of the run: first the results for each class label and then the micro-averaged results over all labels. For each label, it presents the name of the label, the number of instances belonging to the label in the training data, and the F-measure results on the testing data: the numbers of correct, partially correct, spurious and missing instances in the testing data, and two types of F-measure results (precision, recall and F1), strict and lenient. The F-measure results are obtained using the AnnotationDiff tool, which is described in Chapter 13. Finally, the system presents the means of the results over all runs, for each label and for the micro-averaged results.
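The strict and lenient measures can be written down as follows, assuming the usual AnnotationDiff convention that strict scoring counts partially correct instances as wrong and lenient scoring counts them as correct. Returning 0 when a denominator is zero is an assumption of this sketch, and `FMeasureSketch` is a hypothetical name.

```java
/** Sketch of strict and lenient precision/recall/F1 from AnnotationDiff-style counts. */
class FMeasureSketch {

    /** Strict counts partially correct instances as wrong; lenient counts them as correct. */
    static double precision(int correct, int partial, int spurious, boolean lenient) {
        int denominator = correct + partial + spurious;
        int matched = lenient ? correct + partial : correct;
        return denominator == 0 ? 0.0 : (double) matched / denominator; // 0 for empty output (assumed)
    }

    static double recall(int correct, int partial, int missing, boolean lenient) {
        int denominator = correct + partial + missing;
        int matched = lenient ? correct + partial : correct;
        return denominator == 0 ? 0.0 : (double) matched / denominator; // 0 for empty key (assumed)
    }

    /** Harmonic mean of precision and recall. */
    static double f1(double precision, double recall) {
        return precision + recall == 0.0 ? 0.0 : 2 * precision * recall / (precision + recall);
    }
}
```

Micro-averaged results would be obtained by summing the counts over all labels before applying the same formulas.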

11.5.4 Feature files

The ML API produces several feature files from the documents with GATE annotations. These feature files can be used, for example, to evaluate learning algorithms not implemented in this plugin. The formats of these files are described below. Note that all the data files described below can be obtained by setting the run-time parameter learningMode to “ProduceFeatureFilesOnly”; some of them are also produced in the other learning modes.

NLP feature file. This file, named NLPFeatureData.save, contains the NLP features of the instances, as defined in the configuration file. The first few lines of an NLP feature file for information extraction are as follows:

    Class(es) Form(-1) Form(0) Form(1) Ortho(-1) Ortho(0) Ortho(1)
    0 ft-airlines-27-jul-2001.xml 512
    1 Number_BB _NA[-1] _Form_Seven _Form_UK[1] _NA[-1] _Ortho_upperInitial _Ortho_allCaps[1]
    1 Country_BB _Form_Seven[-1] _Form_UK _Form_airlines[1] _Ortho_upperInitial[-1] _Ortho_allCaps _Ortho_lowercase[1]
    0 _Form_UK[-1] _Form_airlines _Form_including[1] _Ortho_allCaps[-1] _Ortho_lowercase _Ortho_lowercase[1]
    0 _Form_airlines[-1] _Form_including _Form_British[1] _Ortho_lowercase[-1] _Ortho_lowercase _Ortho_upperInitial[1]
    1 Airline_BB _Form_including[-1] _Form_British _Form_Airways[1] _Ortho_lowercase[-1] _Ortho_upperInitial _Ortho_upperInitial[1]
    1 Airline _Form_British[-1] _Form_Airways _Form_,[1] _Ortho_upperInitial[-1] _Ortho_upperInitial _NA[1]
    0 _Form_Airways[-1] _Form_, _Form_Virgin[1] _Ortho_upperInitial[-1] _NA _Ortho_upperInitial[1]

The first line of the NLP feature file lists the names of all features used. The number in parentheses following a feature name indicates the position of the feature. For example, “Form(-1)” means the form of the token immediately before the current token, and “Form(0)” means the form of the current token. The NLP features of all instances are listed one document after another. For each document, the first line shows the index of the document, the document’s name and the number of instances in the document, as in the second line above. Each following line corresponds to one instance of the document, in order of occurrence. In the line for an instance, the first item is a number n, the number of class labels of the instance, followed by the n labels. If the instance is the first instance of an entity, its label has the suffix “_BB”. The remaining items are the NLP features of the instance, in the order listed in the first line of the file. Each NLP feature consists of the feature’s name and value, separated by “_”. An NLP feature may end with an integer in square brackets “[]”, giving the position of the feature relative to the current instance; if there is no such integer, the feature is at position 0.
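The layout of an NLP feature item can be made concrete with a small parser. It assumes that attribute names contain no "_" (so the first "_" after the leading one separates name from value) and treats `_NA` as a placeholder for a missing feature, which is our reading of the example above. `NlpFeatureTokenSketch` is a hypothetical name.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical parser for one item of NLPFeatureData.save, e.g. "_Form_UK[1]". */
class NlpFeatureTokenSketch {

    /** A parsed NLP feature; name and value are null for the "_NA" placeholder. */
    static final class ParsedFeature {
        final String name;
        final String value;
        final int position;

        ParsedFeature(String name, String value, int position) {
            this.name = name;
            this.value = value;
            this.position = position;
        }
    }

    // Optional trailing position such as "[-1]" or "[1]"; no suffix means position 0.
    private static final Pattern WITH_POSITION = Pattern.compile("(.*)\\[(-?\\d+)\\]");

    static ParsedFeature parse(String item) {
        String body = item;
        int position = 0;
        Matcher m = WITH_POSITION.matcher(item);
        if (m.matches()) {
            body = m.group(1);
            position = Integer.parseInt(m.group(2));
        }
        if (body.startsWith("_")) {
            body = body.substring(1);
        }
        if (body.equals("NA")) {
            return new ParsedFeature(null, null, position); // assumed: missing feature
        }
        int separator = body.indexOf('_'); // assumes attribute names contain no "_"
        if (separator < 0) {
            throw new IllegalArgumentException("No name/value separator in " + item);
        }
        return new ParsedFeature(body.substring(0, separator), body.substring(separator + 1), position);
    }
}
```

For example, `parse("_Form_UK[1]")` gives name "Form", value "UK" and position 1.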

Feature vector file. This file, named featureVectorsData.save, stores the feature vector of each instance in sparse format. The first few lines of the feature vector file corresponding to the NLP feature file above are as follows:

    0 512 ft-airlines-27-jul-2001.xml
    1 2 1 2 439:1.0 761:1.0 100300:1.0 100763:1.0
    2 2 3 4 300:1.0 763:1.0 50439:1.0 50761:1.0 100440:1.0 100762:1.0
    3 0 440:1.0 762:1.0 50300:1.0 50763:1.0 100441:1.0 100762:1.0
    4 0 441:1.0 762:1.0 50440:1.0 50762:1.0 100020:1.0 100761:1.0
    5 1 5 20:1.0 761:1.0 50441:1.0 50762:1.0 100442:1.0 100761:1.0
    6 1 6 442:1.0 761:1.0 50020:1.0 50761:1.0 100066:1.0
    7 0 66:1.0 50442:1.0 50761:1.0 100443:1.0 100761:1.0

The feature vectors are also listed one document after another. For each document, the first line shows the index of the document, the number of instances in the document and the document’s name. Each following line corresponds to one instance of the document. The first item in an instance’s line is the index of the instance in the document. The second item is a number n, the number of labels of the instance, and the following n items are the indexes of those labels.

For text classification and relation learning, the label index comes directly from the label list file described below. For chunk learning, the label index in the feature vector file is more complicated. If an instance (e.g. a token) is the first one of a chunk with label index k, it has the label index 2k - 1, as for the fifth instance above. If it is the last instance of the chunk, it has the label index 2k, as for the sixth instance. If the instance is both the first and the last one of the chunk (i.e. the chunk consists of a single instance), it has two label indexes, 2k - 1 and 2k, as for the first (and the second) instance.
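The chunk label encoding can be expressed as a pair of small functions. The text describes only the first and last instances of a chunk, so returning no label index for an instance that is neither is an assumption of this sketch; `ChunkLabelSketch` is a hypothetical name.

```java
/** Hypothetical helpers for the chunk label indexes used in featureVectorsData.save. */
class ChunkLabelSketch {

    /** Label indexes for an instance of a chunk whose label has index k in the label list. */
    static int[] indexes(int k, boolean firstOfChunk, boolean lastOfChunk) {
        if (firstOfChunk && lastOfChunk) {
            return new int[] {2 * k - 1, 2 * k}; // single-instance chunk
        }
        if (firstOfChunk) {
            return new int[] {2 * k - 1};
        }
        if (lastOfChunk) {
            return new int[] {2 * k};
        }
        return new int[0]; // assumption: inner instances carry no label index
    }

    /** Recovers k from an encoded index: 2k - 1 and 2k both map back to k. */
    static int labelOf(int encoded) {
        return (encoded + 1) / 2;
    }

    /** Odd indexes mark the first instance of a chunk, even ones the last. */
    static boolean isStart(int encoded) {
        return encoded % 2 == 1;
    }
}
```

For the Airline chunk in the example (label index 3 in the label list below), `indexes(3, true, false)` gives {5} and `indexes(3, false, true)` gives {6}, matching the fifth and sixth instances.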

The items following the label item are the non-zero components of the feature vector. Each component is represented by two numbers separated by “:”. The first number is the dimension of the component in the feature vector, and the second one is the value of the component.
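One instance line of featureVectorsData.save can be read back as follows. The sketch assumes that items are separated by whitespace, as in the example above; `FeatureVectorLineSketch` is a hypothetical class.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical reader for one instance line of featureVectorsData.save. */
class FeatureVectorLineSketch {

    static final class Instance {
        final int index;                       // index of the instance in its document
        final int[] labels;                    // label indexes (possibly none)
        final Map<Integer, Double> components; // dimension -> value, non-zero components only

        Instance(int index, int[] labels, Map<Integer, Double> components) {
            this.index = index;
            this.labels = labels;
            this.components = components;
        }
    }

    static Instance parse(String line) {
        String[] items = line.trim().split("\\s+");
        int index = Integer.parseInt(items[0]);
        int numLabels = Integer.parseInt(items[1]);
        int[] labels = new int[numLabels];
        for (int i = 0; i < numLabels; i++) {
            labels[i] = Integer.parseInt(items[2 + i]);
        }
        Map<Integer, Double> components = new LinkedHashMap<>();
        for (int i = 2 + numLabels; i < items.length; i++) {
            String[] pair = items[i].split(":"); // "dimension:value"
            components.put(Integer.parseInt(pair[0]), Double.parseDouble(pair[1]));
        }
        return new Instance(index, labels, components);
    }
}
```

Applied to the fifth instance line above, `parse` returns index 5, the single label index 5 and six non-zero components.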

Label list file. This file, named LabelsList.save, stores a list of labels and their indexes. The following is part of a label list; each line shows one label name and its index in the list.

    Airline 3
    Bank 13
    CalendarMonth 11
    CalendarYear 10
    Company 6
    Continent 8
    Country 2
    CountryCapital 15
    Date 21
    DayOfWeek 4

NLP feature list. It has the name NLPFeaturesList.save and contains a list of NLP features and their indexes in the list. The following are the first few lines of an NLP feature list file.

    totalNumDocs=14915
    _EntityType_Date 13 1731
    _EntityType_Location 170 1081
    _EntityType_Money 523 3774
    _EntityType_Organization 12 2387
    _EntityType_Person 191 421
    _EntityType_Unknown 76 218
    _Form_’’ 112 775
    _Form_$ 527 74
    _Form_’ 508 37
    _Form_’s 63 731
    _Form_( 526 111

The first line of the file gives the number of instances from which the NLP features were collected; this number is used to compute the so-called idf in document or sentence classification. Each of the following lines is for one unique NLP feature. The first item in the line is the NLP feature, a combination of the feature name defined in the configuration file and the feature value. The second item is a positive integer, the index of the feature in the list. The last item is the number of instances in which the feature occurs, which is needed for computing the idf.

N-grams (or language model) file. This file, named NgramList.save, can only be produced by setting the learning mode to “ProduceFeatureFilesOnly”. To produce the n-gram data, a very simple configuration file can be used: it may contain only the DATASET element, and that element may contain only an NGRAM element, specifying the type of n-gram, and an INSTANCE-TYPE element, defining the part of the document from which the n-gram data are created. The NGRAM element in the configuration file specifies which type of n-grams the ML PR produces (see Section 11.2.2 for an explanation of the n-gram definition). For example, if you specify a bi-gram based on the string form of Token, you will obtain a list of bi-grams from the corpus used. The following are the first lines of a bi-gram list based on the token’s form, obtained from 3 documents.

## The following 2-gram were obtained from 3 documents or examples

Aug<>, 3
Female<>; 3
Human<>; 3
2004<>Aug 3
;<>Female 3
.<>The 3
of<>a 3
)<>: 3
,<>and 3
to<>be 3
;<>Human 3

The two terms of a bi-gram are separated by “<>”. The number following each n-gram is the number of occurrences of that n-gram in the corpus. The list is sorted by decreasing number of occurrences, so the most frequent n-grams in the corpus come first.
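The following Python sketch counts n-grams over token strings and writes them in the format described above: terms joined by “<>”, the occurrence count after each n-gram, and the most frequent n-grams first. Documents are plain lists of strings standing in for Token annotations; the function names are hypothetical, and breaking ties alphabetically is an assumption, since the tie order used by the ML PR is not specified.

```python
from collections import Counter


def ngram_counts(docs, n=2):
    """Count n-grams of token strings across all documents in a corpus."""
    counts = Counter()
    for tokens in docs:
        for i in range(len(tokens) - n + 1):
            counts["<>".join(tokens[i:i + n])] += 1
    return counts


def format_ngram_list(counts, n, num_docs):
    # Header mirrors the sample file; ordering is by decreasing frequency,
    # with ties broken alphabetically (an assumption).
    header = f"## The following {n}-gram were obtained from {num_docs} documents or examples"
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [header] + [f"{gram} {freq}" for gram, freq in ranked]


docs = [["to", "be", "or", "not", "to", "be"]]
for line in format_ngram_list(ngram_counts(docs, 2), 2, len(docs)):
    print(line)
```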

The n-gram data can be based on any features of the annotations available in the documents. Hence the ML PR can produce not only conventional n-gram data based on the token’s form or lemma, but also n-grams based on, e.g., the token’s POS, a combination of the token’s POS and form, or any feature of the sentence annotation (see Section 11.2.2 for how to define different types of n-gram). The n-grams defined and produced by the ML PR can therefore be regarded as generalised n-grams.
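As an illustration of such generalised n-grams, this Python sketch builds each n-gram term from one or more token features rather than from the string alone. Tokens are plain dictionaries standing in for GATE Token annotations, using the feature names `string` and `category` (POS) found on ANNIE tokens; the function name and the “_” used to join combined features are hypothetical choices, not the ML PR's actual encoding.

```python
from collections import Counter


def generalised_ngrams(tokens, n, features):
    """Count n-grams whose terms combine the named features of each token."""
    terms = ["_".join(tok[f] for f in features) for tok in tokens]
    return Counter("<>".join(terms[i:i + n]) for i in range(len(terms) - n + 1))


tokens = [
    {"string": "The", "category": "DT"},
    {"string": "bank", "category": "NN"},
    {"string": "raised", "category": "VBD"},
    {"string": "rates", "category": "NNS"},
]
pos_bigrams = generalised_ngrams(tokens, 2, ["category"])            # POS only
mixed_bigrams = generalised_ngrams(tokens, 2, ["category", "string"])  # POS + form
print(pos_bigrams)
print(mixed_bigrams)
```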

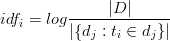

Document-term matrix file. The file has the name documentByTermMatrix.save and can only be produced by selecting the learning mode “ProduceFeatureFilesOnly”. A document-term matrix gives the weight of each term in each document (see Section 9.19 for more explanation). Three types of weight are currently implemented: binary, term frequency (tf), and tf-idf. The binary weight is the simplest: it is 1 if the term appears in the document and 0 otherwise. tf is the number of occurrences of the term in the document. tf-idf, which is popular in information retrieval and text mining, is the product of tf and idf, where idf (inverse document frequency) is defined as
$$\mathrm{idf}_i = \log \frac{|D|}{|\{d_j : t_i \in d_j\}|}$$

where $|D|$ is the total number of documents in the corpus, and $|\{d_j : t_i \in d_j\}|$ is the number of documents in which the term $t_i$ appears. The type of weight is specified by the sub-element ValueTypeNgram in the DATASET element in the configuration file (see Section 11.2.2).
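The three weight types can be sketched in Python as follows, with documents given as lists of terms and the matrix stored sparsely (absent terms implicitly have weight 0). The `value_type` strings `"binary"`, `"tf"` and `"tfidf"` are illustrative only and are not the values accepted by ValueTypeNgram; the natural logarithm in the idf is likewise an assumption.

```python
import math
from collections import Counter


def doc_term_matrix(docs, value_type="tfidf"):
    """Return one {term: weight} dict per document (hypothetical helper)."""
    tfs = [Counter(doc) for doc in docs]
    # Document frequency: the number of documents containing each term.
    df = Counter(term for tf in tfs for term in tf)
    n_docs = len(docs)
    rows = []
    for tf in tfs:
        row = {}
        for term, count in tf.items():
            if value_type == "binary":
                row[term] = 1
            elif value_type == "tf":
                row[term] = count
            else:  # tf-idf = tf * log(|D| / df)
                row[term] = count * math.log(n_docs / df[term])
        rows.append(row)
    return rows


docs = [["a", "a", "b"], ["b", "c"]]
print(doc_term_matrix(docs, "tfidf"))
```

A term that occurs in every document, such as `b` here, gets a tf-idf weight of 0, which is why tf-idf favours terms that distinguish documents from one another.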


As with the n-gram data, a very simple configuration file suffices to produce the document-term matrix: for example, it can contain only the DATASET element, which in turn contains just two elements, the INSTANCE-TYPE element defining the part of the document from which the terms are counted, and an NGRAM element specifying the type of n-gram. As mentioned above, the element ValueTypeNgram specifies the type of value used in the matrix; if it is absent, the default type tf-idf is used. The conventional document-term matrix is produced by uni-grams based on the token’s form or lemma, with an instance type covering the whole document. Any other choice of n-gram or instance type results in a generalised document-term matrix.

The following excerpt is from the beginning of a document-term matrix file produced with uni-grams based on the token’s form. It shows some of the terms in the document named “27.xml” together with their tf values. Each term is separated from its tf by “:”. The terms are sorted by character code, so punctuation and digits come before upper-case terms, which come before lower-case ones.

0 Documentname="27.xml", has 1 parts:

":2 (:6 ):6 ,:14 -:1 .:16 /:1 124:1 2004:1 22:1 29:1 330:1 54:1 8:2 ::5 ;:11 Abstract:1 Adaptation:1 Adult:1 Atopic:2 Attachment:3 Aug:1 Bindungssicherheit:1 Cross-:1 Dermatitis:2 English:1 F-SOZU:1 Female:1 Human:1 In:1 Index:1 Insecure:1 Interpersonal:1 Irrespective:1 It:1 K-:1 Lebensqualitat:1 Life:1 Male:1 NSI:2 Neurodermitis:2 OT:1 Original:1 Patients:1 Psychological:1 Psychologie:1 Psychosomatik:1 Psychotherapie:1 Quality:1 Questionnaire:1 RSQ:1 Relations:1 Relationship:1 SCORAD:1 Scales:1 Sectional:1 Securely:1 Severity:2 Skindex-:1 Social:1 Studies:1 Suffering:1 Support:1 The:1 Title:1 We:3 [:1 ]:1 a:4 absence:1 affection:1 along:2 amount:1 an:1 and:9 as:1 assessed:1 association:2 atopic:5 attached:7
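For readers who want to load this file outside GATE, here is a minimal Python sketch that parses a document header line and a row of `term:value` pairs. The helper names are hypothetical, and the header regular expression assumes exactly the layout of the sample above. Each pair is split on its last colon, because a term may itself be “:” (as in `::5`).

```python
import re


def parse_matrix_header(line):
    """Parse e.g. '0 Documentname="27.xml", has 1 parts:' (layout assumed)."""
    m = re.match(r'(\d+) Documentname="([^"]*)", has (\d+) parts:', line)
    if m is None:
        raise ValueError(f"unrecognised header: {line!r}")
    return int(m.group(1)), m.group(2), int(m.group(3))


def parse_matrix_row(line):
    """Turn 'term:value term:value ...' into a {term: value} dict."""
    row = {}
    for item in line.split():
        term, value = item.rsplit(":", 1)  # last colon separates the value
        row[term] = float(value)           # float also covers tf-idf weights
    return row


print(parse_matrix_header('0 Documentname="27.xml", has 1 parts:'))
print(parse_matrix_row('":2 (:6 ::5 We:3 atopic:5'))
```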

A list of names of the documents processed. The file has the name docsName.save and can only be produced by selecting the learning mode “ProduceFeatureFilesOnly”. It contains the names of all the documents processed. The first line gives the number of documents in the list, and each subsequent line lists one document’s name. The first lines of such a file are shown below.

##totalDocs=3

ft-bank-of-england-02-aug-2001.xml
ft-airtours-08-aug-2001.xml
ft-airlines-27-jul-2001.xml

A list of names of the documents selected for active learning. The file has the name ALSelectedDocs.save and is a plain text file. It is produced by selecting the learning mode “ProduceFeatureFilesOnly”. The file contains the names of the documents that have been selected for annotation and training in the active learning process. It is used by the “RankingDocsForAL” learning mode to exclude those documents from the ranking. Whenever one or more documents are selected for annotation and training, their names should be added to this file, one document name per line.

A list of names of the documents ranked for active learning. The file has the name ALRankedDocs.save and can only be produced by selecting the learning mode “RankingDocsForAL”. It lists the documents ranked according to their usefulness for learning, with the most useful documents at the top. The first line gives the total number of documents in the list. Each of the other lines lists one document and the averaged confidence score obtained when classifying it; in the example below, the documents appear in increasing order of this score. An example file is shown below.

##numDocsRanked=3

ft-airlines-27-jul-2001.xml_000201 8.61744
ft-bank-of-england-02-aug-2001.xml_000221 8.672693
ft-airtours-08-aug-2001.xml_000211 9.82562
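The ranking step can be sketched in Python as follows, assuming, as the example file suggests, that a lower averaged confidence means a more useful document. How the per-instance confidence scores are obtained from the SVM models is not shown. The function name is hypothetical, and the numeric formatting is Python's default, not necessarily that of ALRankedDocs.save.

```python
def rank_for_active_learning(scores, selected):
    """Rank unlabelled documents for active learning (hypothetical helper).

    scores:   dict mapping document name -> list of confidence scores,
              one per classified instance in that document.
    selected: names already listed in ALSelectedDocs.save; these are skipped.
    """
    averaged = {
        name: sum(vals) / len(vals)
        for name, vals in scores.items()
        if name not in selected and vals  # skip selected docs and empty lists
    }
    # Lowest averaged confidence first, i.e. the most useful documents first.
    ranked = sorted(averaged.items(), key=lambda kv: kv[1])
    lines = [f"##numDocsRanked={len(ranked)}"]
    lines += [f"{name} {score}" for name, score in ranked]
    return lines


example = {"docA": [9.0, 10.0], "docB": [8.0], "docC": [1.0]}
print("\n".join(rank_for_active_learning(example, {"docC"})))
```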

1 For the evaluation mode, the system divides the corpus into two parts, uses one part to learn a model, applies the model to the other part, and finally outputs the results on the testing part (see Sub-section 11.2.2 for the evaluation methods and Section 11.5 for an explanation of the evaluation results).

2 In the APPLICATION mode, the number of documents processed in one application run can be specified by a parameter in the configuration file; the default value of this parameter is 1. If the parameter is set to 1, the ML API applies the learned model to the documents in the corpus one by one. In other words, in this case the ML API behaves like a normal GATE PR and can be followed by other PRs in a pipeline. If the parameter is given a larger value, application is usually faster but may consume more memory.
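The batching behaviour in this footnote can be pictured with a small Python generator that groups the corpus into runs of `batch_size` documents, where a batch size of 1 corresponds to the default one-document-at-a-time behaviour. `batches` and `batch_size` are hypothetical names, not the configuration parameter itself, and the code does not model the memory trade-off.

```python
from itertools import islice


def batches(documents, batch_size=1):
    """Yield successive groups of up to batch_size documents.

    A ValueError is raised on first iteration if batch_size < 1.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    it = iter(documents)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:
            return
        yield batch


for group in batches(["d1.xml", "d2.xml", "d3.xml"], 2):
    print(group)  # a learned model would be applied to each group in turn
```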

3 For k-fold cross-validation, the system first sorts the documents alphabetically by name, then divides the sorted list into k partitions of equal size, and finally uses each partition in turn as the testing set, with all the other documents as the training set. For the hold-out test, the system randomly selects some documents as testing data and uses all the other documents as training data.
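Both splitting schemes are sketched below in Python. When the number of documents is not divisible by k, the first partitions each receive one extra document; this is an assumption, since the footnote only says the partitions have equal size. The names `k_fold_splits`, `hold_out_split`, `test_ratio` and `seed` are hypothetical.

```python
import random


def k_fold_splits(doc_names, k):
    """Yield (train, test) pairs over k consecutive partitions of sorted names."""
    names = sorted(doc_names)
    size, extra = divmod(len(names), k)
    start = 0
    for i in range(k):
        end = start + size + (1 if i < extra else 0)  # spread the remainder
        test = names[start:end]
        train = names[:start] + names[end:]
        yield train, test
        start = end


def hold_out_split(doc_names, test_ratio, seed=None):
    """Randomly pick a fraction of documents for testing; return (train, test)."""
    names = list(doc_names)
    random.Random(seed).shuffle(names)
    n_test = round(len(names) * test_ratio)
    return names[n_test:], names[:n_test]


for train, test in k_fold_splits(["c.xml", "a.xml", "b.xml", "e.xml", "d.xml"], 2):
    print("train:", train, "test:", test)
```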

4 The two methods may appear under different names in some publications, but the methods themselves are the same. Suppose we have a multi-class classification problem with n classes. For the one against others method, one binary classification problem is derived for each of the n classes, in which the examples belonging to that class are the positive examples and all other examples in the training set are the negative examples. In contrast, for the one against another one method, one binary classification problem is derived for each pair (c1, c2) of the n classes, in which the training examples belonging to class c1 are the positive examples and those belonging to class c2 are the negative examples.
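The two decompositions can be made concrete with a short Python sketch that turns labelled examples into binary training sets, one per class for one against others and one per unordered class pair (n(n-1)/2 in total) for one against another. Examples are `(features, label)` pairs and binary labels are +1/-1; the function names are hypothetical and the code does not train any classifier.

```python
from itertools import combinations


def one_against_others(examples, classes):
    """n binary problems: examples of class c are positive, all others negative."""
    return {
        c: [(x, 1 if y == c else -1) for x, y in examples]
        for c in classes
    }


def one_against_another(examples, classes):
    """n(n-1)/2 binary problems: only examples of c1 (+1) and c2 (-1) are kept."""
    return {
        (c1, c2): [(x, 1 if y == c1 else -1) for x, y in examples if y in (c1, c2)]
        for c1, c2 in combinations(classes, 2)
    }


data = [("x1", "A"), ("x2", "B"), ("x3", "C")]
print(one_against_others(data, ["A", "B", "C"])["A"])
print(one_against_another(data, ["A", "B", "C"])[("A", "B")])
```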

5 If you do not specify the annotation feature for an “ATTRIBUTE” sub-element, the ML API ignores that sub-element. Therefore, if an annotation type you want to use has no annotation feature, you should add a feature to it and assign the same value to that feature for all annotations of that type.

6 Given the current instance annotation A, the position information is used to determine another instance annotation B. The linguistic feature obtained by this element for instance A is the feature of the annotation C that is defined by this element and overlaps with annotation B. Position 0 means that the instance annotation B is A itself, -1 means that B is the first annotation preceding A, and 1 means that B is the first annotation following A.

Please note that if annotation A contains more than one annotation C (e.g. C refers to a token and A to a named entity containing several tokens), then there is more than one annotation C at position 0 relative to A, in which case the current implementation simply picks the first annotation C. Therefore, if annotation A may contain more than one annotation C, it is better to characterise the feature with, e.g., an NGRAM-type feature than with an ATTRIBUTE feature.
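The position lookup described in footnote 6 can be sketched in Python as follows. `Ann` is a hypothetical stand-in for a GATE annotation (character offsets plus a feature map) and `positional_feature` is a hypothetical helper. Instance annotations are assumed to be ordered by start offset, and, matching the footnote, only the first overlapping annotation C is used.

```python
from dataclasses import dataclass, field


@dataclass
class Ann:
    # Minimal stand-in for a GATE annotation: offsets and features.
    start: int
    end: int
    features: dict = field(default_factory=dict)


def overlaps(a, b):
    return a.start < b.end and b.start < a.end


def positional_feature(instances, i, position, candidates, feature):
    """Feature of annotation C for instance A = instances[i].

    B = instances[i + position] (0: A itself, -1: preceding, 1: following).
    Returns the feature of the first candidate C overlapping B, or None
    when B or C does not exist.
    """
    j = i + position
    if not 0 <= j < len(instances):
        return None
    b = instances[j]
    for c in sorted(candidates, key=lambda a: a.start):
        if overlaps(b, c):
            return c.features.get(feature)  # first overlapping C only
    return None


tokens = [Ann(0, 5, {"string": "Hello"}), Ann(6, 11, {"string": "World"}),
          Ann(12, 18, {"string": "Wide"}), Ann(19, 24, {"string": "Webby"})]
entities = [Ann(0, 5), Ann(6, 18), Ann(19, 24)]
print(positional_feature(entities, 1, 0, tokens, "string"))  # first token of B
```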

7 For example, suppose a user does sentence classification with two features, the uni-grams of the tokens in a sentence and the length of the sentence, and considers the uni-gram feature more important than the sentence length. The user can then set the weight sub-element of the n-gram element to a number greater than 1.0 (such as 10.0).
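One plausible reading of such a weight is sketched below in Python: each feature block's values are multiplied by its weight before the blocks are combined into one sparse feature vector. This multiplication is an assumption, not taken from the ML PR's code. `weighted_vector` and the block names are hypothetical, and feature indexes are assumed to be unique across blocks.

```python
def weighted_vector(blocks, weights):
    """Combine feature blocks into one sparse vector, scaling each block.

    blocks:  dict block_name -> {feature_index: value}
    weights: dict block_name -> weight (blocks not listed default to 1.0)
    """
    vector = {}
    for name, block in blocks.items():
        w = weights.get(name, 1.0)
        for idx, value in block.items():
            vector[idx] = value * w
    return vector


sentence = {"ngram": {1: 0.5, 2: 1.0}, "length": {3: 12.0}}
print(weighted_vector(sentence, {"ngram": 10.0}))
```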

8 If a feature of a relation instance is determined by only one argument of the relation rather than by both, the feature can be defined in the element FEATURES-ARG1 or the element FEATURES-ARG2. If a feature depends on both arguments, it should be defined in the element ATTRIBUTE_REL.