Chapter 13

Performance Evaluation of Language Analysers

When you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meager and unsatisfactory kind: it may be the beginning of knowledge, but you have scarcely in your thoughts advanced to the stage of science. (Kelvin)

Not everything that counts can be counted, and not everything that can be counted counts. (Einstein)

GATE provides two useful tools for automatic evaluation: the AnnotationDiff tool and the Benchmarking Tool. These are particularly useful not just as a final measure of performance, but as a tool to aid system development by tracking progress and evaluating the impact of changes as they are made. The evaluation tool (AnnotationDiff) enables automated performance measurement and visualisation of the results, while the benchmarking tool enables the tracking of a system’s progress and regression testing.

13.1 The AnnotationDiff Tool

The AnnotationDiff tool enables two sets of annotations on a document to be compared, in order either to compare a system-annotated text with a reference (hand-annotated) text, or to compare the output of two different versions of the system (or two different systems). For each annotation type, figures are generated for precision, recall, F-measure and false positives. Each of these can be calculated according to 3 different criteria - strict, lenient and average. The reason for this is to deal with partially correct responses in different ways.

- The Strict measure considers all partially correct responses as incorrect (spurious).

- The Lenient measure considers all partially correct responses as correct.

- The Average measure allocates a half weight to partially correct responses (i.e. it takes the average of strict and lenient).
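The three criteria can be illustrated with a small sketch. It assumes that partially correct responses stay in the denominator and differ only in the credit they earn; the function name and the counts are hypothetical and are not taken from GATE.

```python
def precision(correct, partial, spurious, partial_weight):
    """Precision where each partially correct response earns partial_weight credit."""
    responses = correct + partial + spurious
    if responses == 0:
        return 0.0
    return (correct + partial_weight * partial) / responses


# Hypothetical counts for one annotation type.
correct, partial, spurious = 6, 2, 2

strict = precision(correct, partial, spurious, 0.0)   # partials treated as incorrect
lenient = precision(correct, partial, spurious, 1.0)  # partials treated as correct
average = precision(correct, partial, spurious, 0.5)  # partials given half weight

print(strict, lenient, average)
```

With these counts the average figure lies midway between the strict and lenient figures, as the definition above implies.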

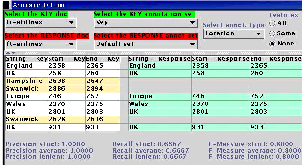

The tool can be accessed from either the GUI or the API. Annotation Diff compares sets of annotations of the same type. When performing the diff, both the annotation offsets and their features are taken into consideration. Figure 13.1 shows part of the AnnotationDiff viewer.

All annotations from the key set are compared with the ones from the response set, and those found to have the same start and end offsets are displayed on the same line in the table. Next, Annotation Diff evaluates if the features of each annotation from the response set subsume those features from the key set, as specified by the keyFeatureNamesSet parameter. To understand this in more detail, see section 3.23, which describes the Annotation Diff parameters.

13.2 The six annotation relations explained

Coextensive

Two annotations are coextensive if they cover the same span of text in a document, that is, if both their start and end offsets are equal.

Overlaps

Two annotations overlap if they share a common span of text.

Compatible

Two annotations are compatible if they are coextensive and if the features of one (usually the ones from the key) are included in the features of the other (usually the response).

Partially Compatible

Two annotations are partially compatible if they overlap and if the features of one (usually the ones from the key) are included in the features of the other (response).

Missing

This applies only to the key annotations. A key annotation is missing if either it is not coextensive or overlapping, or if one or more features are not included in the response annotation.

Spurious

This applies only to the response annotations. A response annotation is spurious if either it is not coextensive or overlapping, or if one or more features from the key are not included in the response annotation.
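The relations above can be sketched as simple predicates. This is a minimal illustration that assumes an annotation is just a pair of offsets plus a feature dictionary; the `Ann` class and the function names are hypothetical and do not mirror the GATE API.

```python
from dataclasses import dataclass, field


@dataclass
class Ann:
    """Hypothetical stand-in for an annotation: offsets plus features."""
    start: int
    end: int
    features: dict = field(default_factory=dict)


def coextensive(a, b):
    # Same start and end offsets.
    return a.start == b.start and a.end == b.end


def overlaps(a, b):
    # The two spans share at least one character.
    return a.start < b.end and b.start < a.end


def features_included(key, response):
    # Every key feature appears in the response with the same value.
    return all(
        name in response.features and response.features[name] == value
        for name, value in key.features.items()
    )


def compatible(key, response):
    return coextensive(key, response) and features_included(key, response)


def partially_compatible(key, response):
    return overlaps(key, response) and features_included(key, response)


key = Ann(0, 10, {"kind": "person"})
exact = Ann(0, 10, {"kind": "person", "gender": "female"})
shifted = Ann(5, 15, {"kind": "person"})

print(compatible(key, exact), partially_compatible(key, shifted))
```

Under these definitions a key annotation with no compatible or partially compatible response would be counted as missing, and a response annotation with no such key would be counted as spurious.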

13.3 Benchmarking tool

The benchmarking tool differs from the AnnotationDiff in that it enables evaluation to be carried out over a whole corpus rather than a single document. It also enables tracking of the system’s performance over time. The tool can be run in either GUI mode or standalone mode. For more information on how to run the tool, see Section 3.24.

The tool requires a clean version of a corpus (with no annotations) and an annotated reference corpus. First of all, the tool is run in generation mode to produce a set of texts annotated by the system. These texts are stored for future use. The tool can then be run in three ways:

- comparing the stored processed set with the human-annotated set;

- comparing the current processed set with the human-annotated set;

- (default mode) comparing the stored processed set with the current processed set and the human-annotated set.

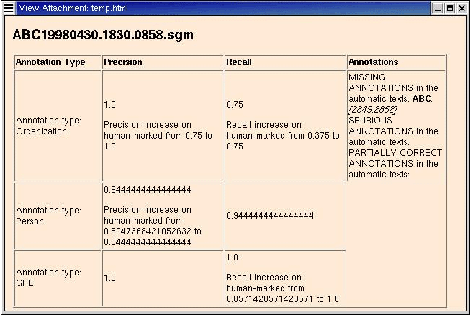

In each case, performance statistics will be output for each text in the set, and overall statistics for the entire set. In the default mode, information is also provided about whether the figures have increased or decreased in comparison with the annotated set. The processed set can be updated at any time by rerunning the tool in generation mode with the latest version of the system resources. Furthermore, the system can be run in verbose mode, where for each P and R figure below a certain threshold (set by the user), the non-coextensive annotations (and their corresponding text) will be displayed. The output of the tool is written to an HTML file in tabular form, for easy viewing of the results (see Figure 13.2).
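The kind of comparison made in the default mode can be sketched as follows. This is only an illustration of the idea, assuming that per-document precision and recall have already been computed against the human-annotated set for both the stored and the current processed sets; the function names and data layout are hypothetical and are not the tool's own.

```python
def trend(stored, current, tolerance=1e-9):
    """Say whether a figure went up, down, or stayed the same."""
    if current > stored + tolerance:
        return "increased"
    if current < stored - tolerance:
        return "decreased"
    return "unchanged"


def report(stored_scores, current_scores, threshold):
    """One row per document and metric: value, trend, and a below-threshold flag."""
    rows = []
    for doc, metrics in current_scores.items():
        for name, value in metrics.items():
            direction = trend(stored_scores[doc][name], value)
            rows.append((doc, name, value, direction, value < threshold))
    return rows


# Hypothetical scores against the human-annotated set.
stored = {"doc1": {"P": 0.80, "R": 0.70}}
current = {"doc1": {"P": 0.85, "R": 0.60}}

for row in report(stored, current, threshold=0.65):
    print(row)
```

The below-threshold flag marks the figures for which a verbose run would list the non-coextensive annotations.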

13.4 Metrics for Evaluation in Information Extraction

Much of the research in IE in the last decade has been connected with the MUC competitions, and so it is unsurprising that the MUC evaluation metrics of precision, recall and F-measure [Chinchor 92] also tend to be used, along with slight variations. These metrics have a very long-standing tradition in the field of IR [van Rijsbergen 79] (see also [Manning & Schütze 99, Frakes & Baeza-Yates 92]).

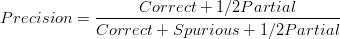

Precision measures the number of correctly identified items as a percentage of the number of items identified. In other words, it measures how many of the items that the system identified were actually correct, regardless of whether it also failed to retrieve correct items. The higher the precision, the better the system is at ensuring that what is identified is correct.

Error rate is the complement of precision (that is, 1 − precision), and measures the number of incorrectly identified items as a percentage of the items identified. It is sometimes used as an alternative to precision.

Recall measures the number of correctly identified items as a percentage of the total number of correct items. In other words, it measures how many of the items that should have been identified actually were identified, regardless of how many spurious identifications were made. The higher the recall rate, the better the system is at not missing correct items.

Clearly, there must be a tradeoff between precision and recall, for a system can easily be made to achieve 100% precision by identifying nothing (and so making no mistakes in what it identifies), or 100% recall by identifying everything (and so not missing anything). The F-measure [van Rijsbergen 79] is often used in conjunction with Precision and Recall, as a weighted harmonic mean of the two.

The false positive rate is a useful metric when dealing with a wide variety of text types, because it does not depend on relative document richness in the same way that precision does. By relative document richness we mean the relative number of entities of each type to be found in a set of documents.

When comparing different systems on the same document set, relative document richness is unimportant, because it is equal for all systems. When comparing a single system’s performance on different documents, however, it is much more crucial, because if a particular document type has a significantly different number of any type of entity, the results for that entity type can become skewed. Compare the impact on precision of one error where the total number of correct entities = 1, and one error where the total = 100. Assuming the document length is the same, then the false positive score for each text, on the other hand, should be identical.

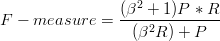

Common metrics for evaluation of IE systems are defined as follows:

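$$\text{Precision} = \frac{\text{Correct} + \tfrac{1}{2}\,\text{Partial}}{\text{Correct} + \text{Spurious} + \text{Partial}} \tag{13.1}$$

$$\text{Recall} = \frac{\text{Correct} + \tfrac{1}{2}\,\text{Partial}}{\text{Correct} + \text{Missing} + \text{Partial}} \tag{13.2}$$

$$\text{F-measure} = \frac{(\beta^2 + 1)\,P \cdot R}{\beta^2 P + R} \tag{13.3}$$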

where β reflects the weighting of P vs. R. If β is set to 1, the two are weighted equally.
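As a small illustration of the role of β, the sketch below uses the usual van Rijsbergen form of the F-measure, F = (β² + 1)PR / (β²P + R). That form is an assumption here, as is the function name.

```python
def f_measure(p, r, beta=1.0):
    """Weighted harmonic mean of precision p and recall r."""
    if p == 0 and r == 0:
        return 0.0
    b2 = beta * beta
    return (b2 + 1) * p * r / (b2 * p + r)


# Hypothetical precision and recall values.
p, r = 0.5, 1.0
balanced = f_measure(p, r)            # beta = 1: P and R weighted equally
recall_heavy = f_measure(p, r, 2.0)   # beta > 1 moves the score towards recall
precision_heavy = f_measure(p, r, 0.5)  # beta < 1 moves it towards precision

print(balanced, recall_heavy, precision_heavy)
```

In this form, values of β above 1 pull the score towards recall and values below 1 pull it towards precision.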

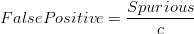

$$\text{FalsePositive} = \frac{\text{Spurious}}{c} \tag{13.4}$$

where c is some constant independent from document richness, e.g. the number of tokens or sentences in the document.
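The earlier comparison of one error in an entity-poor document with one error in an entity-rich document can be worked through numerically. The sketch assumes strict counts only (no partial matches) and takes c to be a token count of 1000 for both documents; all figures are hypothetical.

```python
def precision(correct, spurious):
    return correct / (correct + spurious)


def false_positive(spurious, c):
    """Spurious responses normalised by a richness-independent constant c."""
    return spurious / c


tokens = 1000  # assumed length of both documents, used as c

# One spurious response in each document.
sparse_precision = precision(correct=1, spurious=1)
rich_precision = precision(correct=100, spurious=1)

sparse_fp = false_positive(1, tokens)
rich_fp = false_positive(1, tokens)

print(sparse_precision, rich_precision, sparse_fp, rich_fp)
```

The single error halves precision in the document with one correct entity but barely moves it in the document with a hundred, while the false positive score is the same for both.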

Note that we consider annotations to be partially correct if the entity type is correct and the spans are overlapping but not identical. Partially correct responses are normally allocated a half weight.

13.5 Metrics for Evaluation of Inter-Annotator Agreement

When we evaluate the performance of a processing resource such as a tokeniser, a POS tagger, or a whole application, we usually have a human-authored “gold standard” against which to compare our software. However, it is not always easy or obvious what this gold standard should be, as different people may have different opinions about what is correct. Typically, we solve this problem by using more than one human annotator, and comparing their annotations. We do this by calculating inter-annotator agreement (IAA), also known as inter-rater reliability.

IAA can be used to assess how difficult a task is. This is based on the argument that if two humans cannot come to agreement on some annotation, it is unlikely that a computer could ever do the same annotation “correctly”. Thus, IAA can be used to find the ceiling for computer performance.

There are many possible metrics for reporting IAA, such as Cohen’s Kappa, prevalence, and bias [Eugenio & Glass 04]. Kappa is the best metric for IAA when all the annotators have identical exhaustive sets of questions on which they might agree or disagree. This could be a task like “read over this text and mark up all telephone numbers”. However, sometimes there is disagreement about the set of questions, e.g. when the annotators themselves determine which text spans they ought to annotate. That could be a task like “read over this text and mark up all references to politics”. When annotators determine their own sets of questions, it is appropriate to use precision, recall, and F-measure to report IAA. The following demonstrates best practices for calculating IAA in this way.

Let’s assume we have two annotators, Ann1 and Ann2. We want to measure how well Ann1 annotates compared with Ann2, and vice versa. Note that P(Ann1 vs Ann2) == R(Ann2 vs Ann1), and, similarly, P(Ann2 vs Ann1) == R(Ann1 vs Ann2).

This means that we can simply run an Annotation Diff with Ann1 as the key, and Ann2 as the response, and then do the reverse: Ann1 as the response, and Ann2 as the key.

We then report Precision and F-measure from both runs, as well as the average of precision from both runs, i.e., [Prec(Ann1 vs Ann2) + Prec(Ann2 vs Ann1)] / 2. This latter number is the average precision of your annotators.
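The symmetry between the two runs can be checked with a short sketch. It assumes strict matching only, with each annotator's output represented as a set of (start, end) spans; the spans and function names are hypothetical.

```python
def precision(key, response):
    """Fraction of response spans that also appear in the key."""
    if not response:
        return 0.0
    return len(key & response) / len(response)


def recall(key, response):
    """Fraction of key spans that also appear in the response."""
    if not key:
        return 0.0
    return len(key & response) / len(key)


# Hypothetical (start, end) spans marked by each annotator.
ann1 = {(0, 5), (10, 18), (30, 42)}
ann2 = {(0, 5), (10, 18), (50, 57), (60, 66)}

# First run: Ann1 is the key, Ann2 the response. Second run: the reverse.
p_first = precision(key=ann1, response=ann2)
p_second = precision(key=ann2, response=ann1)

# Precision in one run equals recall in the other.
assert p_first == recall(key=ann2, response=ann1)
assert p_second == recall(key=ann1, response=ann2)

average_precision = (p_first + p_second) / 2
print(p_first, p_second, average_precision)
```

Because precision in one direction is recall in the other, the two precision figures together carry the same information as precision and recall from a single run.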

13.6 A Plugin for Computing Inter-Annotator Agreement

The IAA plugin computes different IAA measures for different tasks. For named entity annotations, it computes the F-measures, namely Precision, Recall, and F1, from two or more annotation sets. For text classification tasks, it computes Cohen’s kappa and some other IAA measures which are more suitable than the F-measures for that task. In the following subsections we describe those measures and the output of the plugin. But first we explain how to load the plugin, what input it expects, and what its parameters are.

First you need to load the plugin named “iaaPlugin” into GATE using the Manage CREOLE Plugins tool, if your GATE installation has not already loaded it. Then you can create a PR for the plugin by choosing “IAA Computation” from the list of available PRs. After that you can put the PR into a Corpus Pipeline to use it.

The corpus pipeline needs a corpus of documents, each of which must have two or more annotation sets from which the IAA measures are computed. These sets may be produced by two or more annotators annotating the same type, or may correspond to one gold standard set and one set of system output. The annotation set produced by a given annotator should have the same name in all the documents, and a given annotation type should have the same name in all the annotation sets.

For example, suppose that we ask three annotators to annotate person names in two documents, Doc1 and Doc2. Then Doc1 should have three annotation sets, one per annotator, e.g. Ann1, Ann2 and Ann3, each of which contains an annotation type Per for the person name annotations. Doc2 should have three annotation sets with the same names and the same annotation type. One can then compute the IAA measures for the three annotation sets on the two documents by specifying the running parameters for the IAA plugin, as explained next.

The IAA plugin has two running parameters, annSetsForIaa and annTypesAndFeats, for specifying the annotation sets and the annotation types and features, respectively. For the above example, you can set the value of annSetsForIaa to “Ann1;Ann2;Ann3” and the value of annTypesAndFeats to “Per” to compute the IAA for the three annotation sets on the annotation type Per. Note that the names of annotation sets are separated by “;”. You can also specify more than one annotation type, again separated by “;”, and optionally specify one annotation feature for a type by appending “->” followed by the feature name to the annotation type name. For example, “Per->label;Org” specifies two annotation types, Per and Org, and also a feature named label for the type Per. If you specify a feature for an annotation type, then two annotations of that type from two different annotation sets in the same document will be regarded as different if they have different values of that feature, even if the two annotations occupy exactly the same position in the document. On the other hand, if you do not specify any feature for an annotation type, then two annotations of that type will be regarded as the same if they occupy the same position in the document.
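The syntax of the annTypesAndFeats value can be made concrete with a small parser. This is only a sketch of how such a string could be read, based on the description above; it is not the plugin's own code, and the function names and dictionary layout are hypothetical.

```python
def parse_types_and_feats(spec):
    """Split 'Per->label;Org' into [('Per', 'label'), ('Org', None)]."""
    result = []
    for item in spec.split(";"):
        item = item.strip()
        if not item:
            continue
        name, arrow, feature = item.partition("->")
        result.append((name.strip(), feature.strip() if arrow else None))
    return result


def same_annotation(a, b, feature=None):
    """Compare two annotations of one type by position and, optionally, one feature."""
    same_position = (a["start"], a["end"]) == (b["start"], b["end"])
    if feature is None:
        return same_position
    return same_position and a["features"].get(feature) == b["features"].get(feature)


types = parse_types_and_feats("Per->label;Org")

# Two hypothetical Per annotations at the same position with different labels.
first = {"start": 0, "end": 9, "features": {"label": "male"}}
second = {"start": 0, "end": 9, "features": {"label": "female"}}

print(types)
print(same_annotation(first, second), same_annotation(first, second, "label"))
```

Without a feature the two annotations count as the same because they occupy the same position; with the feature label specified they count as different.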

The plugin has another parameter, problemT, which specifies the problem type. There are two problem types. If the annotations are for named entities, then the parameter should have the value “ENTITYRecognition”, and the F-measures will be computed as the IAA measures. If the annotations are for classification (e.g. sentence classification), the parameter should have the value “CLASSIFICATION”, and Cohen’s Kappa and observed agreement will be computed.

Another parameter, verbosity, specifies the verbosity level of the plugin’s output. Level 2 displays the most detailed output, including the IAA measures on each document and the macro-averaged results over all documents. Level 1 displays only the IAA measures averaged over all documents. Level 0 produces no output. In the following we explain the outputs in detail.

13.6.1 IAA for Classification Task

IAA has been used mainly in classification tasks, where two or more annotators are given a set of instances and are asked to classify those instances into some pre-defined categories. IAA measures the agreement among the annotators on the class labels they assign to the instances. Text classification tasks include document classification, sentence classification (e.g. opinionated sentence recognition), and token classification (e.g. POS tagging).

The three commonly used IAA measures are observed agreement, specific agreement, and Kappa (κ) [Hripcsak & Heitjan 02]. See Appendix F for detailed explanations of those measures. The IAA plugin computes and displays those IAA measures for classification tasks. In the following we explain the output of the plugin for a classification task.

At verbosity level 2, the output of the plugin is the most detailed. It first prints out a list of the names of the annotation sets used for the IAA computation. In the rest of the results, the first annotation set is denoted as annotator 0, the second annotation set as annotator 1, and so on. Then the plugin outputs the IAA results for each document in the corpus.

For each document, it displays an annotation type, and optionally an annotation feature if one is specified, followed by the results for that type and feature. Note that the IAA computations are pairwise: we compute the IAA for each pair of annotators. The first results for one document and one annotation type are those macro-averaged over all pairs of annotators, given as three numbers for the three types of IAA measure, namely observed agreement, Cohen’s kappa and Scott’s pi, respectively. Then, for each pair of annotators, it outputs the three types of measure, a confusion matrix (or contingency table), and the specific agreements for each label.

The labels are obtained from the annotations of that particular type. If a feature is specified for an annotation type, then the labels are the values of that feature in the annotations. Please note that two special labels may be added to the label list: one is the empty label, obtained from those annotations which have the annotation feature but no value for it; the other is “Non-cat”, corresponding to those annotations which do not have the feature at all. If no feature is specified, then two labels are used: “Anns”, corresponding to the annotations of that type, and “Non-cat”, corresponding to those annotations which are made by one annotator but not by the other.
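For one pair of annotators, the three measures can be computed from the two label sequences as in the sketch below. It uses the standard textbook definitions of observed agreement, Cohen's kappa and Scott's pi, not the plugin's own code; the function name and labels are hypothetical.

```python
from collections import Counter


def agreement_measures(labels_a, labels_b):
    """Return (observed agreement, Cohen's kappa, Scott's pi) for two annotators."""
    n = len(labels_a)
    observed = sum(x == y for x, y in zip(labels_a, labels_b)) / n

    count_a, count_b = Counter(labels_a), Counter(labels_b)
    categories = set(count_a) | set(count_b)

    # Cohen: chance agreement from each annotator's own label distribution.
    chance_cohen = sum((count_a[c] / n) * (count_b[c] / n) for c in categories)
    # Scott: chance agreement from the pooled label distribution.
    chance_scott = sum(((count_a[c] + count_b[c]) / (2 * n)) ** 2 for c in categories)

    def corrected(chance):
        # If chance agreement is already 1, the correction is undefined; return 1.0.
        return 1.0 if chance == 1 else (observed - chance) / (1 - chance)

    return observed, corrected(chance_cohen), corrected(chance_scott)


# Hypothetical sentence labels from annotator 0 and annotator 1.
annotator0 = ["pos", "pos", "pos", "neg"]
annotator1 = ["pos", "neg", "neg", "neg"]

observed, kappa, pi = agreement_measures(annotator0, annotator1)
print(observed, kappa, pi)
```

The two chance-corrected measures differ here because the annotators use the labels with different frequencies; when their label distributions are identical, kappa and pi coincide.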

After displaying the results for each document, the plugin prints out the macro-averaged results over all documents. First, for each annotation type, it prints out the results for each pair of annotators and the macro-averaged results over all pairs of annotators. Finally, it prints out the macro-averaged results over all pairs of annotators, all types and all documents.

13.6.2 IAA For Named Entity Annotation

Commonly used IAA measures such as Kappa and other statistical measures have not been used in text mark-up tasks such as named entity recognition and information extraction, for the reason explained in Section 13.5 (see also [Hripcsak & Rothschild 05]). Instead, the F-measures, namely Precision, Recall, and F1, have been widely used for measuring IAA in information extraction evaluations such as MUC, ACE and TERN. One reason is that the computation of the F-measures does not need the number of non-entity examples. Another is that F-measures are commonly used for evaluating information extraction systems, so IAA F-measures can be directly compared with system results.

To compute F-measures between two annotation sets, one can use one annotation set as the gold standard and the other as the system’s output, and compute Precision, Recall and F1. One can also switch the roles of the two annotation sets. The Precision and Recall in the former case become the Recall and Precision in the latter, respectively, but F1 remains the same in both cases. For more than two annotators, we first compute the F-measures between every pair of annotators and use the mean of the pairwise F-measures as an overall measure. The computation of the F-measures (Precision, Recall and F1) is shown in Section 13.4. As noted in [Hripcsak & Rothschild 05], the F1 computed for two annotators for one specific category is equivalent to the positive specific agreement for that category.
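The pairwise procedure can be sketched as follows, assuming strict matching on (start, end) spans and equal weighting of Precision and Recall; the annotation sets and names are hypothetical.

```python
from itertools import combinations


def prf(key, response):
    """Strict Precision, Recall and F1 of a response set against a key set."""
    matched = len(key & response)
    p = matched / len(response) if response else 0.0
    r = matched / len(key) if key else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


# Hypothetical Per spans from three annotators on one document.
sets = {
    "Ann1": {(0, 5), (10, 18), (30, 42)},
    "Ann2": {(0, 5), (10, 18), (50, 57), (60, 66)},
    "Ann3": {(0, 5), (30, 42)},
}

# F1 for every unordered pair, then the mean as the overall measure.
pair_f1 = {(a, b): prf(sets[a], sets[b])[2] for a, b in combinations(sets, 2)}
mean_f1 = sum(pair_f1.values()) / len(pair_f1)

# Swapping key and response swaps Precision and Recall but leaves F1 unchanged.
p, r, f1 = prf(sets["Ann1"], sets["Ann2"])
p_rev, r_rev, f1_rev = prf(sets["Ann2"], sets["Ann1"])

# Positive specific agreement: twice the matches over the two set sizes.
matches = len(sets["Ann1"] & sets["Ann2"])
specific_agreement = 2 * matches / (len(sets["Ann1"]) + len(sets["Ann2"]))

print(pair_f1, mean_f1, specific_agreement)
```

Since F1 is symmetric, each unordered pair needs to be computed only once, and the last figure illustrates the equivalence with positive specific agreement noted above.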

The output of the IAA plugin for named entity annotation is similar to that for classification, except that it consists of the F-measures (Precision, Recall and F1) instead of the agreements and Kappas. It first prints out the results for each document. For one document, it prints out the results for each annotation type, macro-averaged over all pairs of annotators, followed by the results for each pair of annotators. In the last part, the macro-averaged results over all documents are displayed. Note that the results are reported for both the strict measure and the lenient measure, as defined in Section 13.1.

Please note that, for computing the F-measures for the named entity annotations, the IAA plugin carries out the same computation as the Benchmarking tool. The IAA plugin is simpler than the Benchmarking tool in the sense that the former needs only one set of documents with two or more annotation sets, whereas the latter needs three sets of the same documents, one without any annotation, another with one annotation set, and the third one with another annotation set. Additionally, the IAA plugin can deal with more than two annotation sets but the Benchmarking tool can only deal with two annotation sets.