Developing Language Processing Components with GATE

Version 6 (a User Guide)

For GATE version 6.0-beta1

(built August 21, 2010)

Hamish Cunningham

Diana Maynard

Kalina Bontcheva

Valentin Tablan

Niraj Aswani

Ian Roberts

Genevieve Gorrell

Adam Funk

Angus Roberts

Danica Damljanovic

Thomas Heitz

Mark Greenwood

Horacio Saggion

Johann Petrak

Yaoyong Li

Wim Peters

et al

© The University of Sheffield 2001-2010

http://gate.ac.uk/

Work on GATE has been partly supported by EPSRC grants GR/K25267 (Large-Scale Information Extraction), GR/M31699 (GATE 2), RA007940 (EMILLE), GR/N15764/01 (AKT) and GR/R85150/01 (MIAKT), AHRB grant APN16396 (ETCSL/GATE), Matrixware, the Information Retrieval Facility and several EU-funded projects (SEKT, TAO, NeOn, MediaCampaign, MUSING, KnowledgeWeb, PrestoSpace, h-TechSight, enIRaF).

Developing Language Processing Components with GATE Version 6

© 2010 The University of Sheffield

Department of Computer Science

Regent Court

211 Portobello

Sheffield

S1 4DP

http://gate.ac.uk

This work is licensed under the Creative Commons Attribution-No Derivative Licence. You are free to copy, distribute, display, and perform the work under the following conditions:

- Attribution: You must give the original author credit.

- No Derivative Works: You may not alter, transform, or build upon this work.

With the understanding that:

- Waiver: Any of the above conditions can be waived if you get permission from the copyright holder.

- Other Rights: In no way are any of the following rights affected by the licence: your fair dealing or fair use rights; the author’s moral rights; rights other persons may have either in the work itself or in how the work is used, such as publicity or privacy rights.

- Notice: For any reuse or distribution, you must make clear to others the licence terms of this work.

For more information about the Creative Commons Attribution-No Derivative Licence, please visit this web address: http://creativecommons.org/licenses/by-nd/2.0/uk/

ISBN: TBA

Contents

1 Introduction

1.1 How to Use this Text

1.2 Context

1.3 Overview

1.3.1 Developing and Deploying Language Processing Facilities

1.3.2 Built-In Components

1.3.3 Additional Facilities

1.3.4 An Example

1.4 Some Evaluations

1.5 Changes in this Version

1.5.1 Version 6.0-beta1 (August 2010)

1.6 Further Reading

2 Installing and Running GATE

2.1 Downloading GATE

2.2 Installing and Running GATE

2.2.1 The Easy Way

2.2.2 The Hard Way (1)

2.2.3 The Hard Way (2): Subversion

2.3 Using System Properties with GATE

2.4 Configuring GATE

2.5 Building GATE

2.5.1 Using GATE with Maven

2.6 Uninstalling GATE

2.7 Troubleshooting

2.7.1 I don’t see the Java console messages under Windows

2.7.2 When I execute GATE, nothing happens

2.7.3 On Ubuntu, GATE is very slow or doesn’t start

2.7.4 How to use GATE on a 64 bit system?

2.7.5 I got the error: Could not reserve enough space for object heap

2.7.6 From Eclipse, I got the error: java.lang.OutOfMemoryError: Java heap space

2.7.7 On MacOS, I got the error: java.lang.OutOfMemoryError: Java heap space

2.7.8 I got the error: log4j:WARN No appenders could be found for logger...

2.7.9 Text is incorrectly refreshed after scrolling and become unreadable

2.7.10 An error occurred when running the TreeTagger plugin

2.7.11 I got the error: HighlightData cannot be cast to ...HighlightInfo

3 Using GATE Developer

3.1 The GATE Developer Main Window

3.2 Loading and Viewing Documents

3.3 Creating and Viewing Corpora

3.4 Working with Annotations

3.4.1 The Annotation Sets View

3.4.2 The Annotations List View

3.4.3 The Annotations Stack View

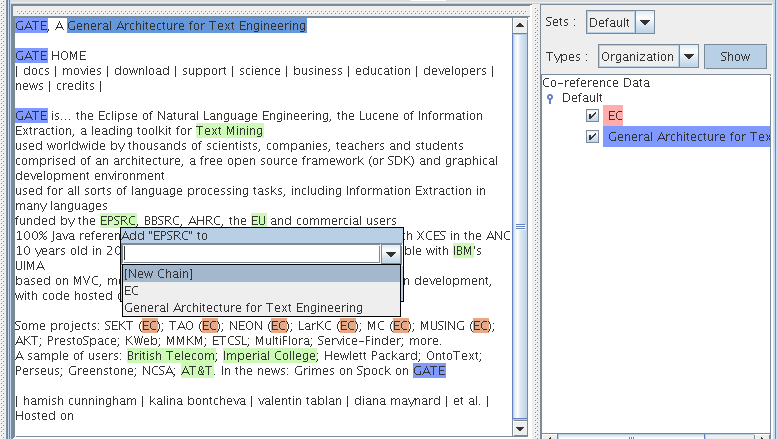

3.4.4 The Co-reference Editor

3.4.5 Creating and Editing Annotations

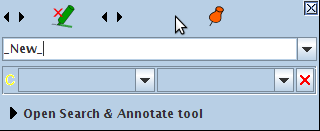

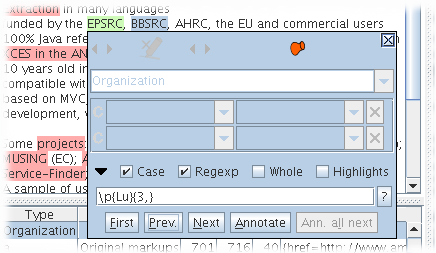

3.4.6 Schema-Driven Editing

3.4.7 Printing Text with Annotations

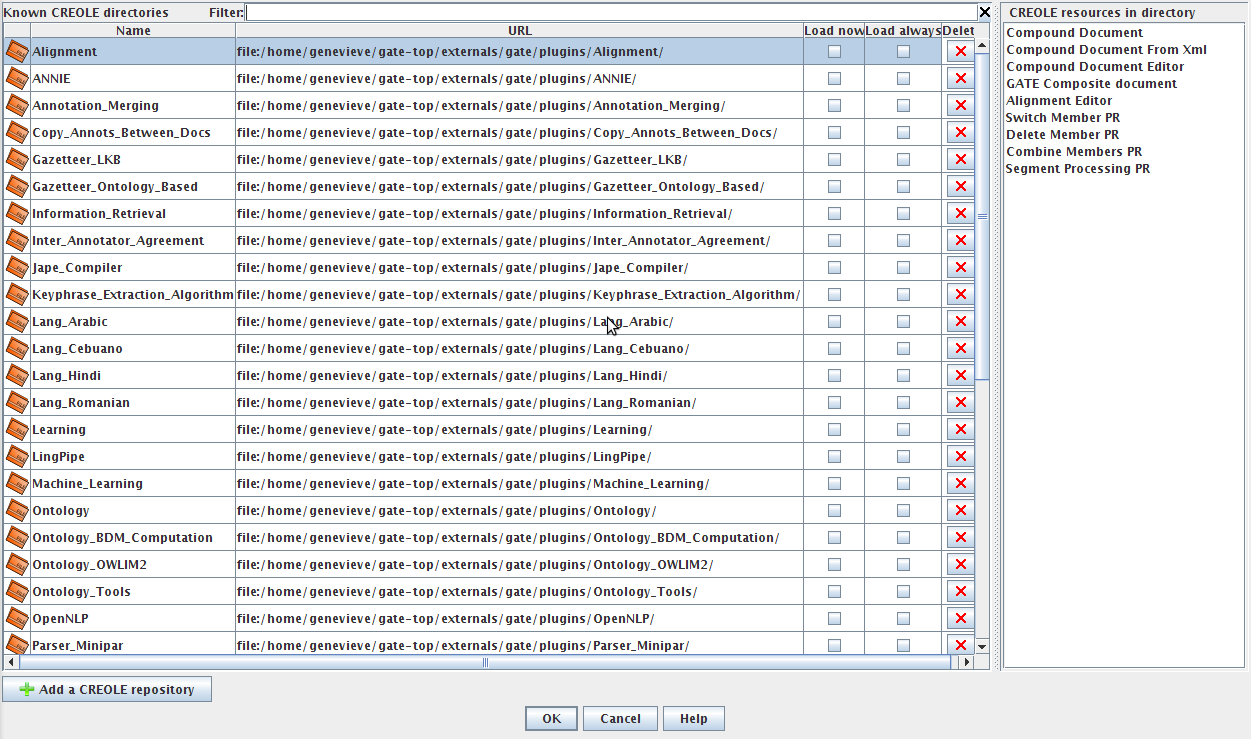

3.5 Using CREOLE Plugins

3.6 Loading and Using Processing Resources

3.7 Creating and Running an Application

3.7.1 Running an Application on a Datastore

3.7.2 Running PRs Conditionally on Document Features

3.7.3 Doing Information Extraction with ANNIE

3.7.4 Modifying ANNIE

3.8 Saving Applications and Language Resources

3.8.1 Saving Documents to File

3.8.2 Saving and Restoring LRs in Datastores

3.8.3 Saving Application States to a File

3.8.4 Saving an Application with its Resources (e.g. GATE Teamware)

3.9 Keyboard Shortcuts

3.10 Miscellaneous

3.10.1 Stopping GATE from Restoring Developer Sessions/Options

3.10.2 Working with Unicode

4 CREOLE: the GATE Component Model

4.1 The Web and CREOLE

4.2 The GATE Framework

4.3 The Lifecycle of a CREOLE Resource

4.4 Processing Resources and Applications

4.5 Language Resources and Datastores

4.6 Built-in CREOLE Resources

4.7 CREOLE Resource Configuration

4.7.1 Configuration with XML

4.7.2 Configuring Resources using Annotations

4.7.3 Mixing the Configuration Styles

4.8 Tools: How to Add Utilities to GATE Developer

4.8.1 Putting your tools in a sub-menu

5 Language Resources: Corpora, Documents and Annotations

5.1 Features: Simple Attribute/Value Data

5.2 Corpora: Sets of Documents plus Features

5.3 Documents: Content plus Annotations plus Features

5.4 Annotations: Directed Acyclic Graphs

5.4.1 Annotation Schemas

5.4.2 Examples of Annotated Documents

5.4.3 Creating, Viewing and Editing Diverse Annotation Types

5.5 Document Formats

5.5.1 Detecting the Right Reader

5.5.2 XML

5.5.3 HTML

5.5.4 SGML

5.5.5 Plain text

5.5.6 RTF

5.5.7 Email

5.6 XML Input/Output

6 ANNIE: a Nearly-New Information Extraction System

6.1 Document Reset

6.2 Tokeniser

6.2.1 Tokeniser Rules

6.2.2 Token Types

6.2.3 English Tokeniser

6.3 Gazetteer

6.4 Sentence Splitter

6.5 RegEx Sentence Splitter

6.6 Part of Speech Tagger

6.7 Semantic Tagger

6.8 Orthographic Coreference (OrthoMatcher)

6.8.1 GATE Interface

6.8.2 Resources

6.8.3 Processing

6.9 Pronominal Coreference

6.9.1 Quoted Speech Submodule

6.9.2 Pleonastic It Submodule

6.9.3 Pronominal Resolution Submodule

6.9.4 Detailed Description of the Algorithm

6.10 A Walk-Through Example

6.10.1 Step 1 - Tokenisation

6.10.2 Step 2 - List Lookup

6.10.3 Step 3 - Grammar Rules

II GATE for Advanced Users

7 GATE Embedded

7.1 Quick Start with GATE Embedded

7.2 Resource Management in GATE Embedded

7.3 Using CREOLE Plugins

7.4 Language Resources

7.4.1 GATE Documents

7.4.2 Feature Maps

7.4.3 Annotation Sets

7.4.4 Annotations

7.4.5 GATE Corpora

7.5 Processing Resources

7.6 Controllers

7.7 Duplicating a Resource

7.8 Persistent Applications

7.9 Ontologies

7.10 Creating a New Annotation Schema

7.11 Creating a New CREOLE Resource

7.12 Adding Support for a New Document Format

7.13 Using GATE Embedded in a Multithreaded Environment

7.14 Using GATE Embedded within a Spring Application

7.14.1 Duplication in Spring

7.14.2 Spring pooling

7.14.3 Further reading

7.15 Using GATE Embedded within a Tomcat Web Application

7.15.1 Recommended Directory Structure

7.15.2 Configuration Files

7.15.3 Initialization Code

7.16 Groovy for GATE

7.16.1 Groovy Scripting Console for GATE

7.16.2 Groovy scripting PR

7.16.3 The Scriptable Controller

7.16.4 Utility methods

7.17 Saving Config Data to gate.xml

7.18 Annotation merging through the API

8 JAPE: Regular Expressions over Annotations

8.1 The Left-Hand Side

8.1.1 Matching a Simple Text String

8.1.2 Matching Entire Annotation Types

8.1.3 Using Attributes and Values

8.1.4 Using Meta-Properties

8.1.5 Using Templates

8.1.6 Multiple Pattern/Action Pairs

8.1.7 LHS Macros

8.1.8 Using Context

8.1.9 Multi-Constraint Statements

8.1.10 Negation

8.1.11 Escaping Special Characters

8.2 LHS Operators in Detail

8.2.1 Compositional Operators

8.2.2 Matching Operators

8.3 The Right-Hand Side

8.3.1 A Simple Example

8.3.2 Copying Feature Values from the LHS to the RHS

8.3.3 RHS Macros

8.4 Use of Priority

8.5 Using Phases Sequentially

8.6 Using Java Code on the RHS

8.6.1 A More Complex Example

8.6.2 Adding a Feature to the Document

8.6.3 Finding the Tokens of a Matched Annotation

8.6.4 Using Named Blocks

8.6.5 Java RHS Overview

8.7 Optimising for Speed

8.8 Ontology Aware Grammar Transduction

8.9 Serializing JAPE Transducer

8.9.1 How to Serialize?

8.9.2 How to Use the Serialized Grammar File?

8.10 The JAPE Debugger

8.11 Notes for Montreal Transducer Users

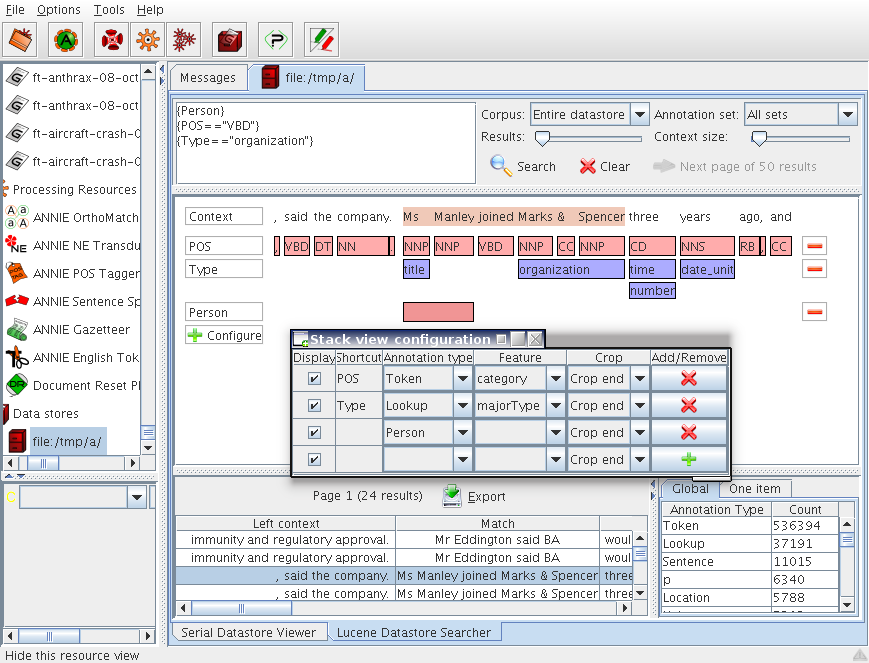

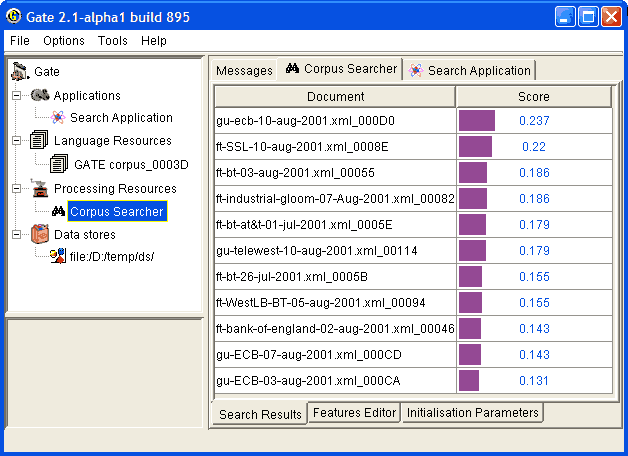

9 ANNIC: ANNotations-In-Context

9.1 Instantiating SSD

9.2 Search GUI

9.2.1 Overview

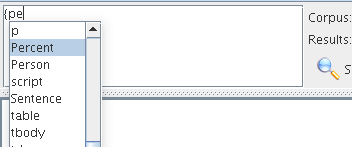

9.2.2 Syntax of Queries

9.2.3 Top Section

9.2.4 Central Section

9.2.5 Bottom Section

9.3 Using SSD from GATE Embedded

9.3.1 How to instantiate a searchabledatastore

9.3.2 How to search in this datastore

10 Performance Evaluation of Language Analysers

10.1 Metrics for Evaluation in Information Extraction

10.1.1 Annotation Relations

10.1.2 Cohen’s Kappa

10.1.3 Precision, Recall, F-Measure

10.1.4 Macro and Micro Averaging

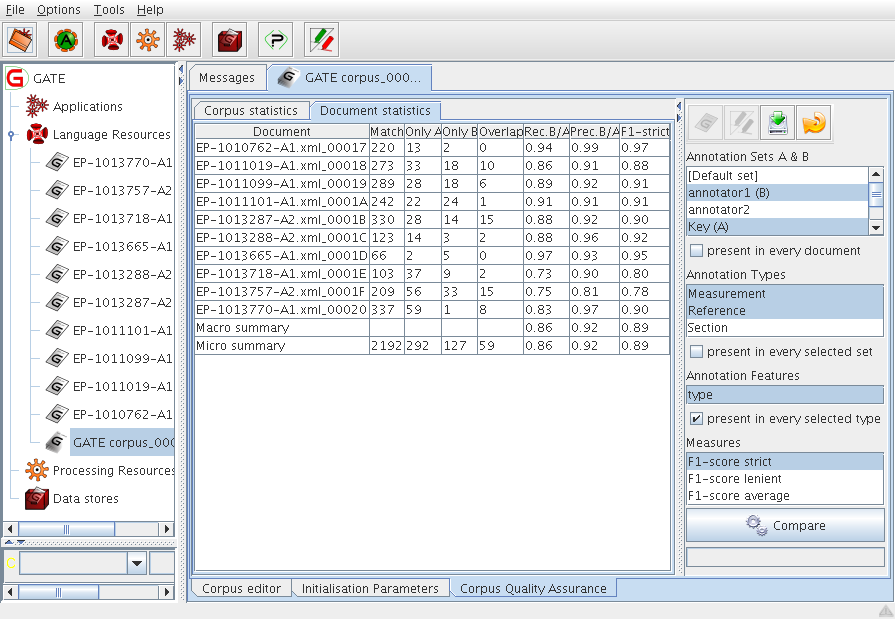

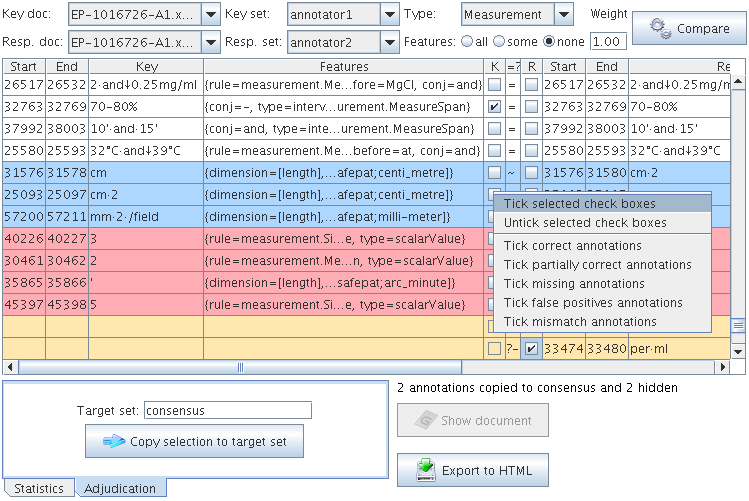

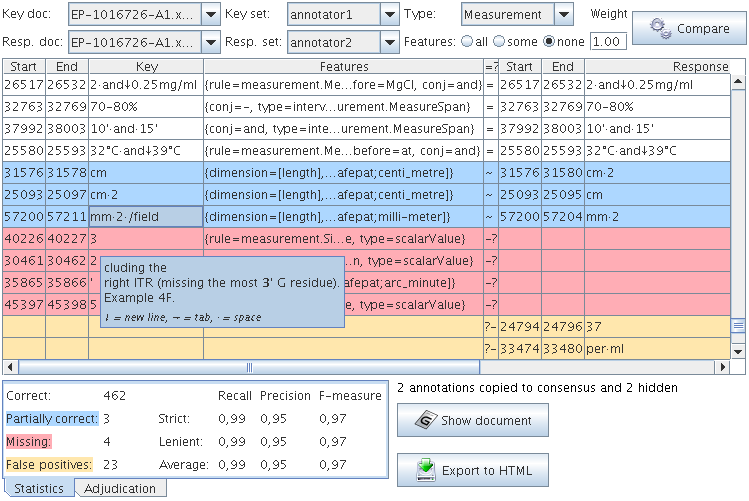

10.2 The Annotation Diff Tool

10.2.1 Performing Evaluation with the Annotation Diff Tool

10.3 Corpus Quality Assurance

10.3.1 Description of the interface

10.3.2 Step by step usage

10.3.3 Details of the Corpus statistics table

10.3.4 Details of the Document statistics table

10.3.5 GATE Embedded API for the measures

10.3.6 Quality Assurance PR

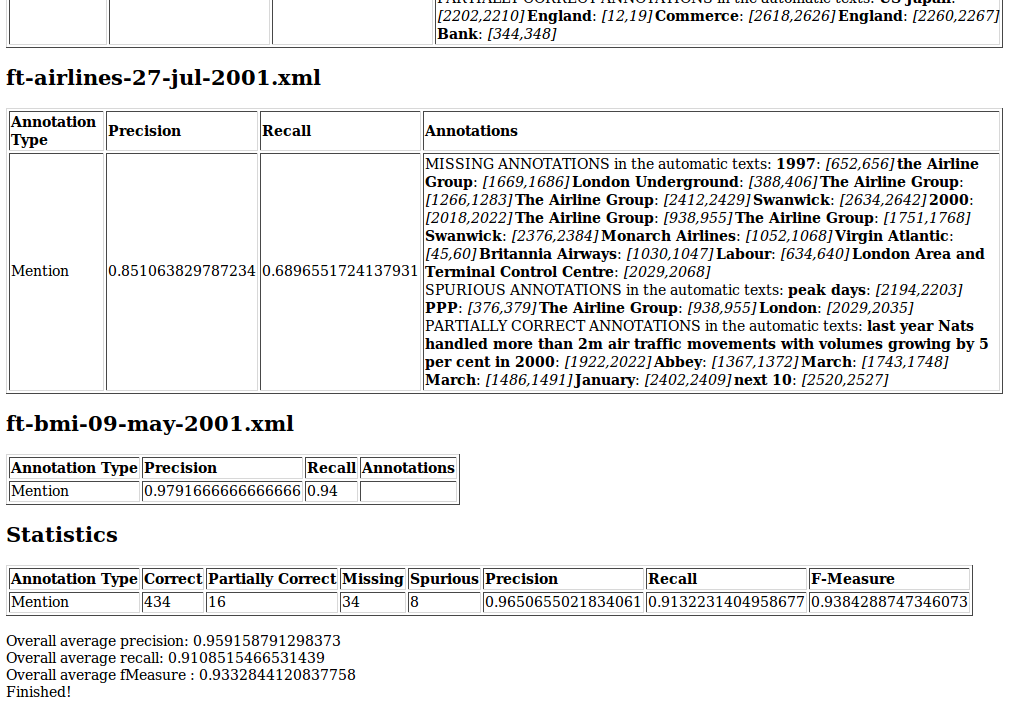

10.4 Corpus Benchmark Tool

10.4.1 Preparing the Corpora for Use

10.4.2 Defining Properties

10.4.3 Running the Tool

10.4.4 The Results

10.5 A Plugin Computing Inter-Annotator Agreement (IAA)

10.5.1 IAA for Classification

10.5.2 IAA For Named Entity Annotation

10.5.3 The BDM-Based IAA Scores

10.6 A Plugin Computing the BDM Scores for an Ontology

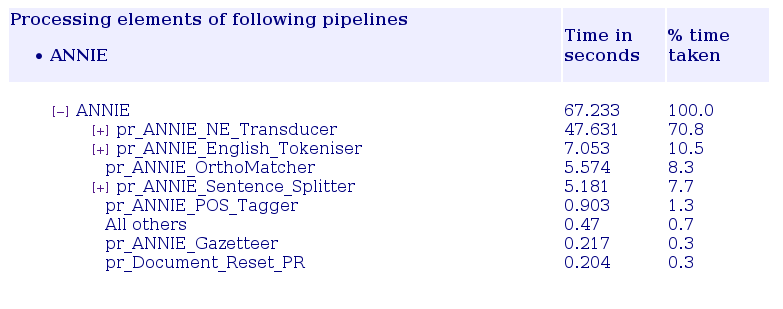

11 Profiling Processing Resources

11.1 Overview

11.1.1 Features

11.1.2 Limitations

11.2 Graphical User Interface

11.3 Command Line Interface

11.4 Application Programming Interface

11.4.1 Log4j.properties

11.4.2 Benchmark log format

11.4.3 Enabling profiling

11.4.4 Reporting tool

12 Developing GATE

12.1 Reporting Bugs and Requesting Features

12.2 Contributing Patches

12.3 Creating New Plugins

12.3.1 Where to Keep Plugins in the GATE Hierarchy

12.3.2 What to Call your Plugin

12.3.3 Writing a New PR

12.3.4 Writing a New VR

12.3.5 Adding Plugins to the Nightly Build

12.4 Updating this User Guide

12.4.1 Building the User Guide

12.4.2 Making Changes to the User Guide

III CREOLE Plugins

13 Gazetteers

13.1 Introduction to Gazetteers

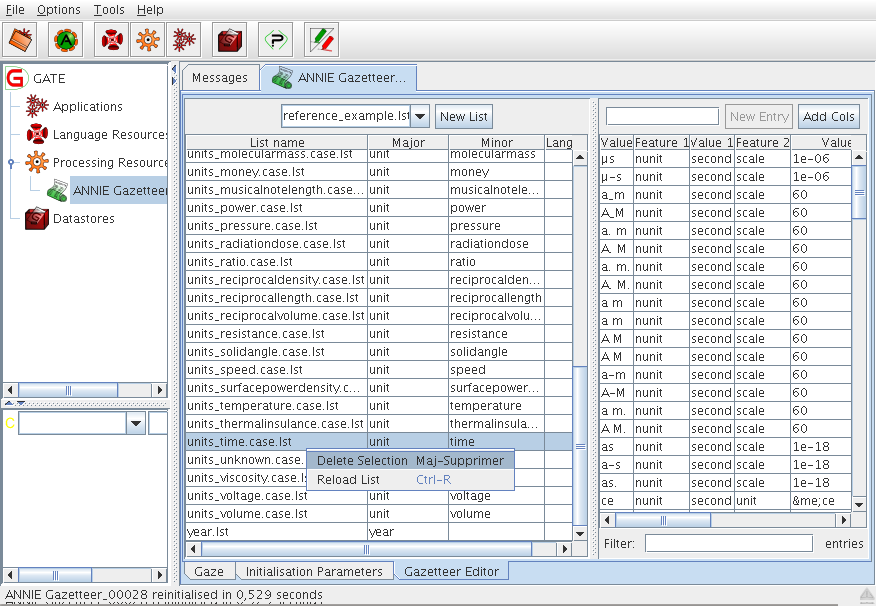

13.2 ANNIE Gazetteer

13.2.1 Creating and Modifying Gazetteer Lists

13.2.2 ANNIE Gazetteer Editor

13.3 Gazetteer Visual Resource - GAZE

13.3.1 Display Modes

13.3.2 Linear Definition Pane

13.3.3 Linear Definition Toolbar

13.3.4 Operations on Linear Definition Nodes

13.3.5 Gazetteer List Pane

13.3.6 Mapping Definition Pane

13.4 OntoGazetteer

13.5 Gaze Ontology Gazetteer Editor

13.5.1 The Gaze Gazetteer List and Mapping Editor

13.5.2 The Gaze Ontology Editor

13.6 Hash Gazetteer

13.6.1 Prerequisites

13.6.2 Parameters

13.7 Flexible Gazetteer

13.8 Gazetteer List Collector

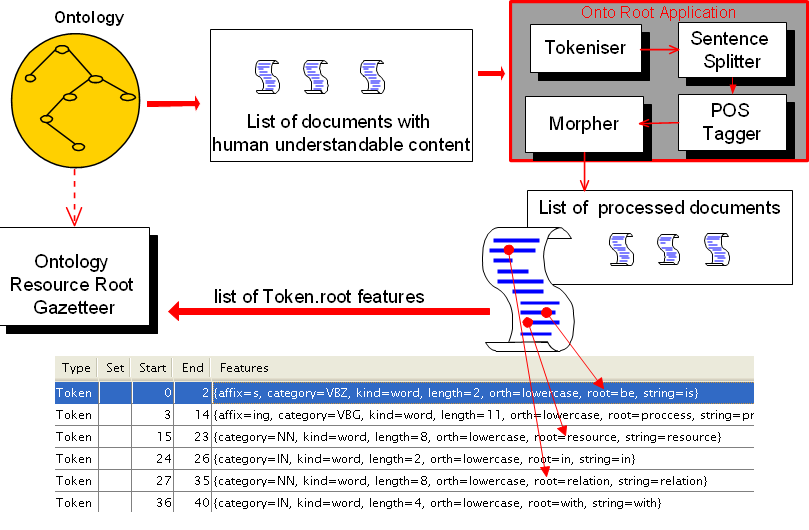

13.9 OntoRoot Gazetteer

13.9.1 How Does it Work?

13.9.2 Initialisation of OntoRoot Gazetteer

13.9.3 Simple steps to run OntoRoot Gazetteer

13.10 Large KB Gazetteer

13.10.1 Quick usage overview

13.10.2 Dictionary setup

13.10.3 Additional dictionary configuration

13.10.4 Processing Resource Configuration

13.10.5 Runtime configuration

13.10.6 Semantic Enrichment PR

13.11 The Shared Gazetteer for multithreaded processing

14 Working with Ontologies

14.1 Data Model for Ontologies

14.1.1 Hierarchies of Classes and Restrictions

14.1.2 Instances

14.1.3 Hierarchies of Properties

14.1.4 URIs

14.2 Ontology Event Model

14.2.1 What Happens when a Resource is Deleted?

14.3 The Ontology Plugin: Current Implementation

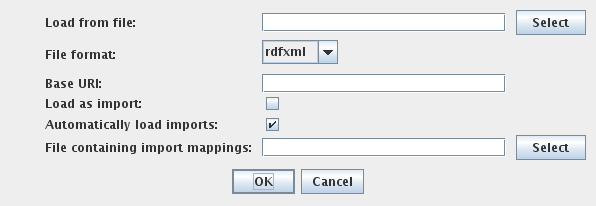

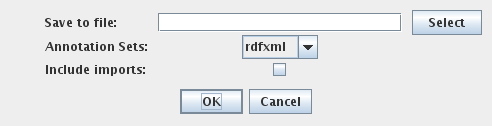

14.3.1 The OWLIMOntology Language Resource

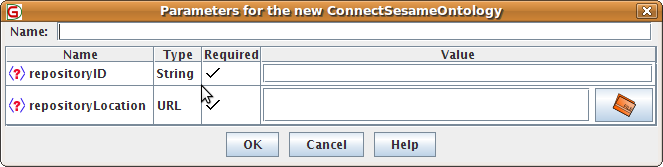

14.3.2 The ConnectSesameOntology Language Resource

14.3.3 The CreateSesameOntology Language Resource

14.3.4 The OWLIM2 Backwards-Compatible Language Resource

14.3.5 Using Ontology Import Mappings

14.3.6 Using BigOWLIM

14.4 The Ontology_OWLIM2 plugin: backwards-compatible implementation

14.4.1 The OWLIMOntologyLR Language Resource

14.5 GATE Ontology Editor

14.6 Ontology Annotation Tool

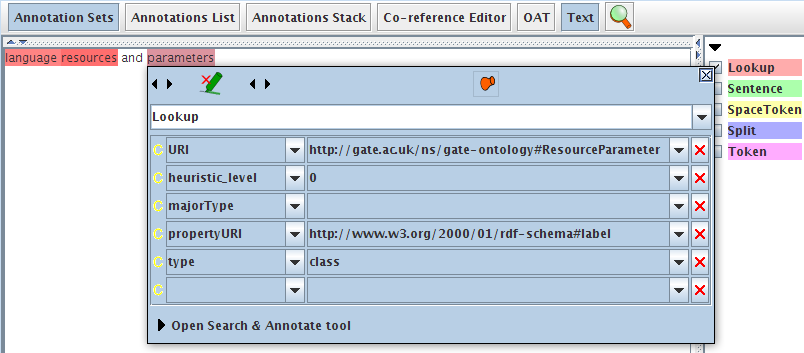

14.6.1 Viewing Annotated Text

14.6.2 Editing Existing Annotations

14.6.3 Adding New Annotations

14.6.4 Options

14.7 Relation Annotation Tool

14.7.1 Description of the two views

14.7.2 New annotation and instance from text selection

14.7.3 New annotation and add label to existing instance from text selection

14.7.4 Create and set properties for annotation relation

14.7.5 Delete instance, label or property

14.7.6 Differences with OAT and Ontology Editor

14.8 Using the ontology API

14.9 Using the ontology API (old version)

14.10 Ontology-Aware JAPE Transducer

14.11 Annotating Text with Ontological Information

14.12 Populating Ontologies

14.13 Ontology API and Implementation Changes

14.13.1 Differences between the implementation plugins

14.13.2 Changes in the Ontology API

15 Machine Learning

15.1 ML Generalities

15.1.1 Some Definitions

15.1.2 GATE-Specific Interpretation of the Above Definitions

15.2 Batch Learning PR

15.2.1 Batch Learning PR Configuration File Settings

15.2.2 Case Studies for the Three Learning Types

15.2.3 How to Use the Batch Learning PR in GATE Developer

15.2.4 Output of the Batch Learning PR

15.2.5 Using the Batch Learning PR from the API

15.3 Machine Learning PR

15.3.1 The DATASET Element

15.3.2 The ENGINE Element

15.3.3 The WEKA Wrapper

15.3.4 The MAXENT Wrapper

15.3.5 The SVM Light Wrapper

15.3.6 Example Configuration File

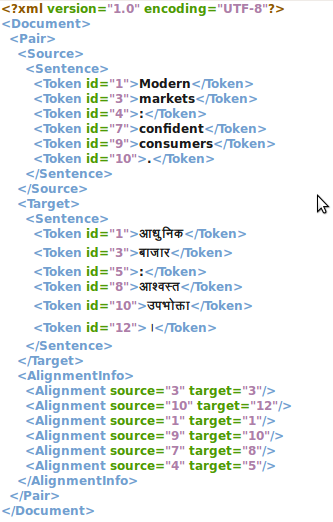

16 Tools for Alignment Tasks

16.1 Introduction

16.2 The Tools

16.2.1 Compound Document

16.2.2 CompoundDocumentFromXml

16.2.3 Compound Document Editor

16.2.4 Composite Document

16.2.5 DeleteMembersPR

16.2.6 SwitchMembersPR

16.2.7 Saving as XML

16.2.8 Alignment Editor

16.2.9 Saving Files and Alignments

16.2.10 Section-by-Section Processing

17 Parsers and Taggers

17.1 Verb Group Chunker

17.2 Noun Phrase Chunker

17.2.1 Differences from the Original

17.2.2 Using the Chunker

17.3 Tree Tagger

17.3.1 POS Tags

17.4 TaggerFramework

17.5 Chemistry Tagger

17.5.1 Using the Tagger

17.6 ABNER

17.7 Stemmer

17.7.1 Algorithms

17.8 GATE Morphological Analyzer

17.8.1 Rule File

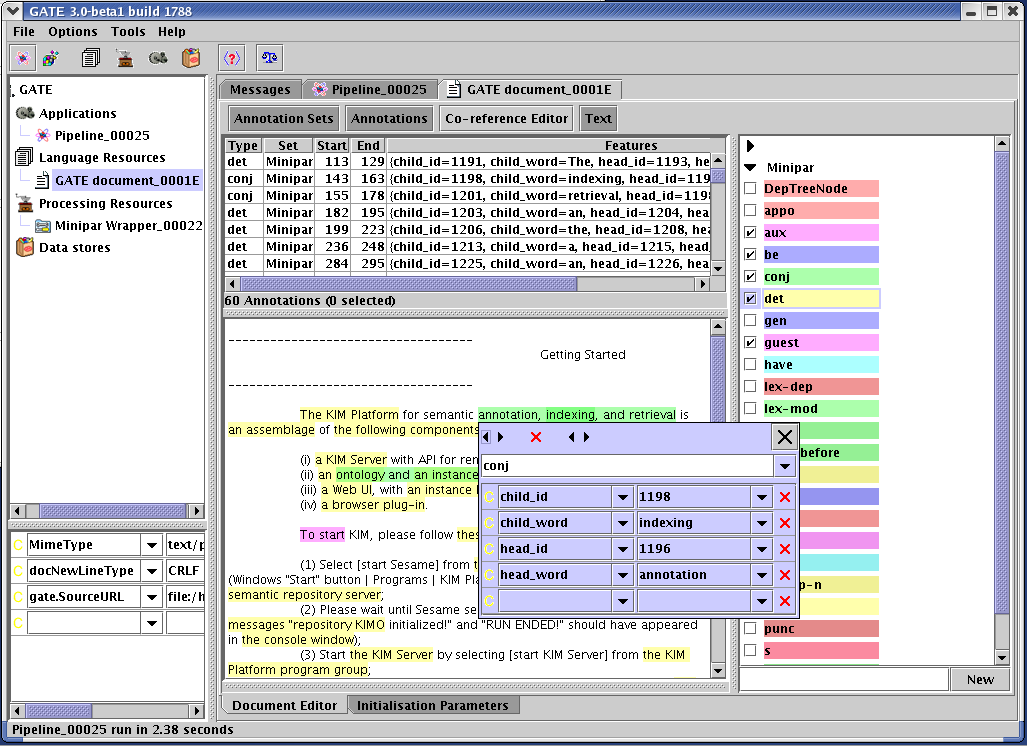

17.9 MiniPar Parser

17.9.1 Platform Supported

17.9.2 Resources

17.9.3 Parameters

17.9.4 Prerequisites

17.9.5 Grammatical Relationships

17.10 RASP Parser

17.11 SUPPLE Parser

17.11.1 Requirements

17.11.2 Building SUPPLE

17.11.3 Running the Parser in GATE

17.11.4 Viewing the Parse Tree

17.11.5 System Properties

17.11.6 Configuration Files

17.11.7 Parser and Grammar

17.11.8 Mapping Named Entities

17.11.9 Upgrading from BuChart to SUPPLE

17.12 Stanford Parser

17.12.1 Input Requirements

17.12.2 Initialization Parameters

17.12.3 Runtime Parameters

17.13 OpenCalais, LingPipe and OpenNLP

18 Combining GATE and UIMA

18.1 Embedding a UIMA AE in GATE

18.1.1 Mapping File Format

18.1.2 The UIMA Component Descriptor

18.1.3 Using the AnalysisEnginePR

18.2 Embedding a GATE CorpusController in UIMA

18.2.1 Mapping File Format

18.2.2 The GATE Application Definition

18.2.3 Configuring the GATEApplicationAnnotator

19 More (CREOLE) Plugins

19.1 Language Plugins

19.1.1 French Plugin

19.1.2 German Plugin

19.1.3 Romanian Plugin

19.1.4 Arabic Plugin

19.1.5 Chinese Plugin

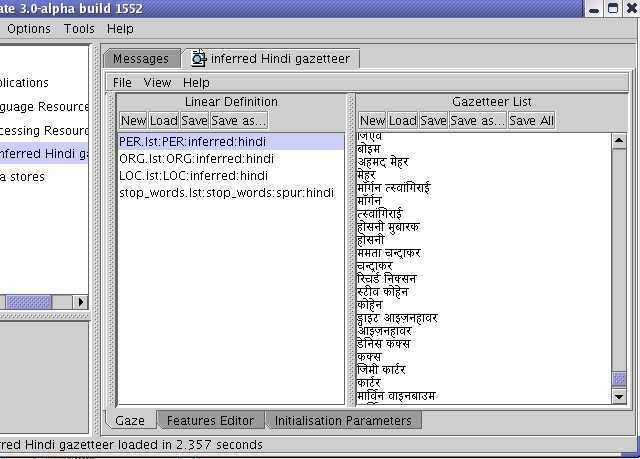

19.1.6 Hindi Plugin

19.2 Flexible Exporter

19.3 Annotation Set Transfer

19.4 Information Retrieval in GATE

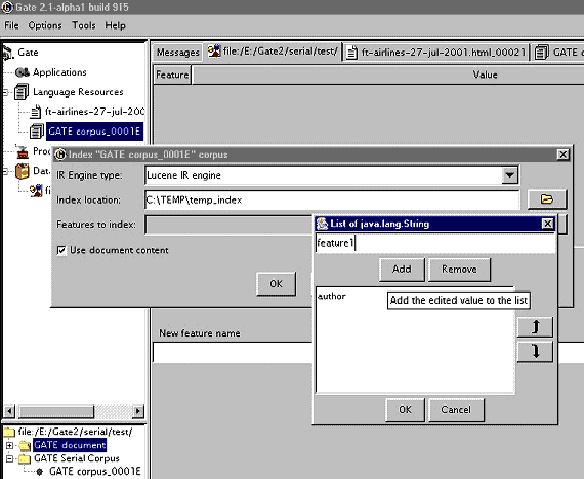

19.4.1 Using the IR Functionality in GATE

19.4.2 Using the IR API

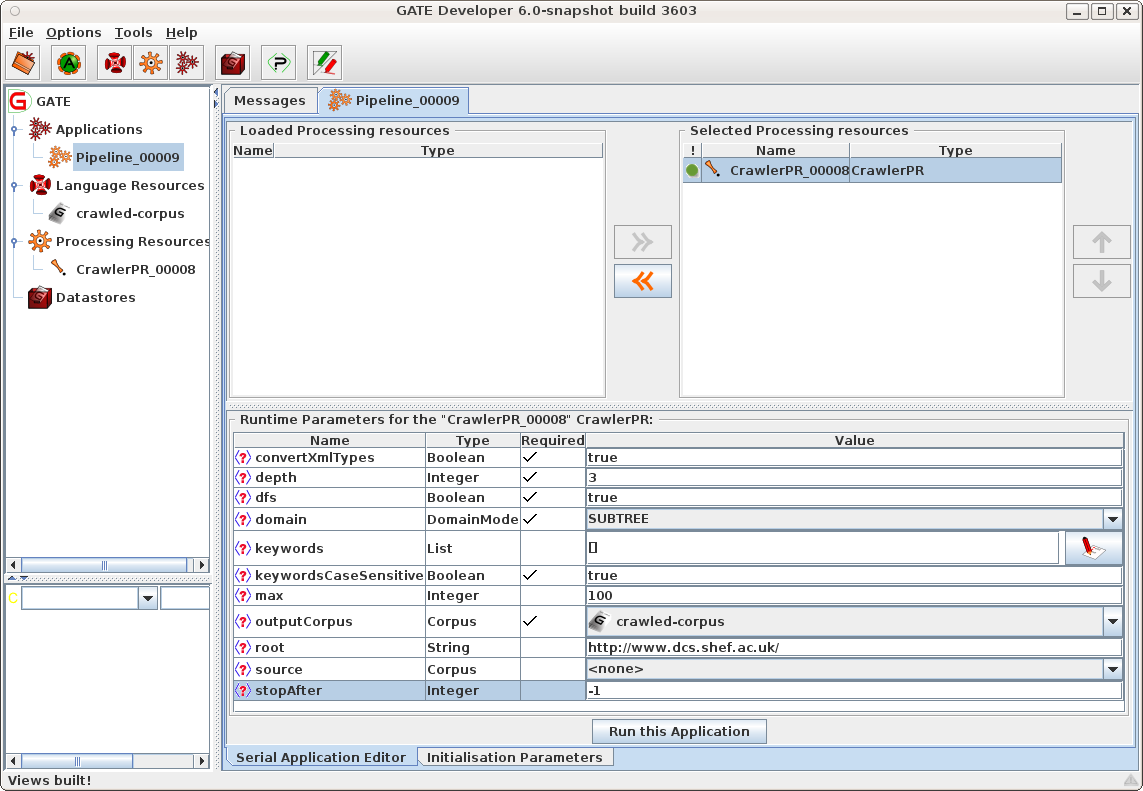

19.5 Websphinx Web Crawler

19.5.1 Using the Crawler PR

19.6 Google Plugin

19.7 Yahoo Plugin

19.7.1 Using the YahooPR

19.8 Google Translator PR

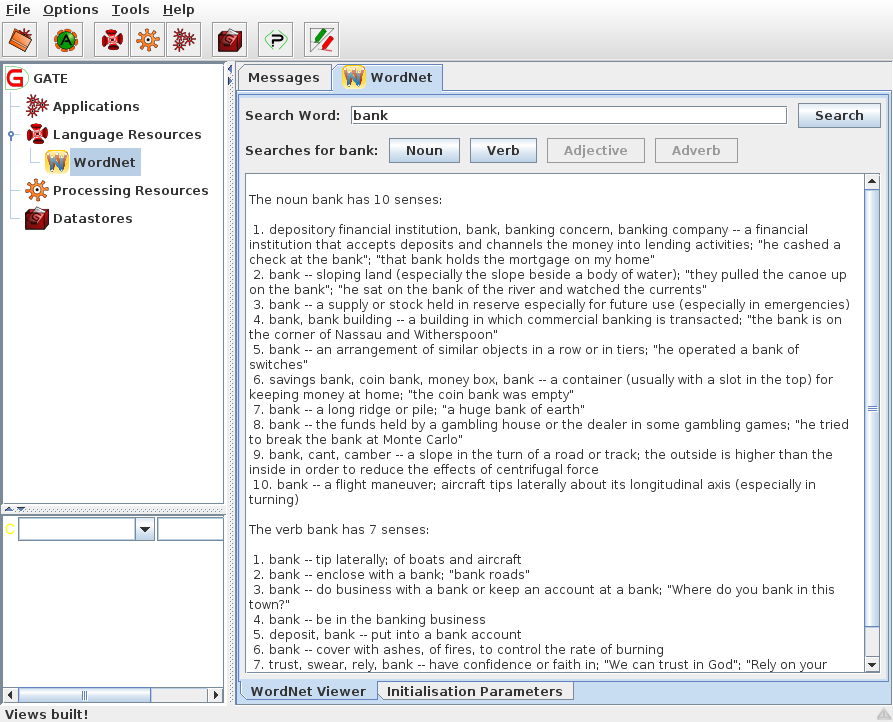

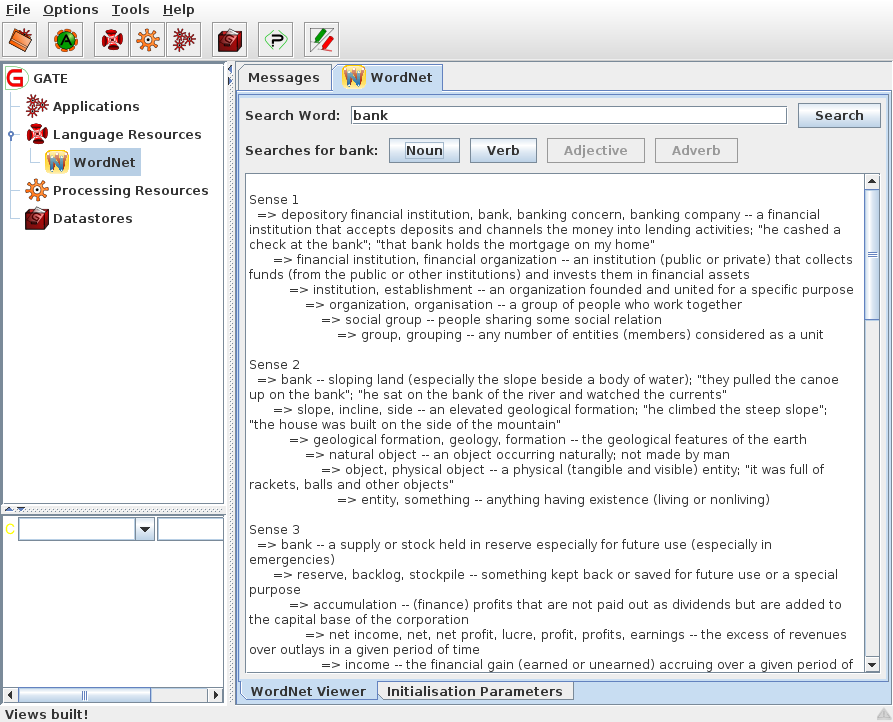

19.9 WordNet in GATE

19.9.1 The WordNet API

19.10 Kea - Automatic Keyphrase Detection

19.10.1 Using the ‘KEA Keyphrase Extractor’ PR

19.10.2 Using Kea Corpora

19.11 Ontotext JapeC Compiler

19.12 Annotation Merging Plugin

19.13 Chinese Word Segmentation

19.14 Copying Annotations between Documents

19.15 OpenCalais Plugin

19.16 LingPipe Plugin

19.16.1 LingPipe Tokenizer PR

19.16.2 LingPipe Sentence Splitter PR

19.16.3 LingPipe POS Tagger PR

19.16.4 LingPipe NER PR

19.16.5 LingPipe Language Identifier PR

19.17 OpenNLP Plugin

19.17.1 Parameters common to all PRs

19.17.2 OpenNLP PRs

19.17.3 Training new models

19.18 Tagger_MetaMap Plugin

19.18.1 Parameters

19.19 Inter Annotator Agreement

19.20 Balanced Distance Metric Computation

19.21 Schema Annotation Editor

Appendices

A Change Log

A.1 Version 6.0-beta1 (August 2010)

A.1.1 Major new features

A.1.2 Breaking changes

A.1.3 Other new features and bugfixes

A.2 Version 5.2.1 (May 2010)

A.3 Version 5.2 (April 2010)

A.3.1 JAPE and JAPE-related

A.3.2 Other Changes

A.4 Version 5.1 (December 2009)

A.4.1 New Features

A.4.2 JAPE improvements

A.4.3 Other improvements and bug fixes

A.5 Version 5.0 (May 2009)

A.5.1 Major New Features

A.5.2 Other New Features and Improvements

A.5.3 Specific Bug Fixes

A.6 Version 4.0 (July 2007)

A.6.1 Major New Features

A.6.2 Other New Features and Improvements

A.6.3 Bug Fixes and Optimizations

A.7 Version 3.1 (April 2006)

A.7.1 Major New Features

A.7.2 Other New Features and Improvements

A.7.3 Bug Fixes

A.8 January 2005

A.9 December 2004

A.10 September 2004

A.11 Version 3 Beta 1 (August 2004)

A.12 July 2004

A.13 June 2004

A.14 April 2004

A.15 March 2004

A.16 Version 2.2 – August 2003

A.17 Version 2.1 – February 2003

A.18 June 2002

B Version 5.1 Plugins Name Map

C Design Notes

C.1 Patterns

C.1.1 Components

C.1.2 Model, view, controller

C.1.3 Interfaces

C.2 Exception Handling

D JAPE: Implementation

D.1 Formal Description of the JAPE Grammar

D.2 Relation to CPSL

D.3 Initialisation of a JAPE Grammar

D.4 Execution of JAPE Grammars

D.5 Using a Different Java Compiler

E Ant Tasks for GATE

E.1 Declaring the Tasks

E.2 The packagegapp task - bundling an application with its dependencies

E.2.1 Introduction

E.2.2 Basic Usage

E.2.3 Handling Non-Plugin Resources

E.2.4 Streamlining your Plugins

E.2.5 Bundling Extra Resources

E.3 The expandcreoles Task - Merging Annotation-Driven Config into creole.xml

F Named-Entity State Machine Patterns

F.1 Main.jape

F.2 first.jape

F.3 firstname.jape

F.4 name.jape

F.4.1 Person

F.4.2 Location

F.4.3 Organization

F.4.4 Ambiguities

F.4.5 Contextual information

F.5 name_post.jape

F.6 date_pre.jape

F.7 date.jape

F.8 reldate.jape

F.9 number.jape

F.10 address.jape

F.11 url.jape

F.12 identifier.jape

F.13 jobtitle.jape

F.14 final.jape

F.15 unknown.jape

F.16 name_context.jape

F.17 org_context.jape

F.18 loc_context.jape

F.19 clean.jape

G Part-of-Speech Tags used in the Hepple Tagger

References

Part I

GATE Basics

Chapter 1

Introduction

Software documentation is like sex: when it is good, it is very, very good; and when it is bad, it is better than nothing. (Anonymous.)

There are two ways of constructing a software design: one way is to make it so simple that there are obviously no deficiencies; the other way is to make it so complicated that there are no obvious deficiencies. (C.A.R. Hoare)

A computer language is not just a way of getting a computer to perform operations but rather that it is a novel formal medium for expressing ideas about methodology. Thus, programs must be written for people to read, and only incidentally for machines to execute. (The Structure and Interpretation of Computer Programs, H. Abelson, G. Sussman and J. Sussman, 1985.)

If you try to make something beautiful, it is often ugly. If you try to make something useful, it is often beautiful. (Oscar Wilde)¹

GATE² is an infrastructure for developing and deploying software components that process human language. It is nearly 15 years old and is in active use for all types of computational task involving human language. GATE excels at text analysis of all shapes and sizes. From large corporations to small startups, from multi-million-euro research consortia to undergraduate projects, our user community is the largest and most diverse of any system of this type, and is spread across all but one of the continents³.

GATE is open source free software; users can obtain free support from the user and developer community via GATE.ac.uk or on a commercial basis from our industrial partners. We are the biggest open source language processing project with a development team more than double the size of the largest comparable projects (many of which are integrated with GATE⁴). More than €5 million has been invested in GATE development⁵; our objective is to make sure that this continues to be money well spent for all GATE’s users.

GATE has grown over the years to include a desktop client for developers, a workflow-based web application, a Java library, an architecture and a process. GATE is:

- an IDE, GATE Developer⁶: an integrated development environment for language processing components bundled with a very widely used Information Extraction system and a comprehensive set of other plugins

- a web app, GATE Teamware: a collaborative annotation environment for factory-style semantic annotation projects built around a workflow engine and a heavily-optimised backend service infrastructure

- a framework, GATE Embedded: an object library optimised for inclusion in diverse applications giving access to all the services used by GATE Developer and more

- an architecture: a high-level organisational picture of how language processing software is composed

- a process for the creation of robust and maintainable services.

We also develop:

- a wiki/CMS, GATE Wiki (http://gatewiki.sf.net/), mainly to host our own websites and as a testbed for some of our experiments

- a cloud computing solution for hosted large-scale text processing, GATE Cloud (http://gatecloud.net/)

For more information see the family pages.

One of our original motivations was to remove the necessity for solving common engineering problems before doing useful research, or re-engineering before deploying research results into applications. Core functions of GATE take care of the lion’s share of the engineering:

- modelling and persistence of specialised data structures

- measurement, evaluation, benchmarking (never believe a computing researcher who hasn’t measured their results in a repeatable and open setting!)

- visualisation and editing of annotations, ontologies, parse trees, etc.

- a finite state transduction language for rapid prototyping and efficient implementation of shallow analysis methods (JAPE)

- extraction of training instances for machine learning

- pluggable machine learning implementations (Weka, SVM Light, ...)

On top of the core functions GATE includes components for diverse language processing tasks, e.g. parsers, morphology, tagging, Information Retrieval tools, Information Extraction components for various languages, and many others. GATE Developer and Embedded are supplied with an Information Extraction system (ANNIE) which has been adapted and evaluated very widely (numerous industrial systems, research systems evaluated in MUC, TREC, ACE, DUC, Pascal, NTCIR, etc.). ANNIE is often used to create RDF or OWL (metadata) for unstructured content (semantic annotation).

GATE version 1 was written in the mid-1990s; at the turn of the new millennium we completely rewrote the system in Java; version 5 was released in June 2009. We believe that GATE is the leading system of its type, but as scientists we have to advise you not to take our word for it; that’s why we’ve measured our software in many of the competitive evaluations over the last decade-and-a-half (MUC, TREC, ACE, DUC and more; see Section 1.4 for details). We invite you to give it a try, to get involved with the GATE community, and to contribute to human language science, engineering and development.

This book describes how to use GATE to develop language processing components, test their performance and deploy them as parts of other applications. In the rest of this chapter:

- Section 1.1 describes the best way to use this book;

- Section 1.2 briefly notes that the context of GATE is applied language processing, or Language Engineering;

- Section 1.3 gives an overview of developing using GATE;

- Section 1.4 lists publications describing GATE performance in evaluations;

- Section 1.5 outlines what is new in the current version of GATE;

- Section 1.6 lists other publications about GATE.

Note: if you don’t see the component you need in this document, or if we mention a component that you can’t see in the software, contact gate-users@lists.sourceforge.net⁷ – various components are developed by our collaborators, who we will be happy to put you in contact with. (Often the process of getting a new component is as simple as typing the URL into GATE Developer; the system will do the rest.)

1.1 How to Use this Text

The material presented in this book ranges from the conceptual (e.g. ‘what is software architecture?’) to practical instructions for programmers (e.g. how to deal with GATE exceptions) and linguists (e.g. how to write a pattern grammar). Furthermore, GATE’s highly extensible nature means that new functionality is constantly being added in the form of new plugins, and important functionality is as likely to be located in a plugin as in the GATE core. This presents something of an organisational challenge. Our (no doubt imperfect) solution is to divide this book into three parts.

Part I covers installation, using the GATE Developer GUI and using ANNIE, as well as providing some background and theory. We recommend that new users begin with Part I. Part II covers the more advanced core GATE functionality: the GATE Embedded API and the JAPE pattern language, among other things. Part III provides a reference for the numerous plugins that have been created for GATE, grouped into broad areas of functionality. Although ANNIE provides a good starting point, users will soon wish to explore other resources, and so will need to consult this part of the text; we recommend that Part III be used as a reference, to be dipped into as necessary.

1.2 Context

GATE can be thought of as a Software Architecture for Language Engineering [Cunningham 00].

‘Software Architecture’ is used rather loosely here to mean computer infrastructure for software development, including development environments and frameworks, as well as the more usual use of the term to denote a macro-level organisational structure for software systems [Shaw & Garlan 96].

Language Engineering (LE) may be defined as:

…the discipline or act of engineering software systems that perform tasks involving processing human language. Both the construction process and its outputs are measurable and predictable. The literature of the field relates to both application of relevant scientific results and a body of practice. [Cunningham 99a]

The relevant scientific results in this case are the outputs of Computational Linguistics, Natural Language Processing and Artificial Intelligence in general. Unlike these other disciplines, LE, as an engineering discipline, entails predictability, both of the process of constructing LE-based software and of the performance of that software after its completion and deployment in applications.

Some working definitions:

- Computational Linguistics (CL): science of language that uses computation as an investigative tool.

- Natural Language Processing (NLP): science of computation whose subject matter is data structures and algorithms for computer processing of human language.

- Language Engineering (LE): building NLP systems whose cost and outputs are measurable and predictable.

- Software Architecture: macro-level organisational principles for families of systems. In this context the term is also used to mean infrastructure.

- Software Architecture for Language Engineering (SALE): software infrastructure, architecture and development tools for applied CL, NLP and LE.

(Of course the practice of these fields is broader and more complex than these definitions.)

In the scientific endeavours of NLP and CL, GATE’s role is to support experimentation. In this context GATE’s significant features include support for automated measurement (see Chapter 10), providing a ‘level playing field’ where results can easily be repeated across different sites and environments, and reducing research overheads in various ways.

1.3 Overview

1.3.1 Developing and Deploying Language Processing Facilities

GATE as an architecture suggests that the elements of software systems that process natural language can usefully be broken down into various types of component, known as resources⁸. Components are reusable software chunks with well-defined interfaces, and are a popular architectural form, used in Sun’s Java Beans and Microsoft’s .Net, for example. GATE components are specialised types of Java Bean, and come in three flavours:

- LanguageResources (LRs) represent entities such as lexicons, corpora or ontologies;

- ProcessingResources (PRs) represent entities that are primarily algorithmic, such as parsers, generators or ngram modellers;

- VisualResources (VRs) represent visualisation and editing components that participate in GUIs.

These definitions can be blurred in practice as necessary.
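As a rough illustration of the three flavours, the sketch below models them as small Java interfaces. It is purely illustrative: every type name in it (`ToyResource`, `ToyDocument`, `ToyProcessingResource`, `ToyVisualResource` and the two implementations) is hypothetical and is not one of GATE's own interfaces; it only shows how data-holding, algorithmic and display components can be kept apart behind well-defined interfaces.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical, simplified stand-ins for the three resource flavours.
// None of these types is part of the GATE API.
interface ToyResource {
    String getName();
}

/** Data-like component (cf. LanguageResource): a document holding text and tokens. */
class ToyDocument implements ToyResource {
    private final String name;
    private final String content;
    private final List<String> tokens = new ArrayList<String>();

    ToyDocument(String name, String content) {
        this.name = name;
        this.content = content;
    }

    public String getName() { return name; }
    String getContent() { return content; }
    List<String> getTokens() { return tokens; }
}

/** Algorithmic component (cf. ProcessingResource): does work on a document. */
interface ToyProcessingResource extends ToyResource {
    void execute(ToyDocument doc);
}

/** Display component (cf. VisualResource): renders a document for a user. */
interface ToyVisualResource extends ToyResource {
    String render(ToyDocument doc);
}

class WhitespaceTokeniser implements ToyProcessingResource {
    public String getName() { return "whitespace-tokeniser"; }

    public void execute(ToyDocument doc) {
        doc.getTokens().clear();
        // Split on runs of whitespace and skip the empty string produced by blank input.
        for (String token : doc.getContent().trim().split("\\s+")) {
            if (!token.isEmpty()) {
                doc.getTokens().add(token);
            }
        }
    }
}

class TokenListView implements ToyVisualResource {
    public String getName() { return "token-list-view"; }

    public String render(ToyDocument doc) {
        return doc.getName() + ": " + String.join(" | ", doc.getTokens());
    }
}

class ResourceFlavoursDemo {
    public static void main(String[] args) {
        ToyDocument doc = new ToyDocument("example", "GATE processes human language");
        new WhitespaceTokeniser().execute(doc);               // processing step
        System.out.println(new TokenListView().render(doc));  // display step
    }
}
```

The processing component only touches the document through its public methods, and the view only reads from it, which is the kind of separation the component model is meant to encourage.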

Collectively, the set of resources integrated with GATE is known as CREOLE: a Collection of REusable Objects for Language Engineering. All the resources are packaged as Java Archive (or ‘JAR’) files, plus some XML configuration data. The JAR and XML files are made available to GATE by putting them on a web server, or simply placing them in the local file space. Section 1.3.2 introduces GATE’s built-in resource set.

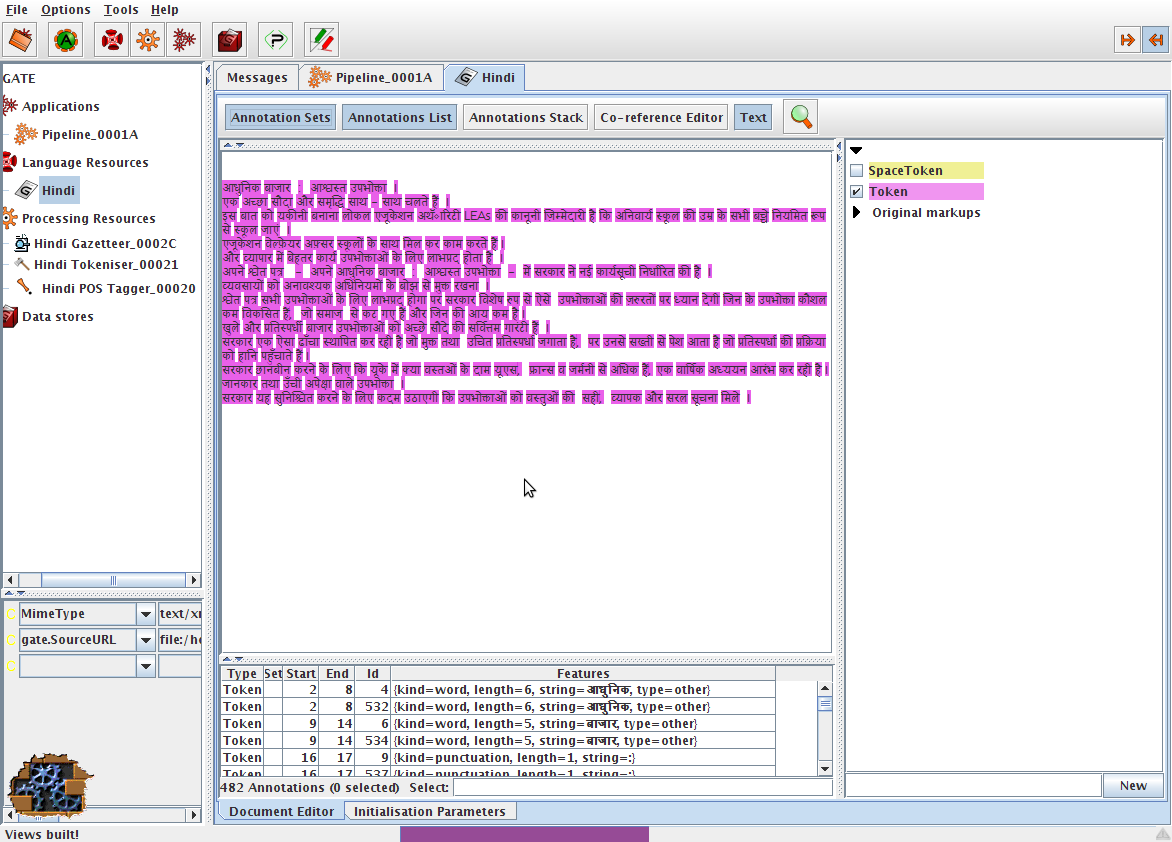

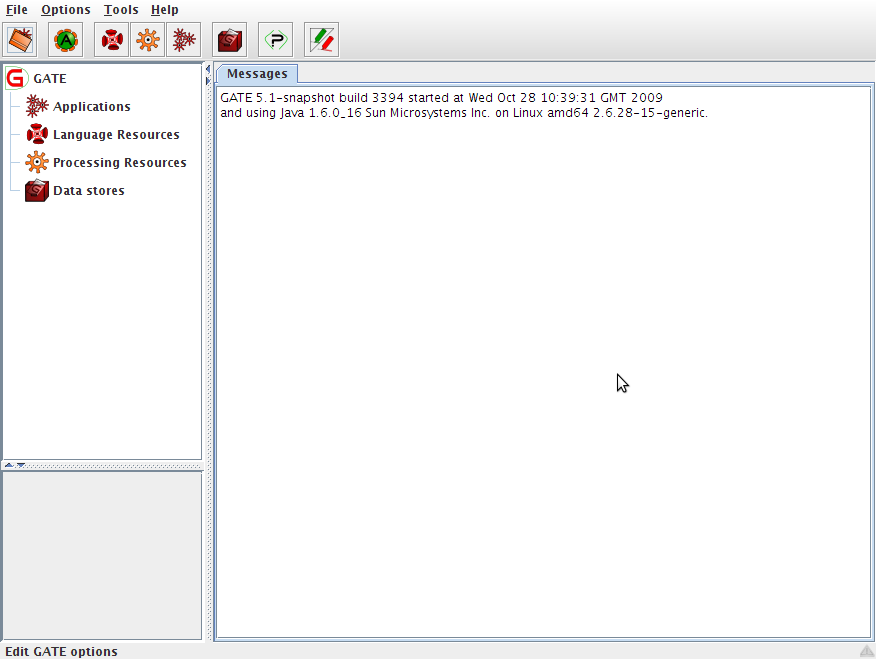

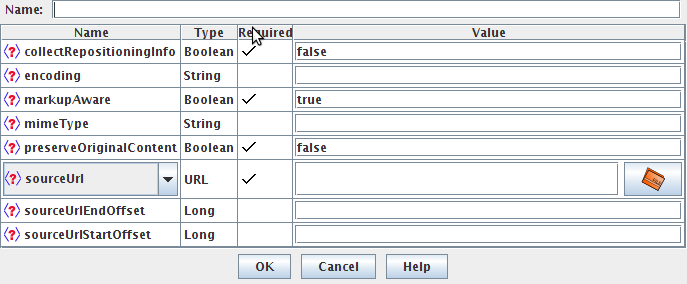

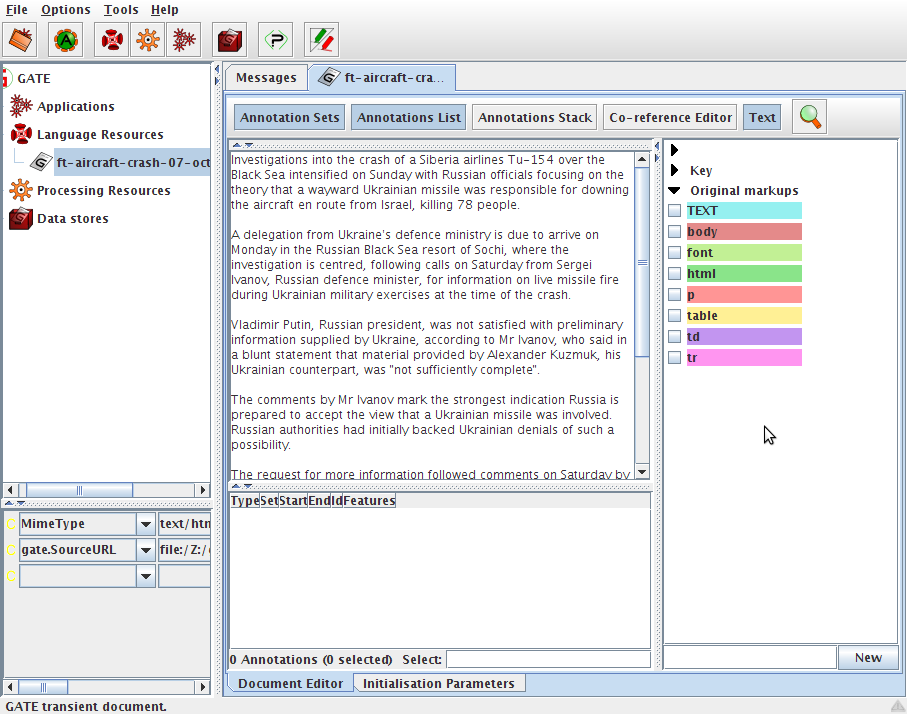

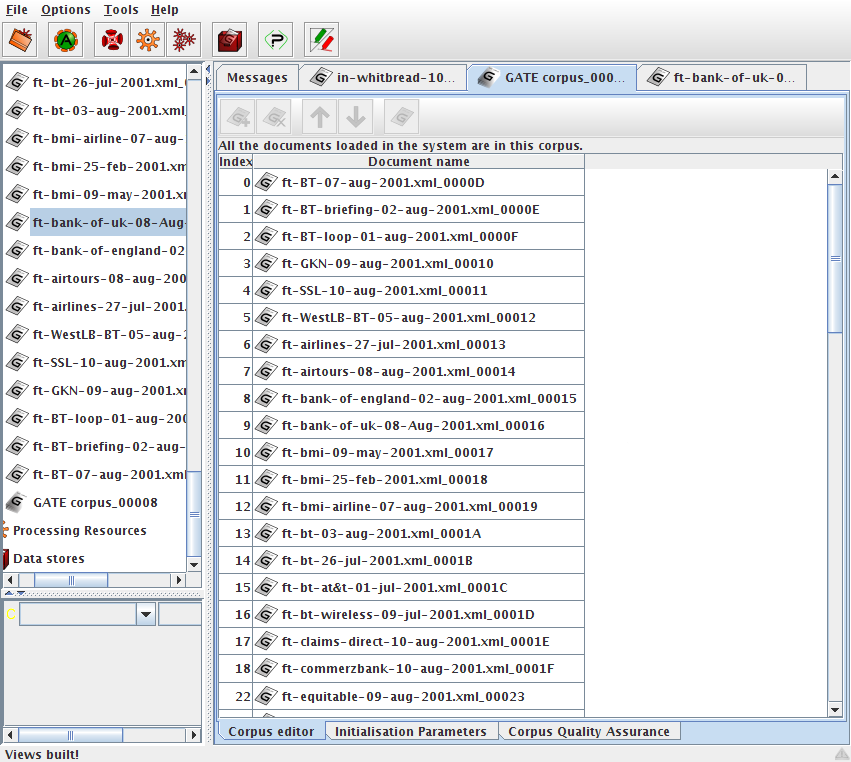

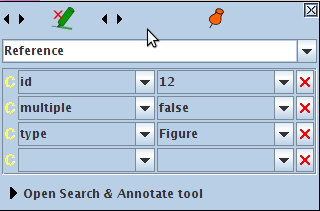

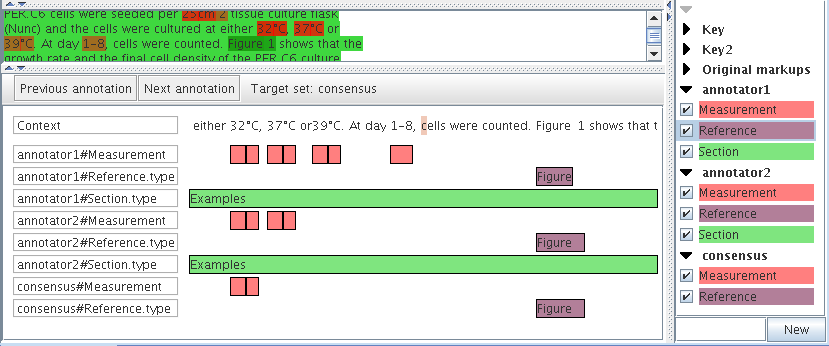

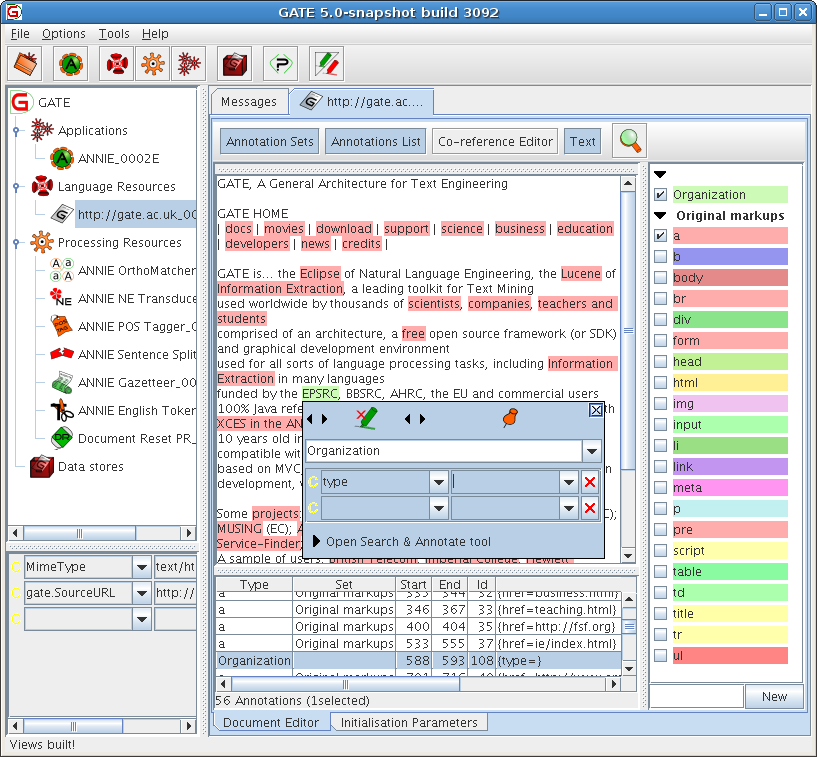

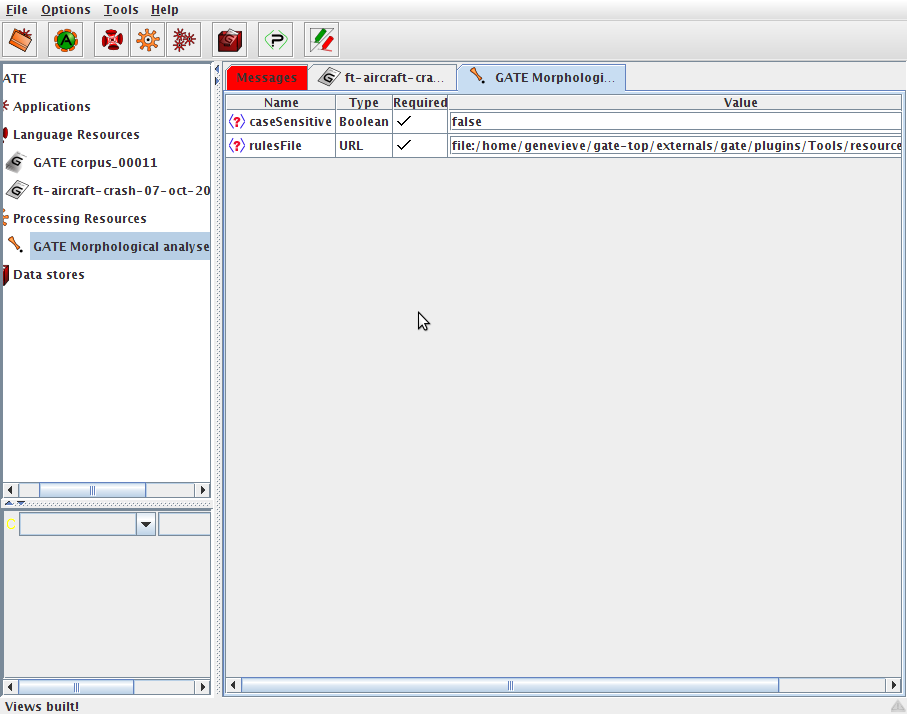

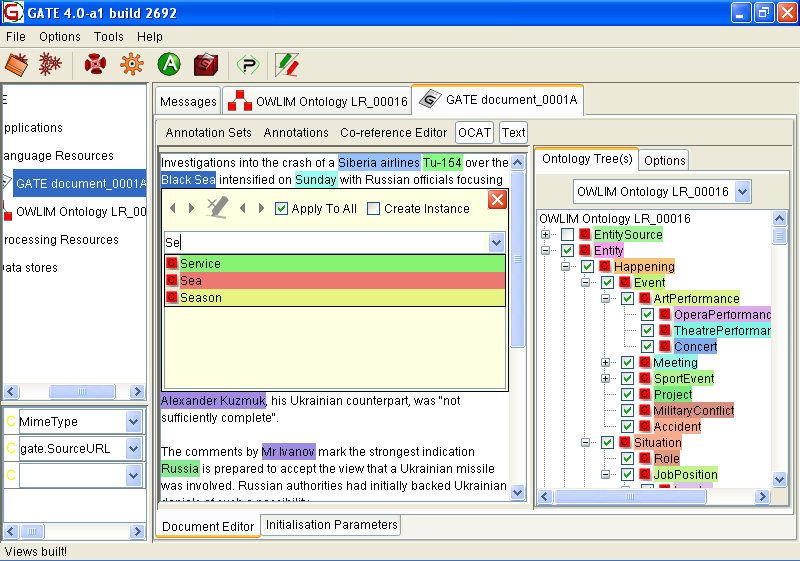

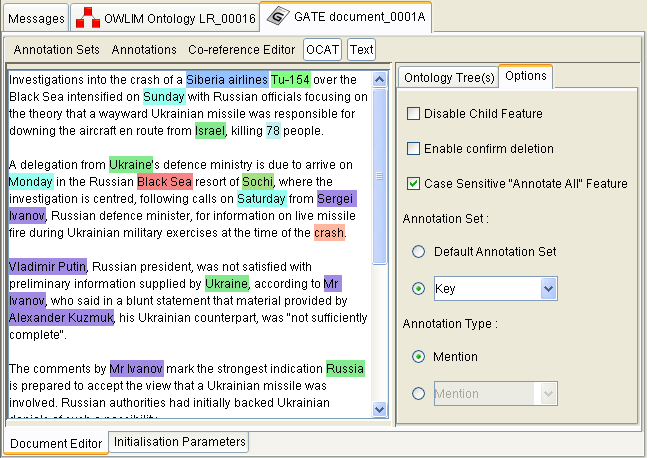

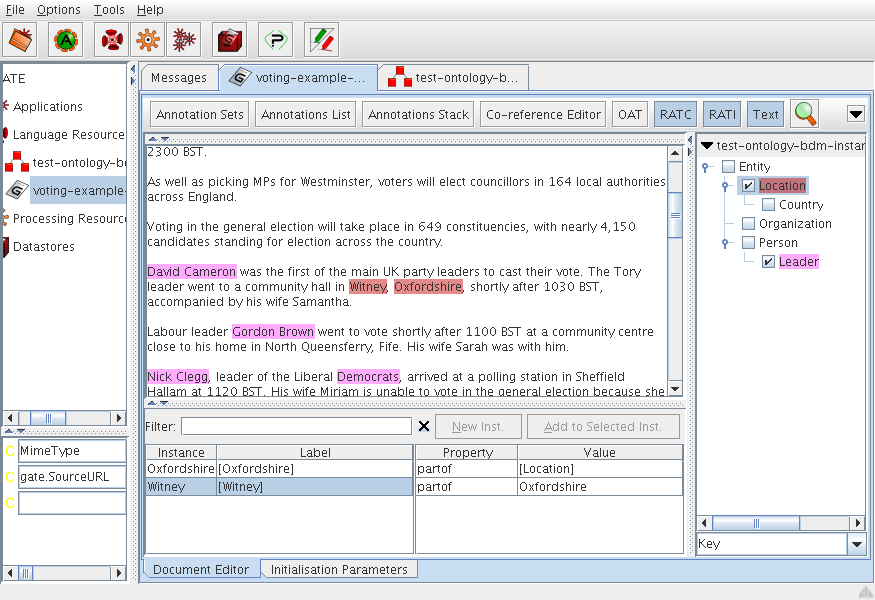

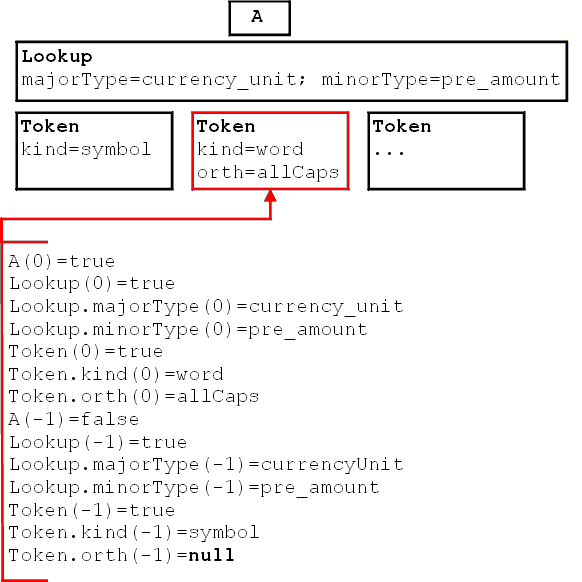

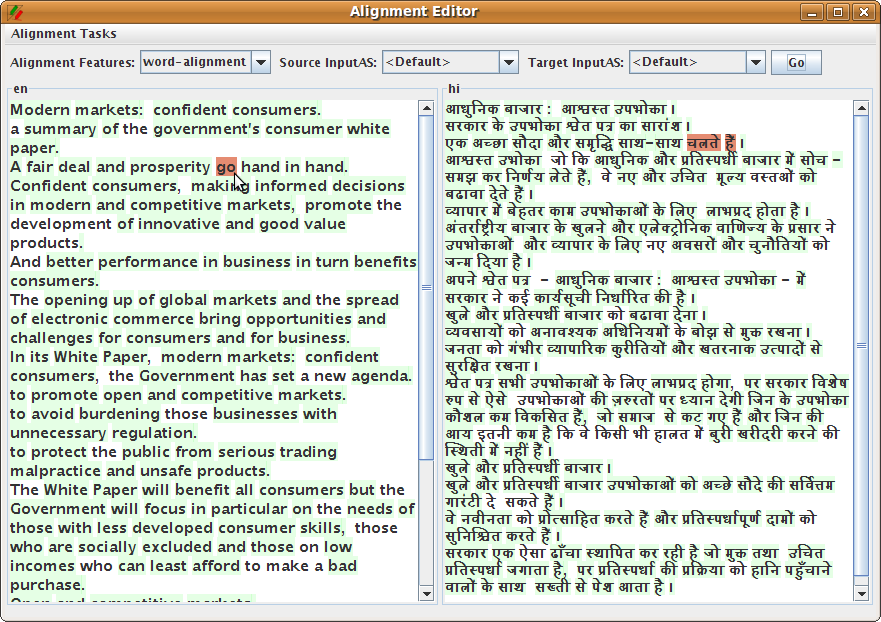

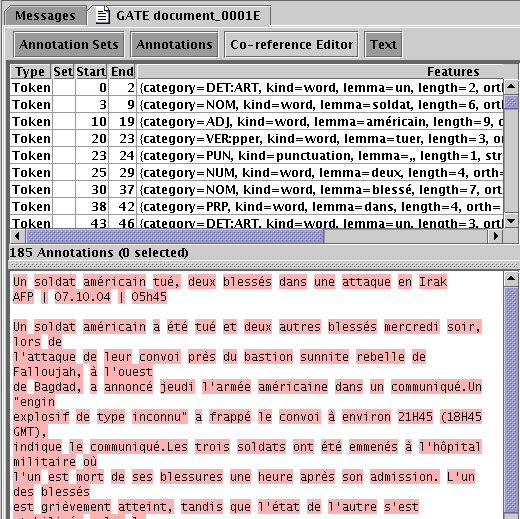

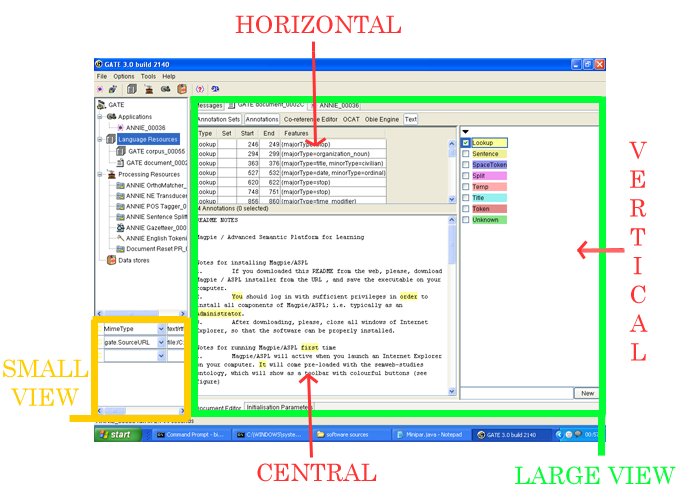

When using GATE to develop language processing functionality for an application, the developer uses GATE Developer and GATE Embedded to construct resources of the three types. This may involve programming, or the development of Language Resources such as grammars that are used by existing Processing Resources, or a mixture of both. GATE Developer is used for visualisation of the data structures produced and consumed during processing, and for debugging, performance measurement and so on. For example, Figure 1.1 is a screenshot of one of the visualisation tools.

GATE Developer is analogous to systems like Mathematica for mathematicians, or JBuilder for Java programmers: it provides a convenient graphical environment for research and development of language processing software.

When an appropriate set of resources has been developed, they can then be embedded in the target client application using GATE Embedded. GATE Embedded is supplied as a series of JAR files.⁹ To embed GATE-based language processing facilities in an application, these JAR files are all that is needed, along with JAR files and XML configuration files for the various resources that make up the new facilities.

1.3.2 Built-In Components

GATE includes resources for common LE data structures and algorithms, including documents, corpora and various annotation types, a set of language analysis components for Information Extraction and a range of data visualisation and editing components.

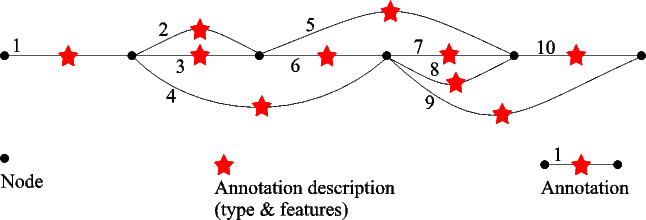

GATE supports documents in a variety of formats including XML, RTF, email, HTML, SGML and plain text. In all cases the format is analysed and converted into a single unified model of annotation. The annotation format is a modified form of the TIPSTER format [Grishman 97] which has been made largely compatible with the Atlas format [Bird & Liberman 99], and uses the now standard mechanism of ‘stand-off markup’. GATE documents, corpora and annotations are stored in databases of various sorts, visualised via the development environment, and accessed at code level via the framework. See Chapter 5 for more details of corpora etc.
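The idea of stand-off markup can be illustrated with a minimal sketch. The classes below (`StandOffAnnotation`, `StandOffDocument`, `StandOffDemo`) are hypothetical names invented for this illustration and are not GATE's own document or annotation classes; the sketch only assumes that an annotation is a typed pair of character offsets with optional features, stored separately from text that is never modified.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Illustrative stand-off annotation (hypothetical, not GATE's Annotation class). */
final class StandOffAnnotation {
    final String type;   // e.g. "Person"
    final int start;     // offset of the first character (inclusive)
    final int end;       // offset just past the last character (exclusive)
    final Map<String, String> features = new LinkedHashMap<String, String>();

    StandOffAnnotation(String type, int start, int end) {
        this.type = type;
        this.start = start;
        this.end = end;
    }
}

/** Illustrative document: unmodified text plus a separate list of annotations. */
final class StandOffDocument {
    final String text;
    final List<StandOffAnnotation> annotations = new ArrayList<StandOffAnnotation>();

    StandOffDocument(String text) {
        this.text = text;
    }

    /** Adds an annotation that points into the text by offset. */
    StandOffAnnotation annotate(String type, int start, int end) {
        if (start < 0 || end > text.length() || start > end) {
            throw new IllegalArgumentException("offsets out of range");
        }
        StandOffAnnotation a = new StandOffAnnotation(type, start, end);
        annotations.add(a);
        return a;
    }

    /** Returns the stretch of text that an annotation covers. */
    String covered(StandOffAnnotation a) {
        return text.substring(a.start, a.end);
    }
}

final class StandOffDemo {
    public static void main(String[] args) {
        StandOffDocument doc = new StandOffDocument("Fatima works at Cyberdyne Systems.");
        doc.annotate("Person", 0, 6).features.put("source", "gazetteer");
        doc.annotate("Organization", 16, 33);
        for (StandOffAnnotation a : doc.annotations) {
            System.out.println(a.type + " [" + a.start + "," + a.end + "): " + doc.covered(a));
        }
    }
}
```

Because the markup lives outside the text, several overlapping layers of annotation can refer to the same characters without interfering with one another, which is hard to achieve with inline tags.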

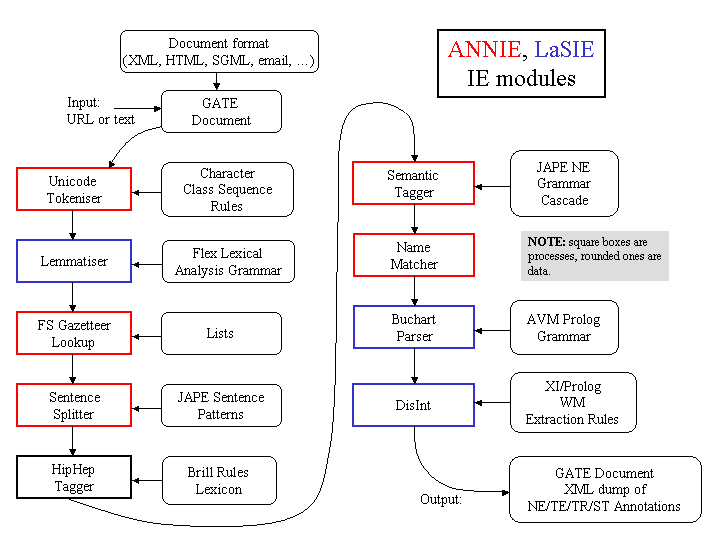

A family of Processing Resources for language analysis is included in the shape of ANNIE, A Nearly-New Information Extraction system. These components use finite state techniques to implement various tasks from tokenisation to semantic tagging or verb phrase chunking. All ANNIE components communicate exclusively via GATE’s document and annotation resources. See Chapter 6 for more details. Other CREOLE resources are described in Part III.

1.3.3 Additional Facilities

Three other facilities in GATE deserve special mention:

- JAPE, a Java Annotation Patterns Engine, provides regular-expression based pattern/action rules over annotations – see Chapter 8.

- The ‘annotation diff’ tool in the development environment implements performance metrics such as precision and recall for comparing annotations. Typically a language analysis component developer will mark up some documents by hand and then use these along with the diff tool to automatically measure the performance of the components. See Chapter 10.

- GUK, the GATE Unicode Kit, fills in some of the gaps in the JDK’s¹⁰ support for Unicode, e.g. by adding input methods for various languages from Urdu to Chinese. See Section 3.10.2 for more details.

And by version 4 it will make a mean cup of tea.

1.3.4 An Example

This section gives a very brief example of a typical use of GATE to develop and deploy language processing capabilities in an application, and to generate quantitative results for scientific publication.

Let’s imagine that a developer called Fatima is building an email client¹¹ for Cyberdyne Systems’ large corporate Intranet. In this application she would like to have a language processing system that automatically spots the names of people in the corporation and transforms them into mailto hyperlinks.

A little investigation shows that GATE’s existing components can be tailored to this purpose. Fatima starts up GATE Developer, and creates a new document containing some example emails. She then loads some processing resources that will do named-entity recognition (a tokeniser, gazetteer and semantic tagger), and creates an application to run these components on the document in sequence. Having processed the emails, she can see the results in one of several viewers for annotations.

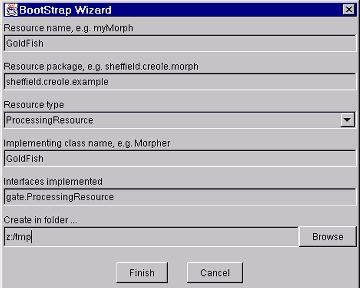

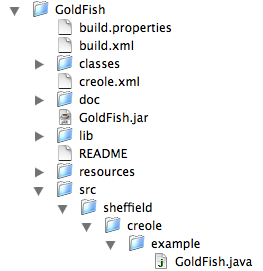

The GATE components are a decent start, but they need to be altered to deal specially with people from Cyberdyne’s personnel database. Therefore Fatima creates new ‘cyber-’ versions of the gazetteer and semantic tagger resources, using the ‘bootstrap’ tool. This tool creates a directory structure on disk that has some Java stub code, a Makefile and an XML configuration file. After several hours struggling with badly written documentation, Fatima manages to compile the stubs and create a JAR file containing the new resources. She tells GATE Developer the URL of these files¹², and the system then allows her to load them in the same way that she loaded the built-in resources earlier on.

Fatima then creates a second copy of the email document, and uses the annotation editing facilities to mark up the results that she would like to see her system producing. She saves this and the version that she ran GATE on into her serial datastore. From now on she can follow this routine:

1. Run her application on the email test corpus.

2. Check the performance of the system by running the ‘annotation diff’ tool to compare her manual results with the system’s results. This gives her both percentage accuracy figures and a graphical display of the differences between the machine and human outputs.

3. Make edits to the code, pattern grammars or gazetteer lists in her resources, and recompile where necessary.

4. Tell GATE Developer to re-initialise the resources.

5. Go to 1.
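The comparison in the ‘annotation diff’ step of this routine can be approximated by the following sketch. It is not the tool's implementation: the class `DiffSketch` and its methods are hypothetical, and it assumes that an annotation counts as correct only when its type and both offsets match the manual annotation exactly, leaving partial overlaps out of account.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.Set;

/** Illustrative scorer for exact-match annotation comparison (hypothetical). */
final class DiffSketch {

    /** Encodes an annotation as "type:start-end" so spans can be compared as set members. */
    static String span(String type, int start, int end) {
        return type + ":" + start + "-" + end;
    }

    /**
     * Returns {precision, recall, F1}.
     * key = manually created annotations, response = annotations produced by the system.
     */
    static double[] score(Set<String> key, Set<String> response) {
        Set<String> correct = new HashSet<String>(key);
        correct.retainAll(response); // annotations present in both sets
        double p = response.isEmpty() ? 0.0 : (double) correct.size() / response.size();
        double r = key.isEmpty() ? 0.0 : (double) correct.size() / key.size();
        double f = (p + r == 0.0) ? 0.0 : 2 * p * r / (p + r);
        return new double[] { p, r, f };
    }

    public static void main(String[] args) {
        // Four names marked up by hand; the system finds two of them
        // and gets the end offset of a third wrong.
        Set<String> key = new HashSet<String>(Arrays.asList(
                span("Person", 0, 6), span("Person", 20, 31),
                span("Person", 40, 52), span("Person", 60, 70)));
        Set<String> response = new HashSet<String>(Arrays.asList(
                span("Person", 0, 6), span("Person", 20, 31),
                span("Person", 40, 50)));
        double[] s = score(key, response);
        System.out.println("precision=" + s[0] + " recall=" + s[1] + " f1=" + s[2]);
    }
}
```

In this made-up example two of the three system annotations are correct (precision 2/3) and two of the four manual annotations are found (recall 1/2), so each pass through the loop gives Fatima a single set of numbers to track as she edits her grammars and lists.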

To make the alterations that she requires, Fatima re-implements the ANNIE gazetteer so that it regenerates itself from the local personnel data. She then alters the pattern grammar in the semantic tagger to prioritise recognition of names from that source. This latter job involves learning the JAPE language (see Chapter 8), but as this is based on regular expressions it isn’t too difficult.
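As a rough illustration of what Fatima's finished system does, the sketch below rebuilds a name list from personnel data and rewrites each known name as a mailto hyperlink. It is a stand-in written with plain `java.util.regex`, not the ANNIE gazetteer or a JAPE grammar; the class `MailtoLinker`, its methods, and the staff name and address are all made up for the example. It assumes names begin and end with a letter and does no HTML escaping.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustrative name spotter and mailto rewriter (hypothetical, not a GATE resource). */
final class MailtoLinker {
    private final Map<String, String> directory = new LinkedHashMap<String, String>();
    private Pattern names; // null until reload() has seen at least one name

    /** Regenerates the name list from personnel data (full name mapped to email address). */
    void reload(Map<String, String> personnel) {
        directory.clear();
        directory.putAll(personnel);
        if (directory.isEmpty()) {
            names = null;
            return;
        }
        // Try longer names first so that a longer entry beats a shorter entry it starts with.
        List<String> sorted = new ArrayList<String>(directory.keySet());
        sorted.sort((a, b) -> b.length() - a.length());
        StringBuilder alternatives = new StringBuilder();
        for (String name : sorted) {
            if (alternatives.length() > 0) {
                alternatives.append('|');
            }
            alternatives.append(Pattern.quote(name));
        }
        names = Pattern.compile("\\b(?:" + alternatives + ")\\b");
    }

    /** Replaces every known name in the text with a mailto hyperlink. */
    String link(String text) {
        if (names == null) {
            return text;
        }
        Matcher m = names.matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String name = m.group();
            String html = "<a href=\"mailto:" + directory.get(name) + "\">" + name + "</a>";
            m.appendReplacement(out, Matcher.quoteReplacement(html));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> staff = new LinkedHashMap<String, String>();
        staff.put("Miles Dyson", "m.dyson@cyberdyne.example"); // made-up entry
        MailtoLinker linker = new MailtoLinker();
        linker.reload(staff);
        System.out.println(linker.link("Please ask Miles Dyson about the budget."));
    }
}
```

A plain lookup like this cannot cope with the nickname cases mentioned in the text, which is one reason the real system combines a gazetteer with pattern grammars over annotations rather than matching strings directly.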

Eventually the system is running nicely, and her accuracy is 93% (there are still some problem cases, e.g. when people use nicknames, but the performance is good enough for production use). Now Fatima stops using GATE Developer and works instead on embedding the new components in her email application using GATE Embedded. This application is written in Java, so embedding is very easy¹³: the GATE JAR files are added to the project CLASSPATH, the new components are placed on a web server, and with a little code to do initialisation, loading of components and so on, the job is finished in half a day. The code to talk to GATE takes up only around 150 lines of the eventual application, most of which is just copied from the example in the sheffield.examples.StandAloneAnnie class.

Because Fatima is worried about Cyberdyne’s unethical policy of developing Skynet to help the large corporates of the West strengthen their strangle-hold over the World, she wants to get a job as an academic instead (so that her conscience will only have to cope with the torture of students, as opposed to humanity). She takes the accuracy measures that she has attained for her system and writes a paper for the Journal of Nasturtium Logarithm Incitement describing the approach used and the results obtained. Because she used GATE for development, she can cite the repeatability of her experiments and offer access to example binary versions of her software by putting them on an external web server.

And everybody lived happily ever after.

1.4 Some Evaluations

This section contains an incomplete list of publications describing systems that used GATE in competitive quantitative evaluation programmes. These programmes have had a significant impact on the language processing field and the widespread presence of GATE is some measure of the maturity of the system and of our understanding of its likely performance on diverse text processing tasks.

- [Li et al. 07d] describes the performance of an SVM-based learning system in the NTCIR-6 Patent Retrieval Task. The system achieved the best result on two of three measures used in the task evaluation, namely the R-Precision and F-measure. The system obtained close to the best result on the remaining measure (A-Precision).

- [Saggion 07] describes a cross-source coreference resolution system based on semantic clustering. It uses GATE for information extraction and the SUMMA system to create summaries and semantic representations of documents. One system configuration ranked 4th in the Web People Search 2007 evaluation.

- [Saggion 06] describes a cross-lingual summarization system which uses SUMMA components and the Arabic plugin available in GATE to produce summaries in English from a mixture of English and Arabic documents.

- Open-Domain Question Answering: The University of Sheffield has a long history of research into open-domain question answering. GATE has formed the basis of much of this research, resulting in systems which have ranked highly during independent evaluations since 1999. The first successful question answering system developed at the University of Sheffield was evaluated as part of TREC 8 and used the LaSIE information extraction system (the forerunner of ANNIE) which was distributed with GATE [Humphreys et al. 99]. Further research was reported in [Scott & Gaizauskas. 00], [Greenwood et al. 02], [Gaizauskas et al. 03], [Gaizauskas et al. 04] and [Gaizauskas et al. 05]. In 2004 the system was ranked 9th out of 28 participating groups.

- [Saggion 04] describes techniques for answering definition questions. The system uses definition patterns manually implemented in GATE as well as learned JAPE patterns induced from a corpus. In 2004, the system was ranked 4th in the TREC/QA evaluations.

- [Saggion & Gaizauskas 04b] describes a multidocument summarization system implemented using summarization components compatible with GATE (the SUMMA system). The system was ranked 2nd in the Document Understanding Evaluation programmes.

- [Maynard et al. 03e] and [Maynard et al. 03d] describe participation in the TIDES surprise language program. ANNIE was adapted to Cebuano with four person days of effort, and achieved an F-measure of 77.5%. Unfortunately, ours was the only system participating!

- [Maynard et al. 02b] and [Maynard et al. 03b] describe results obtained on systems designed for the ACE task (Automatic Content Extraction). Although a comparison to other participating systems cannot be revealed due to the stipulations of ACE, results show 82%-86% precision and recall.

- [Humphreys et al. 98] describes the LaSIE-II system used in MUC-7.

- [Gaizauskas et al. 95] describes the LaSIE system used in MUC-6.

1.5 Changes in this Version

This section logs changes in the latest version of GATE. Appendix A provides a complete change log.

1.5.1 Version 6.0-beta1 (August 2010)

Major new features

Added an annotation tool for the document editor: the Relation Annotation Tool (RAT). It is designed to annotate a document with ontology instances and to create relations between annotations with ontology object properties. It is similar to and compatible with the Ontology Annotation Tool (OAT) but focuses on relations between annotations. See section 14.7 for details.

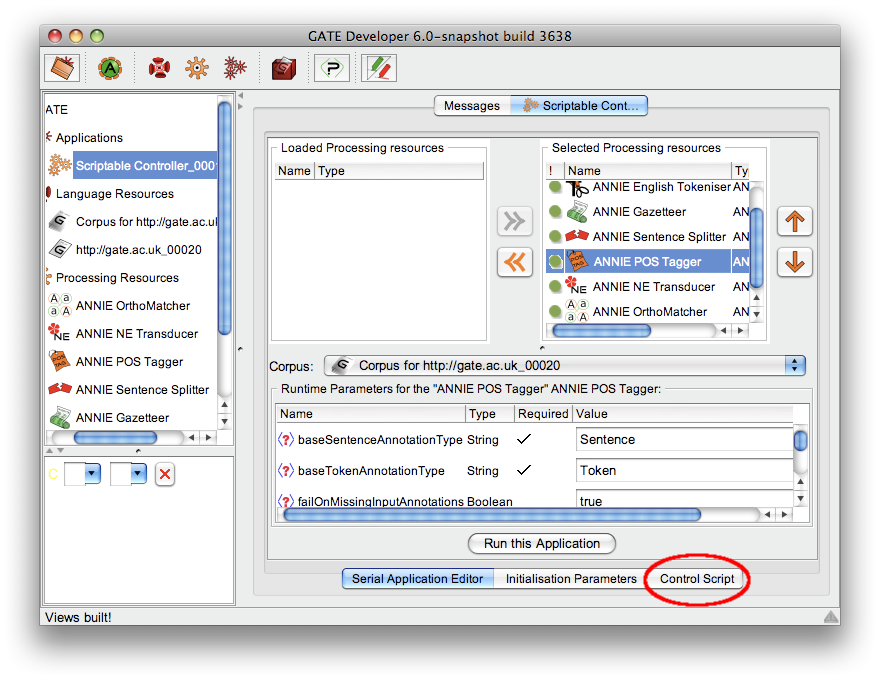

Added a new scriptable controller to the Groovy plugin, whose execution strategy is controlled by a simple Groovy DSL. This supports more powerful conditional execution than is possible with the standard conditional controllers (for example, based on the presence or absence of a particular annotation, or a combination of several document feature values), rich flow control using Groovy loops, etc. See section 7.16.3 for details.

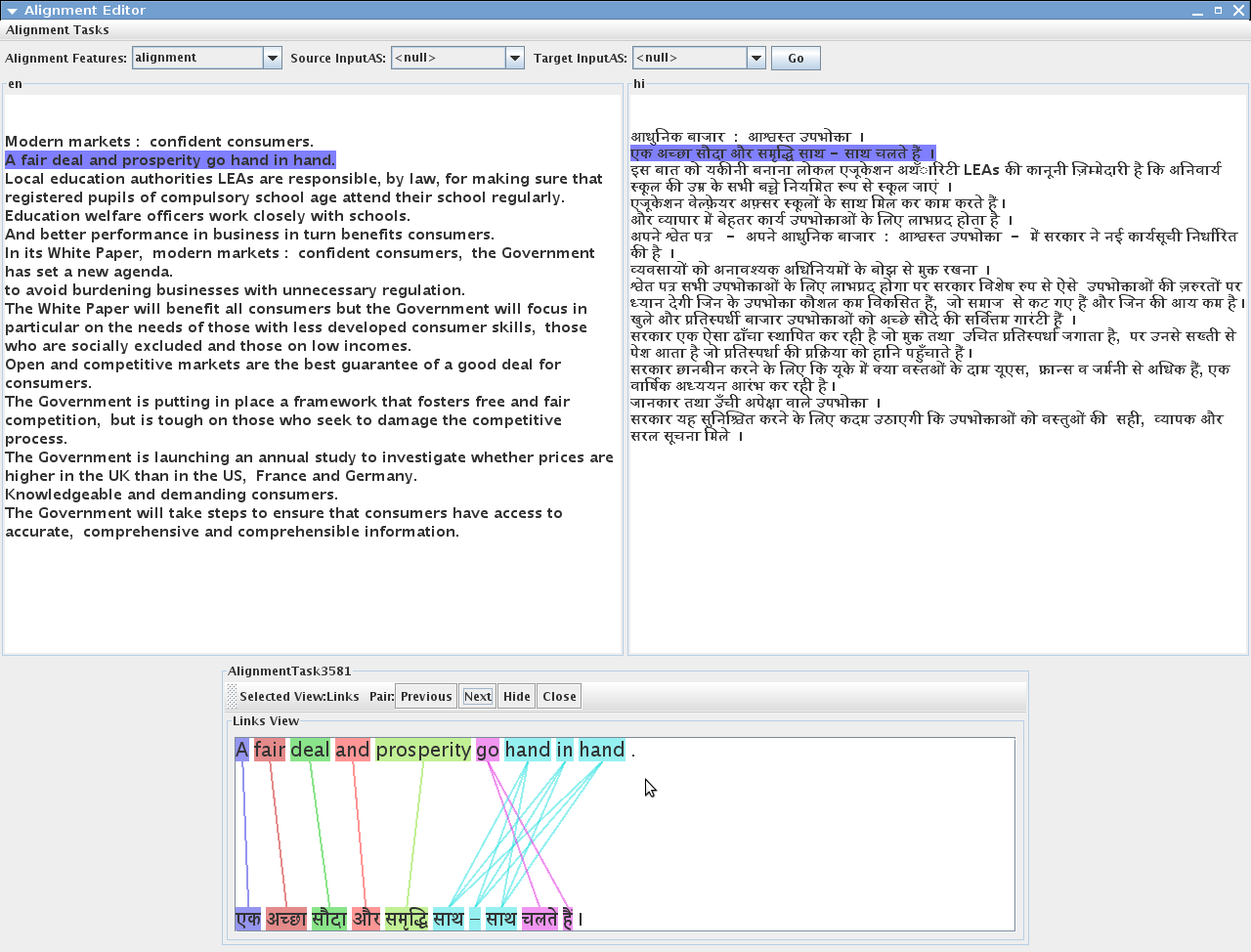

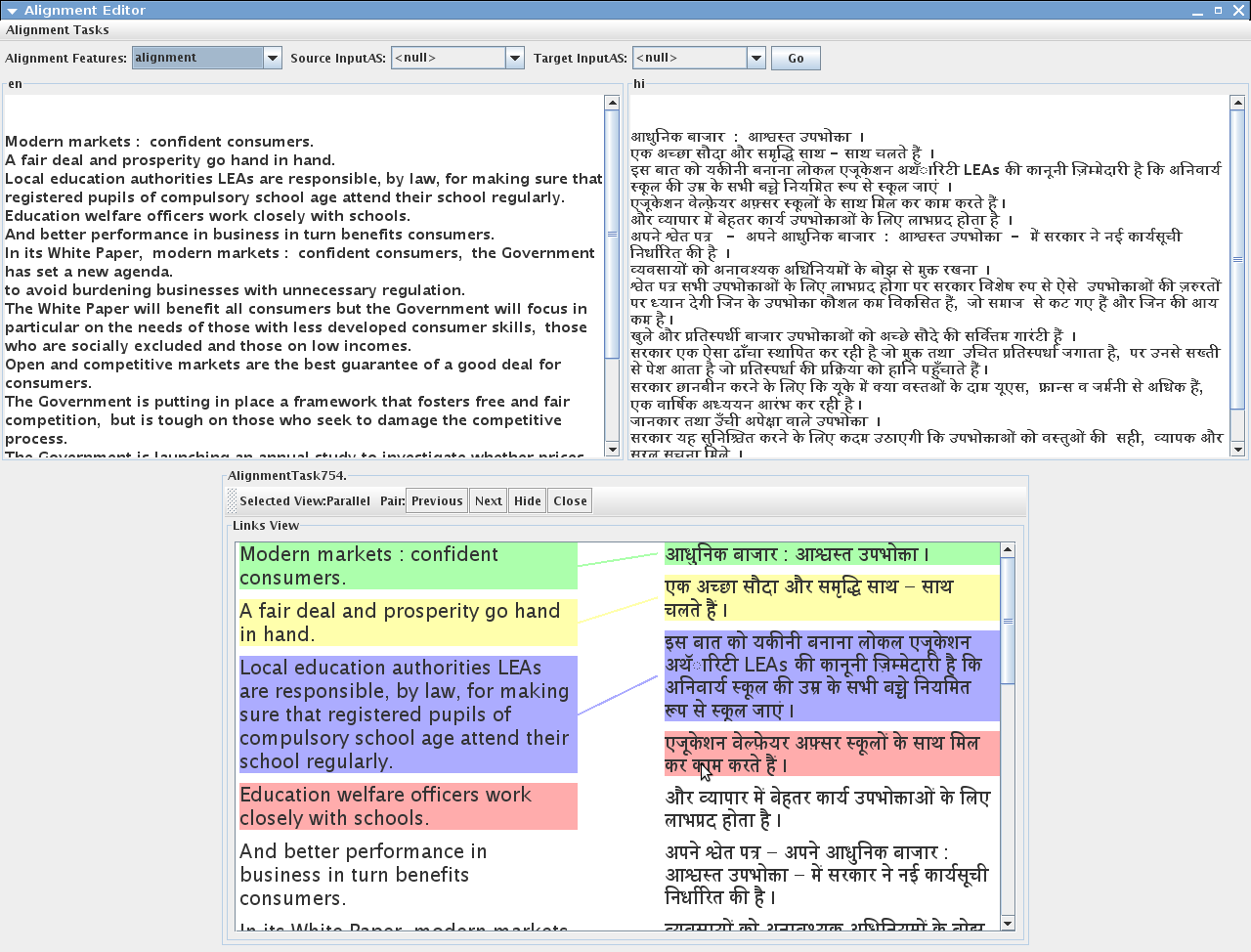

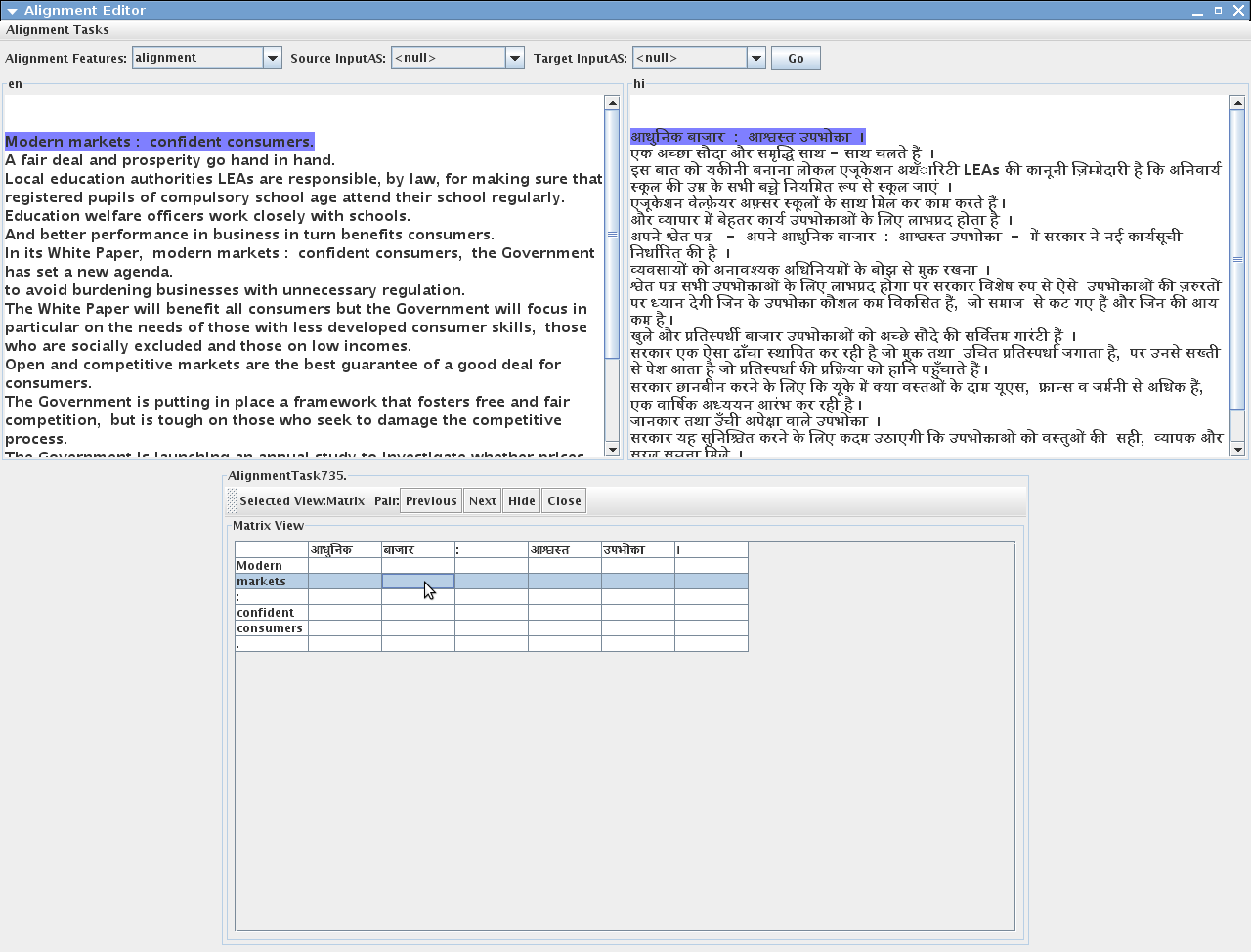

A new version of the Alignment Editor has been added to the GATE distribution. Its new features include a new alignment viewer, the ability to create alignment tasks and store them in XML files, three different views for aligning text (the links view and matrix view, suitable for character, word and phrase alignments, and the parallel view, suitable for sentence or long text alignment), an alignment exporter and many more. See chapter 16 for more information.

MetaMap, from the National Library of Medicine (NLM), maps biomedical text to the UMLS Metathesaurus and allows Metathesaurus concepts to be discovered in a text corpus. The Tagger_MetaMap plugin for GATE wraps the MetaMap Java API client to allow GATE to communicate with a remote (or local) MetaMap PrologBeans mmserver and MetaMap distribution. This allows the content of specified annotations (or the entire document content) to be processed by MetaMap and the results converted to GATE annotations and features. See section 19.18 for details.

A new plugin called Web_Translate_Google has been added. It contains a PR called Google Translator PR, which allows users to translate text using the Google translation services. See section 19.8 for more information.

A new Gazetteer Editor for the ANNIE Gazetteer can be used instead of Gaze. It uses tables instead of a text area to display the gazetteer definition and lists, and allows sorting on any column, filtering of the lists, reloading a list, etc. See section 13.2.2.

Breaking changes

This release contains a few small changes that are not backwards-compatible:

- Changed the semantics of the ontology-aware matching mode in JAPE to take account of the default namespace in an ontology. Now class feature values that are not complete URIs will be treated as naming classes within the default namespace of the target ontology only, and not (as previously) any class whose URI ends with the specified name. This is more consistent with the way OWL normally works, as well as being much more efficient to execute. See section 14.10 for more details.

- Updated the WordNet plugin to support more recent releases of WordNet than 1.6. The format of the configuration file has changed; if you are using the previous WordNet 1.6 support you will need to update your configuration. See section 19.9 for details.
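The change to ontology-aware matching can be pictured with the following sketch, which contrasts a literal reading of the old rule with the new one. It is not JAPE's implementation: the class `OntologyClassMatch`, its methods, the crude test for a complete URI and the example namespaces are all assumptions made for illustration, and subclass matching is left out entirely.

```java
/** Illustrative comparison of the old and new name resolution rules (hypothetical). */
final class OntologyClassMatch {

    /** Old behaviour as described above: any class whose URI ends with the given name matches. */
    static boolean matchesOld(String classUri, String featureValue) {
        return classUri.endsWith(featureValue);
    }

    /** New behaviour as described above: a bare name is resolved in the default namespace only. */
    static boolean matchesNew(String classUri, String featureValue, String defaultNamespace) {
        String resolved = isCompleteUri(featureValue)
                ? featureValue
                : defaultNamespace + featureValue;
        return classUri.equals(resolved);
    }

    /** Crude check, assumed for this sketch: a value containing a colon is a complete URI. */
    static boolean isCompleteUri(String value) {
        return value.indexOf(':') >= 0;
    }

    public static void main(String[] args) {
        String defaultNs = "http://example.org/onto#";          // made-up default namespace
        String local = defaultNs + "Person";
        String foreign = "http://other.example/vocab#Person";   // made-up imported class

        System.out.println("old, foreign: " + matchesOld(foreign, "Person"));
        System.out.println("new, foreign: " + matchesNew(foreign, "Person", defaultNs));
        System.out.println("new, local:   " + matchesNew(local, "Person", defaultNs));
        System.out.println("new, full URI: " + matchesNew(foreign, foreign, defaultNs));
    }
}
```

Under these assumptions a grammar that relied on a bare name such as `Person` to match a class outside the default namespace would need to spell out the complete URI after upgrading.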

Other new features and bugfixes

The concept of templates has been introduced to JAPE. This is a way to declare named “variables” in a JAPE grammar that can contain placeholders that are filled in when the template is referenced. See section 8.1.5 for full details.

Added a new API to the CREOLE registry to permit plugins that live entirely on the classpath. CreoleRegister.registerComponent instructs the registry to scan a single Java class for annotations, adding it to the set of registered plugins. See section 7.3 for details.

Maven artifacts for GATE are now published to Ontotext’s public Maven repository. See section 2.5.1 for details.

Bugfix: DocumentImpl no longer changes its stringContent parameter value whenever the document’s content changes. Among other things, this means that saved application states will no longer contain the full text of the documents in their corpus, and documents containing XML or HTML tags that were originally created from string content (rather than a URL) can now safely be stored in saved application states and the GATE Developer saved session.

A processing resource called Quality Assurance PR has been added in the Tools plugin. The PR wraps the functionality of the Quality Assurance Tool (section 10.3).

A new section on using Corpus Quality Assurance from GATE Embedded has been written. See section 10.3.

Added new parameters and options to the LingPipe Language Identifier PR (section 19.16.5), and corrected the documentation for the LingPipe POS Tagger (section 19.16.3).

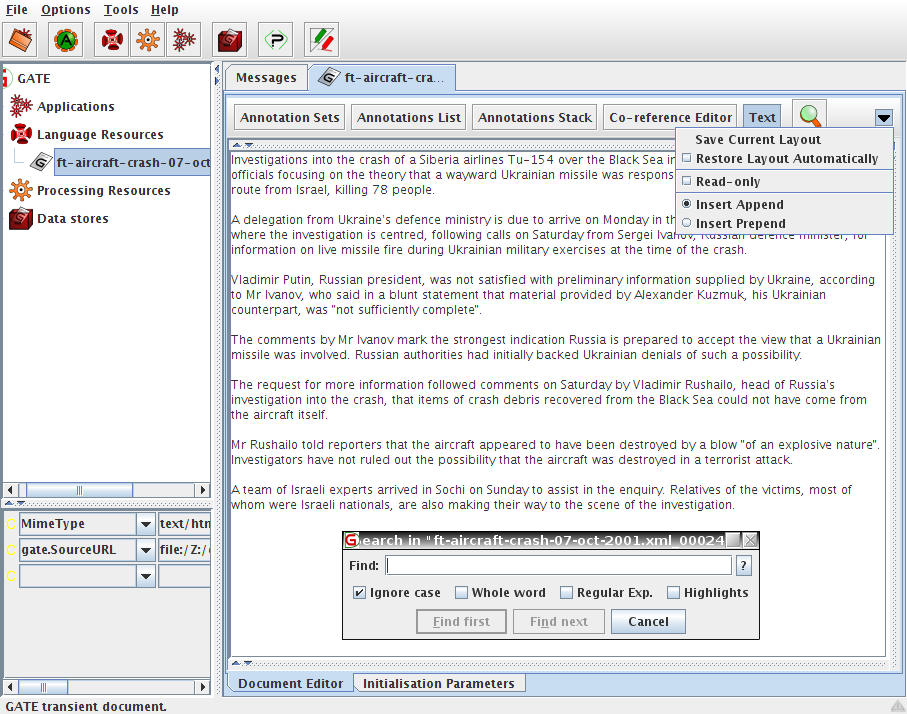

In the document editor, fixed several exceptions so that editing text while annotations are highlighted now works. You should now be able to edit the text, and the annotations should behave correctly, that is to say move, expand or disappear according to the text insertions and deletions.

The document editor options read-only and insert append/prepend have been moved from the options dialogue to the document editor toolbar: the triangle icon at the top right displays a menu with these options. See section 3.2.

Added new parameters and options to the Crawl PR and document features to its output; see section 19.5 for details.

Fixed a bug where ontology-aware JAPE rules worked correctly when the target annotation’s class was a subclass of the class specified in the rule, but failed when the two class names matched exactly.

Added the current Corpus to the script binding for the Groovy Script PR, allowing a Groovy script to access and set corpus-level features. Also added callbacks that a Groovy script can implement to do additional pre- or post-processing before the first and after the last document in a corpus. See section 7.16 for details.

1.6 Further Reading

Lots of documentation lives on the GATE web server, including:

- movies of the system in operation;

- the main system documentation tree;

- JavaDoc API documentation;

- HTML of the source code;

- parts of the requirements analysis that version 3 was based on.

For more details about Sheffield University’s work in human language processing see the NLP group pages or A Definition and Short History of Language Engineering ([Cunningham 99a]). For more details about Information Extraction see IE, a User Guide or the GATE IE pages.

A list of publications on GATE and projects that use it (some of which are available on-line):

2009

- [Bontcheva et al. 09] is the ‘Human Language Technologies’ chapter of ‘Semantic Knowledge Management’ (John Davies, Marko Grobelnik and Dunja Mladenić, eds.).

- [Damljanovic et al. 09] (to appear).

- [Laclavik & Maynard 09] reviews the current state of the art in email processing and communication research, focusing on the roles played by email in information management, and commercial and research efforts to integrate a semantic-based approach to email.

- [Li et al. 09] investigates two techniques for making SVMs more suitable for language learning tasks. Firstly, an SVM with uneven margins (SVMUM) is proposed to deal with the problem of imbalanced training data. Secondly, SVM active learning is employed in order to alleviate the difficulty in obtaining labelled training data. The algorithms are presented and evaluated on several Information Extraction (IE) tasks.

2008

- [Agatonovic et al. 08] presents our approach to automatic patent enrichment, tested in large-scale, parallel experiments on USPTO and EPO documents.

- [Damljanovic et al. 08] presents Question-based Interface to Ontologies (QuestIO), a tool for querying ontologies using unconstrained language-based queries.

- [Damljanovic & Bontcheva 08] presents a semantic-based prototype that is made for an open-source software engineering project with the goal of exploring methods for assisting open-source developers and software users to learn and maintain the system without major effort.

- [Della Valle et al. 08] presents ServiceFinder.

- [Li & Cunningham 08] describes our SVM-based system and several techniques we developed successfully to adapt SVM for the specific features of the F-term patent classification task.

- [Li & Bontcheva 08] reviews the recent developments in applying geometric and quantum mechanics methods for information retrieval and natural language processing.

- [Maynard 08] investigates the state of the art in automatic textual annotation tools, and examines the extent to which they are ready for use in the real world.

- [Maynard et al. 08a] discusses methods of measuring the performance of ontology-based information extraction systems, focusing particularly on the Balanced Distance Metric (BDM), a new metric we have proposed which aims to take into account the more flexible nature of ontologically-based applications.

- [Maynard et al. 08b] investigates NLP techniques for ontology population, using a combination of rule-based approaches and machine learning.

- [Tablan et al. 08] presents the QuestIO system, a natural language interface for accessing structured information that is domain independent and easy to use without training.

2007

- [Funk et al. 07a] describes an ontologically based approach to multi-source, multilingual information extraction.

- [Funk et al. 07b] presents a controlled language for ontology editing and a software implementation, based partly on standard NLP tools, for processing that language and manipulating an ontology.

- [Maynard et al. 07a] proposes a methodology to capture (1) the evolution of metadata induced by changes to the ontologies, and (2) the evolution of the ontology induced by changes to the underlying metadata.

- [Maynard et al. 07b] describes the development of a system for content mining using domain ontologies, which enables the extraction of relevant information to be fed into models for analysis of financial and operational risk and other business intelligence applications such as company intelligence, by means of the XBRL standard.

- [Saggion 07] describes experiments for the cross-document coreference task in SemEval 2007. Our cross-document coreference system uses an in-house agglomerative clustering implementation to group documents referring to the same entity.

- [Saggion et al. 07] describes the application of ontology-based extraction and merging in the context of a practical e-business application for the EU MUSING Project where the goal is to gather international company intelligence and country/region information.

- [Li et al. 07a] introduces a hierarchical learning approach for IE, which uses the target ontology as an essential part of the extraction process, by taking into account the relations between concepts.

- [Li et al. 07b] proposes some new evaluation measures based on relations among classification labels, which can be seen as the label relation sensitive version of important measures such as averaged precision and F-measure, and presents the results of applying the new evaluation measures to all submitted runs for the NTCIR-6 F-term patent classification task.

- [Li et al. 07c] describes the algorithms and linguistic features used in our participating system for the opinion analysis pilot task at NTCIR-6.

- [Li et al. 07d] describes our SVM-based system and the techniques we used to adapt the approach for the specifics of the F-term patent classification subtask at the NTCIR-6 Patent Retrieval Task.

- [Li & Shawe-Taylor 07] studies Japanese-English cross-language patent retrieval using Kernel Canonical Correlation Analysis (KCCA), a method of correlating linear relationships between two variables in kernel defined feature spaces.

2006

- [Aswani et al. 06] (Proceedings of the 5th International Semantic Web Conference (ISWC2006)) addresses the problem of disambiguating author instances in an ontology, describing a web-based approach that uses various features such as publication titles, abstracts, initials and co-authorship information.

- [Bontcheva et al. 06a] is ‘Semantic Annotation and Human Language Technology’, a contribution to ‘Semantic Web Technology: Trends and Research’ (Davies, Studer and Warren, eds.).

- [Bontcheva et al. 06b] is ‘Semantic Information Access’, a contribution to ‘Semantic Web Technology: Trends and Research’ (Davies, Studer and Warren, eds.).

- [Bontcheva & Sabou 06] presents an ontology learning approach that 1) exploits a range of information sources associated with software projects and 2) relies on techniques that are portable across application domains.

- [Davis et al. 06] describes work in progress concerning the application of Controlled Language Information Extraction (CLIE) to a Personal Semantic Wiki (SemperWiki), the goal being to permit users who have no specialist knowledge in ontology tools or languages to semi-automatically annotate their respective personal Wiki pages.

- [Li & Shawe-Taylor 06] studies a machine learning algorithm based on KCCA for cross-language information retrieval. The algorithm is applied to Japanese-English cross-language information retrieval.

- [Maynard et al. 06] discusses existing evaluation metrics, and proposes a new method for evaluating the ontology population task, which is general enough to be used in a variety of situations, yet more precise than many current metrics.

- [Tablan et al. 06a] describes an approach that allows users to create and edit ontologies simply by using a restricted version of the English language. The controlled language described is based on an open vocabulary and a restricted set of grammatical constructs.

- [Tablan et al. 06b] describes the creation of linguistic analysis and corpus search tools for Sumerian, as part of the development of the ETCSL.

- [Wang et al. 06] proposes an SVM based approach to hierarchical relation extraction, using features derived automatically from a number of GATE-based open-source language processing tools.

2005

- [Aswani et al. 05] (Proceedings of Fifth International Conference on Recent Advances in Natural Language Processing (RANLP2005)) describes a full-featured annotation indexing and search engine, developed as a part of GATE. It is powered by Apache Lucene technology and indexes the variety of documents supported by GATE.

- [Bontcheva 05] presents the ONTOSUM system which uses Natural Language Generation (NLG) techniques to produce textual summaries from Semantic Web ontologies.

- [Cunningham 05] is an overview of the field of Information Extraction for the 2nd Edition of the Encyclopaedia of Language and Linguistics.

- [Cunningham & Bontcheva 05] is an overview of the field of Software Architecture for Language Engineering for the 2nd Edition of the Encyclopaedia of Language and Linguistics.

- [Dowman et al. 05a] (Euro Interactive Television Conference Paper) describes a system which can use material from the Internet to augment television news broadcasts.

- [Dowman et al. 05b] (World Wide Web Conference Paper) uses the Web to assist the annotation and indexing of broadcast news.

- [Dowman et al. 05c] (Second European Semantic Web Conference Paper) describes a system that semantically annotates television news broadcasts using news websites as a resource to aid in the annotation process.

- [Li et al. 05a] (Proceedings of Sheffield Machine Learning Workshop) describes an SVM based IE system which uses the SVM with uneven margins as its learning component and GATE as its NLP processing module.

- [Li et al. 05b] (Proceedings of Ninth Conference on Computational Natural Language Learning (CoNLL-2005)) uses the uneven margins versions of two popular learning algorithms, SVM and Perceptron, for IE to deal with the imbalanced classification problems derived from IE.

- [Li et al. 05c] (Proceedings of Fourth SIGHAN Workshop on Chinese Language processing (Sighan-05)) describes a system for Chinese word segmentation based on Perceptron learning, a simple, fast and effective learning algorithm.

- [Polajnar et al. 05] (University of Sheffield Research Memorandum CS-05-10) User-Friendly Ontology Authoring Using a Controlled Language.

- [Saggion & Gaizauskas 05] describes experiments on content selection for producing biographical summaries from multiple documents.

- [Ursu et al. 05] (Proceedings of the 2nd European Workshop on the Integration of Knowledge, Semantic and Digital Media Technologies (EWIMT 2005)) Digital Media Preservation and Access through Semantically Enhanced Web-Annotation.

- [Wang et al. 05] (Proceedings of the 2005 IEEE/WIC/ACM International Conference on Web Intelligence (WI 2005)) Extracting a Domain Ontology from Linguistic Resource Based on Relatedness Measurements.

2004

- [Bontcheva 04] (LREC 2004) describes lexical and ontological resources in GATE used for Natural Language Generation.

- [Bontcheva et al. 04] (JNLE) discusses developments in GATE in the early noughties.

- [Cunningham & Scott 04a] (JNLE) is the introduction to the collection [Cunningham & Scott 04b].

- [Cunningham & Scott 04b] (JNLE) is a collection of papers covering many important areas of Software Architecture for Language Engineering.

- [Dimitrov et al. 04] (Anaphora Processing) gives a lightweight method for named entity coreference resolution.

- [Li et al. 04] (Machine Learning Workshop 2004) describes an SVM based learning algorithm for IE using GATE.

- [Maynard et al. 04a] (LREC 2004) presents algorithms for the automatic induction of gazetteer lists from multi-language data.

- [Maynard et al. 04b] (ESWS 2004) discusses ontology-based IE in the hTechSight project.

- [Maynard et al. 04c] (AIMSA 2004) presents automatic creation and monitoring of semantic metadata in a dynamic knowledge portal.

- [Saggion & Gaizauskas 04a] describes an approach to mining definitions.

- [Saggion & Gaizauskas 04b] describes a sentence extraction system that produces two sorts of multi-document summaries: a general-purpose summary of a cluster of related documents and an entity-based summary of documents related to a particular person.

- [Wood et al. 04] (NLDB 2004) looks at ontology-based IE from parallel texts.

2003

- [Bontcheva et al. 03] (NLPXML-2003) looks at GATE for the semantic web.

- [Cunningham et al. 03] (Corpus Linguistics 2003) describes GATE as a tool for collaborative corpus annotation.

- [Kiryakov 03] (Technical Report) discusses semantic web technology in the context of multimedia indexing and search.

- [Manov et al. 03] (HLT-NAACL 2003) describes experiments with geographic knowledge for IE.

- [Maynard et al. 03a] (EACL 2003) looks at the distinction between information and content extraction.

- [Maynard et al. 03c] (Recent Advances in Natural Language Processing 2003) looks at semantics and named-entity extraction.

- [Maynard et al. 03e] (ACL Workshop 2003) describes NE extraction without training data on a language you don’t speak (!).

- [Saggion et al. 03a] (EACL 2003) discusses robust, generic and query-based summarisation.

- [Saggion et al. 03b] (Data and Knowledge Engineering) discusses multimedia indexing and search from multisource multilingual data.

- [Saggion et al. 03c] (EACL 2003) discusses event co-reference in the MUMIS project.

- [Tablan et al. 03] (HLT-NAACL 2003) presents the OLLIE on-line learning for IE system.

- [Wood et al. 03] (Recent Advances in Natural Language Processing 2003) discusses using parallel texts to improve IE recall.

2002

- [Baker et al. 02] (LREC 2002) reports results from the EMILLE Indic languages corpus collection and processing project.

- [Bontcheva et al. 02a] (ACL 2002 Workshop) describes how GATE can be used as an environment for teaching NLP, with examples of and ideas for future student projects developed within GATE.

- [Bontcheva et al. 02b] (NLIS 2002) discusses how GATE can be used to create HLT modules for use in information systems.

- [Bontcheva et al. 02c], [Dimitrov 02a] and [Dimitrov 02b] (TALN 2002, DAARC 2002, MSc thesis) describe the shallow named entity coreference modules in GATE: the orthomatcher, which resolves orthographic coreference, and the pronoun resolution module.

- [Cunningham 02] (Computers and the Humanities) describes the philosophy and motivation behind the system, describes GATE version 1 and how well it lived up to its design brief.

- [Cunningham et al. 02] (ACL 2002) describes the GATE framework and graphical development environment as a tool for robust NLP applications.

- [Dimitrov 02a, Dimitrov et al. 02] (DAARC 2002, MSc thesis) discuss lightweight coreference methods.

- [Lal 02] (Master’s thesis) looks at text summarisation using GATE.

- [Lal & Ruger 02] (ACL 2002) looks at text summarisation using GATE.

- [Maynard et al. 02a] (ACL 2002 Summarisation Workshop) describes using GATE to build a portable IE-based summarisation system in the domain of health and safety.

- [Maynard et al. 02c] (AIMSA 2002) describes the adaptation of the core ANNIE modules within GATE to the ACE (Automatic Content Extraction) tasks.

- [Maynard et al. 02d] (Nordic Language Technology) describes various Named Entity recognition projects developed at Sheffield using GATE.

- [Maynard et al. 02e] (JNLE) describes robustness and predictability in LE systems, and presents GATE as an example of a system which contributes to robustness and to low overhead systems development.

- [Pastra et al. 02] (LREC 2002) discusses the feasibility of grammar reuse in applications using ANNIE modules.

- [Saggion et al. 02b] and [Saggion et al. 02a] (LREC 2002, SPLPT 2002) describe how ANNIE modules have been adapted to extract information for indexing multimedia material.

- [Tablan et al. 02] (LREC 2002) describes GATE’s enhanced Unicode support.

Older than 2002

- [Maynard et al. 01]

- (RANLP 2001) discusses a project using ANNIE for named-entity recognition across wide varieties of text type and genre.

- [Bontcheva et al. 00] and [Brugman et al. 99]

- (COLING 2000, technical report) describe a prototype of GATE version 2 that integrated with the EUDICO multimedia markup tool from the Max Planck Institute.

- [Cunningham 00]

- (PhD thesis) defines the field of Software Architecture for Language Engineering, reviews previous work in the area, presents a requirements analysis for such systems (which was used as the basis for designing GATE versions 2 and 3), and evaluates the strengths and weaknesses of GATE version 1.

- [Cunningham et al. 00a], [Cunningham et al. 98a] and [Peters et al. 98]

- (OntoLex 2000, LREC 1998) present GATE’s model of Language Resources, their access and distribution.

- [Cunningham et al. 00b]

- (LREC 2000) taxonomises Language Engineering components and discusses the requirements analysis for GATE version 2.

- [Cunningham et al. 00c] and [Cunningham et al. 99]

- (COLING 2000, AISB 1999) summarise experiences with GATE version 1.

- [Cunningham et al. 00d] and [Cunningham 99b]

- (technical reports) document early versions of JAPE (superseded by the present document).

- [Gambäck & Olsson 00]

- (LREC 2000) discusses experiences in the Svensk project, which used GATE version 1 to develop a reusable toolbox of Swedish language processing components.

- [Maynard et al. 00]

- (technical report) surveys users of GATE up to mid-2000.

- [McEnery et al. 00]

- (Vivek) presents the EMILLE project in the context of which GATE’s Unicode support for Indic languages has been developed.

- [Cunningham 99a]

- (JNLE) reviewed and synthesised definitions of Language Engineering.

- [Stevenson et al. 98] and [Cunningham et al. 98b]

- (ECAI 1998, NeMLaP 1998) report work on implementing a word sense tagger in GATE version 1.

- [Cunningham et al. 97b]

- (ANLP 1997) presents motivation for GATE and GATE-like infrastructural systems for Language Engineering.

- [Cunningham et al. 96a]

- (manual) was the guide to developing CREOLE components for GATE version 1.

- [Cunningham et al. 96b]

- (TIPSTER) discusses a selection of projects in Sheffield using GATE version 1 and the TIPSTER architecture it implemented.

- [Cunningham et al. 96c, Cunningham et al. 96d, Cunningham et al. 95]

- (COLING 1996, AISB Workshop 1996, technical report) report early work on GATE version 1.

- [Gaizauskas et al. 96a]

- (manual) was the user guide for GATE version 1.

- [Gaizauskas et al. 96b, Cunningham et al. 97a, Cunningham et al. 96e]

- (ICTAI 1996, TIPSTER 1997, NeMLaP 1996) report work on GATE version 1.

- [Humphreys et al. 96]

- (manual) describes the language processing components distributed with GATE version 1.

- [Cunningham 94, Cunningham et al. 94]

- (NeMLaP 1994, technical report) argue that software engineering issues such as reuse, and framework construction, are important for language processing R&D.

Chapter 2

Installing and Running GATE

2.1 Downloading GATE

To download GATE, point your web browser at http://gate.ac.uk/download/.

2.2 Installing and Running GATE

GATE will run anywhere that supports Java 5 or later, including Solaris, Linux, Mac OS X and Windows platforms. We don’t run tests on other platforms, but have had reports of successful installs elsewhere.

2.2.1 The Easy Way

The easy way to install is to use one of the platform-specific installers (created using the excellent IzPack). Download a ‘platform-specific installer’ and follow the instructions it gives you. Once the installation is complete, you can start GATE Developer using gate.exe (Windows) or GATE.app (Mac) in the top-level installation directory, or gate.sh in the bin directory (other platforms).

Note for Mac users: on 64-bit-capable systems, GATE.app will run as a 64-bit application. It will use the first listed 64-bit JVM in your Java Preferences, even if your highest priority JVM is a 32-bit one. Thus if you want to run using Java 5 rather than 6 you must ensure that “J2SE 5.0 64-bit” is listed ahead of “Java SE 6 64-bit”.

2.2.2 The Hard Way (1)

Download the Java-only release package or the binary build snapshot, and follow the instructions below.

Prerequisites:

- A conforming Java 2 environment (version 1.4.2 or above for GATE 3.1; version 5.0 for GATE 4.0 beta 1 or later), available free from Sun Microsystems or from your UNIX supplier. (We test on various Sun JDKs on Solaris, Linux and Windows XP.)

- Binaries from the GATE distribution you downloaded: gate.jar, lib/ext/guk.jar (Unicode editing support) and a suitable script to start Ant, e.g. ant.sh or ant.bat. These are held in a directory called bin like this:

.../bin/
    gate.jar
    ant.sh
    ant.bat

You will also need the lib directory, containing various libraries that GATE depends on.

- An open mind and a sense of humour.

Using the binary distribution:

- Unpack the distribution, creating a directory containing jar files and scripts.

- To run GATE Developer: on Windows, start a Command Prompt window, change to the directory where you unpacked the GATE distribution and run ‘bin/ant.bat run’; on UNIX or Mac, open a terminal window and run ‘bin/ant run’.

- To embed GATE as a library (GATE Embedded), put gate.jar and all the libraries in the lib directory in your CLASSPATH and tell Java that guk.jar is an extension (-Djava.ext.dirs=path-to-guk.jar).

The Ant scripts that start GATE Developer (ant.bat or ant) require you to set the JAVA_HOME environment variable to point to the top level directory of your Java installation. The value of GATE_CONFIG is passed to the system by the scripts using either a -i command-line option, or the Java property gate.config.

2.2.3 The Hard Way (2): Subversion

The GATE code is maintained in a Subversion repository. You can use a Subversion client to check out the source code; the most up-to-date version of GATE is the trunk:

svn checkout https://gate.svn.sourceforge.net/svnroot/gate/gate/trunk gate

Once you have checked out the code you can build GATE using Ant (see Section 2.5).

You can browse the complete Subversion repository online at http://gate.svn.sourceforge.net/.

2.3 Using System Properties with GATE

During initialisation, GATE reads several Java system properties in order to decide where to find its configuration files.

Here is a list of the properties used, their default values and their meanings:

- gate.home

- sets the location of the GATE install directory. This should point to the top level directory of your GATE installation. This is the only property that is required. If this is not set, the system will display an error message and then it will attempt to guess the correct value.

- gate.plugins.home

- points to the location of the directory containing installed plugins (a.k.a. CREOLE directories). If this is not set then the default value of {gate.home}/plugins is used.

- gate.site.config

- points to the location of the configuration file containing the site-wide options. If not set this will default to {gate.home}/gate.xml. The site configuration file must exist!

- gate.user.config

- points to the file containing the user’s options. If not specified, or if the specified file does not exist at startup time, the default value of gate.xml (.gate.xml on Unix platforms) in the user’s home directory is used.

- gate.user.session

- points to the file containing the user’s saved session. If not specified, the default value of gate.session (.gate.session on Unix) in the user’s home directory is used. When starting up GATE Developer, the session is reloaded from this file if it exists, and when exiting GATE Developer the session is saved to this file (unless the user has disabled ‘save session on exit’ in the configuration dialog). The session is not used when using GATE Embedded.

- load.plugin.path

- is a path-like structure, i.e. a list of URLs separated by ‘;’. All directories listed here will be loaded as CREOLE plugins during initialisation. This has similar functionality to the -d command line option.

- gate.builtin.creole.dir

- is a URL pointing to the location of GATE’s built-in CREOLE directory. This is the location of the creole.xml file that defines the fundamental GATE resource types, such as documents, document format handlers, controllers and the basic visual resources that make up GATE. The default points to a location inside gate.jar and should not generally need to be overridden.
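The defaulting rules in the list above can be sketched in plain Java. This is a minimal illustration that uses only the standard library; it is not GATE's own initialisation code, and the class name GatePropertyDefaults, its method names and the /opt/gate path are hypothetical. One simplification is labelled in the code: where GATE reports an error and then tries to guess gate.home, the sketch just fails.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

// Illustrative only: mirrors the defaults described in the list above.
// Class and method names are hypothetical, not part of the GATE API.
class GatePropertyDefaults {

    // gate.home is the one required property. Simplification: this sketch
    // fails if it is missing, whereas GATE reports an error and then guesses.
    static String gateHome(Properties props) {
        String home = props.getProperty("gate.home");
        if (home == null) {
            throw new IllegalStateException("gate.home is not set");
        }
        return home;
    }

    // gate.plugins.home, defaulting to {gate.home}/plugins.
    static File pluginsHome(Properties props) {
        String explicit = props.getProperty("gate.plugins.home");
        return explicit != null ? new File(explicit) : new File(gateHome(props), "plugins");
    }

    // gate.site.config, defaulting to {gate.home}/gate.xml.
    static File siteConfig(Properties props) {
        String explicit = props.getProperty("gate.site.config");
        return explicit != null ? new File(explicit) : new File(gateHome(props), "gate.xml");
    }

    // gate.user.config; falls back to gate.xml (.gate.xml on Unix) in the user's
    // home directory when unset or when the named file does not exist.
    static File userConfig(Properties props, boolean unix) {
        String explicit = props.getProperty("gate.user.config");
        if (explicit != null && new File(explicit).exists()) {
            return new File(explicit);
        }
        return new File(props.getProperty("user.home"), unix ? ".gate.xml" : "gate.xml");
    }

    // load.plugin.path: a ';'-separated list of URLs, returned here as plain strings.
    static List<String> pluginPath(Properties props) {
        List<String> urls = new ArrayList<String>();
        for (String part : props.getProperty("load.plugin.path", "").split(";")) {
            if (part.trim().length() > 0) {
                urls.add(part.trim());
            }
        }
        return urls;
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.putAll(System.getProperties());
        if (props.getProperty("gate.home") == null) {
            props.setProperty("gate.home", "/opt/gate"); // hypothetical install location
        }
        boolean unix = File.separatorChar == '/';
        System.out.println("plugins home: " + pluginsHome(props));
        System.out.println("site config:  " + siteConfig(props));
        System.out.println("user config:  " + userConfig(props, unix));
        System.out.println("plugin path:  " + pluginPath(props));
    }
}
```

Running it with, for instance, -Dgate.home=... on the java command line shows which locations the remaining properties would fall back to under these rules.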

When using GATE Embedded, you can set the values for these properties before you call Gate.init(). Alternatively, you can set the values programmatically using the static methods setGateHome(), setPluginsHome(), setSiteConfigFile(), etc. before calling Gate.init(). See the Javadoc documentation for details. To set these values from the command line, use the following syntax (shown here for gate.home):

java -Dgate.home=/my/new/gate/home/directory -cp... gate.Main

When running GATE Developer, you can set the properties by creating a file build.properties in the top level GATE directory. In this file, any system properties which are prefixed with ‘run.’ will be passed to GATE. For example, to set an alternative user config file, put the following line in build.properties:

run.gate.user.config=${user.home}/alternative-gate.xml

This facility is not limited to the GATE-specific properties listed above. For example, the following line changes the default temporary directory for GATE (note the use of forward slashes, even on Windows platforms):

run.java.io.tmpdir=d:/bigtmp
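To make the ‘run.’ convention concrete, here is a minimal Java sketch of the idea: every entry whose name starts with ‘run.’ has the prefix stripped and becomes a -Dname=value argument for the JVM that runs GATE. It illustrates the convention only and is not the logic of GATE's Ant build; the class RunPrefixedProperties, its method toJvmArgs and the /home/alice path are hypothetical.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Properties;

// Illustrative only: shows how 'run.'-prefixed entries could map to JVM
// system properties. Not taken from GATE's build scripts.
class RunPrefixedProperties {

    static final String PREFIX = "run.";

    // Strips the 'run.' prefix from each matching entry and renders it as a
    // -Dname=value argument. Entries without the prefix are ignored.
    // The result is sorted so that the output order is predictable.
    static List<String> toJvmArgs(Properties buildProps) {
        List<String> jvmArgs = new ArrayList<String>();
        for (String key : buildProps.stringPropertyNames()) {
            if (key.startsWith(PREFIX) && key.length() > PREFIX.length()) {
                String name = key.substring(PREFIX.length());
                jvmArgs.add("-D" + name + "=" + buildProps.getProperty(key));
            }
        }
        Collections.sort(jvmArgs);
        return jvmArgs;
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for the contents of a build.properties file.
        String contents =
              "run.gate.user.config=/home/alice/alternative-gate.xml\n"
            + "run.java.io.tmpdir=d:/bigtmp\n"
            + "some.other.setting=ignored\n";
        Properties buildProps = new Properties();
        buildProps.load(new StringReader(contents));
        for (String arg : toJvmArgs(buildProps)) {
            System.out.println(arg);
        }
    }
}
```

Note that this sketch reads values literally: unlike Ant, java.util.Properties does not expand references such as ${user.home}, so the example uses a fixed path.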

2.4 Configuring GATE

When GATE Developer is started, or when Gate.init() is called from GATE Embedded, GATE loads various sorts of configuration data stored as XML in files generally called something like gate.xml or .gate.xml. This data holds information such as:

- whether to save settings on exit;

- whether to save session on exit;

- what fonts GATE Developer should use;

- plugins to load at start;

- colours of the annotations;

- locations of files for the file chooser;

- and many other GUI-related options.

This type of data is stored at two levels (in order from general to specific):

- the site-wide level, which by default is located in the gate.xml file in the top level directory of the GATE installation (i.e. the GATE home). This location can be overridden by the Java system property gate.site.config;

- the user level, which lives in the user’s HOME directory on UNIX or their profile directory on Windows (note that parts of this file are overwritten when saving user settings). The default location for this file can be overridden by the Java system property gate.user.config.

Where configuration data appears on several different levels, the more specific ones overwrite the more general. This means that you can set defaults for all GATE users on your system, for example, and allow individual users to override those defaults without interfering with others.
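The override behaviour described above can be illustrated with a small Java sketch in which user-level values shadow site-level ones. GATE itself stores this data as XML, so the use of java.util.Properties here is a stand-in chosen for brevity, and the class LayeredConfig and the option names font.size and save.session.on.exit are hypothetical.

```java
import java.util.Properties;

// Illustrative only: models "more specific overrides more general" with
// java.util.Properties. GATE's real configuration files are XML.
class LayeredConfig {

    // Builds a view in which site values act as defaults and any value set
    // at the user level takes precedence. Neither input is modified.
    static Properties layer(Properties site, Properties user) {
        Properties merged = new Properties(site); // site entries become the fallback
        for (String key : user.stringPropertyNames()) {
            merged.setProperty(key, user.getProperty(key));
        }
        return merged;
    }

    public static void main(String[] args) {
        Properties site = new Properties();
        site.setProperty("font.size", "12");              // hypothetical site-wide default
        site.setProperty("save.session.on.exit", "true"); // hypothetical site-wide default

        Properties user = new Properties();
        user.setProperty("font.size", "16");              // this user prefers a larger font

        Properties effective = layer(site, user);
        System.out.println("font.size = " + effective.getProperty("font.size"));
        System.out.println("save.session.on.exit = " + effective.getProperty("save.session.on.exit"));
    }
}
```

Because the site values are only consulted as a fallback, one user's overrides never alter the defaults seen by other users, which is the property the two-level scheme is meant to provide.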

Configuration data can be set from the GATE Developer GUI via the ‘Options’ menu then ‘Configuration’. The user can change the appearance of the GUI in the ‘Appearance’ tab, which includes the options of font and the ‘look and feel’. The ‘Advanced’ tab enables the user to include annotation features when saving the document and preserving its format, to save the selected Options automatically on exit, and to save the session automatically on exit. The ‘Input Methods’ submenu from the ‘Options’ menu enables the user to change the default language for input. These options are all stored in the user’s .gate.xml file.

When using GATE Embedded, you can also set the site config location using Gate.setSiteConfigFile(File) prior to calling Gate.init().

2.5 Building GATE

Note that you don’t need to build GATE unless you’re doing development on the system itself.

Prerequisites:

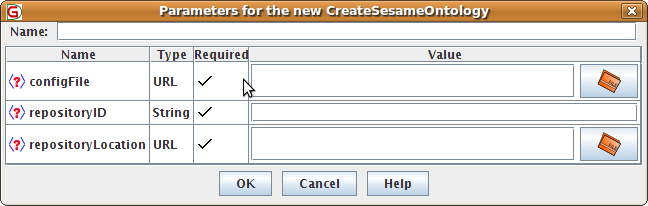

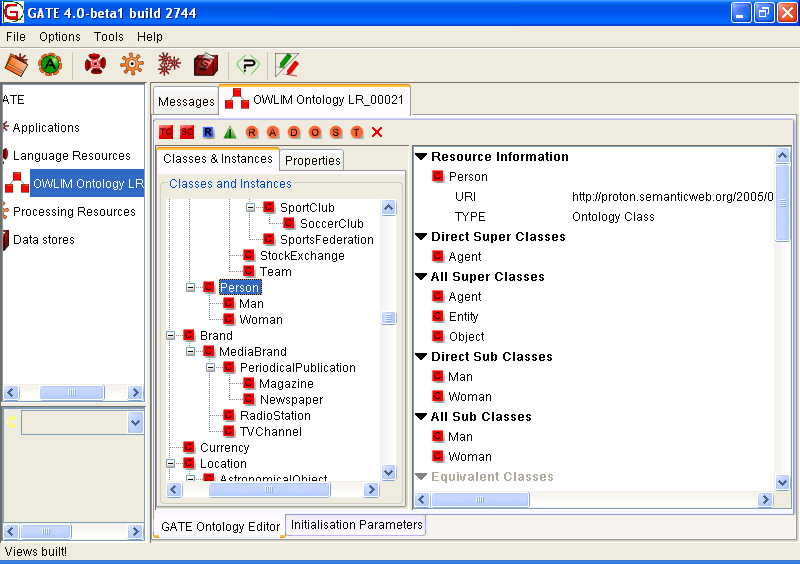

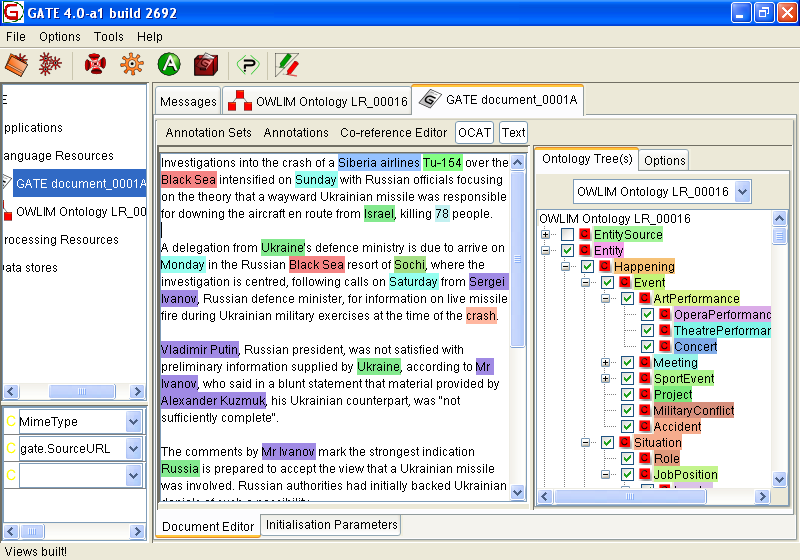

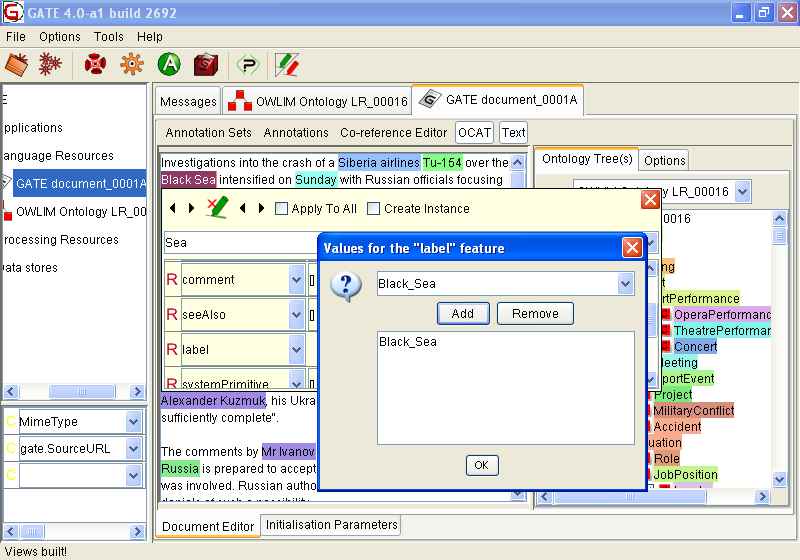

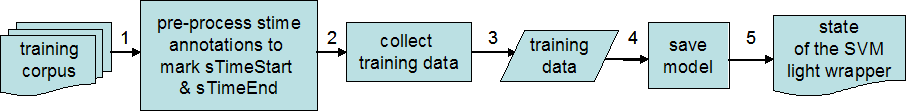

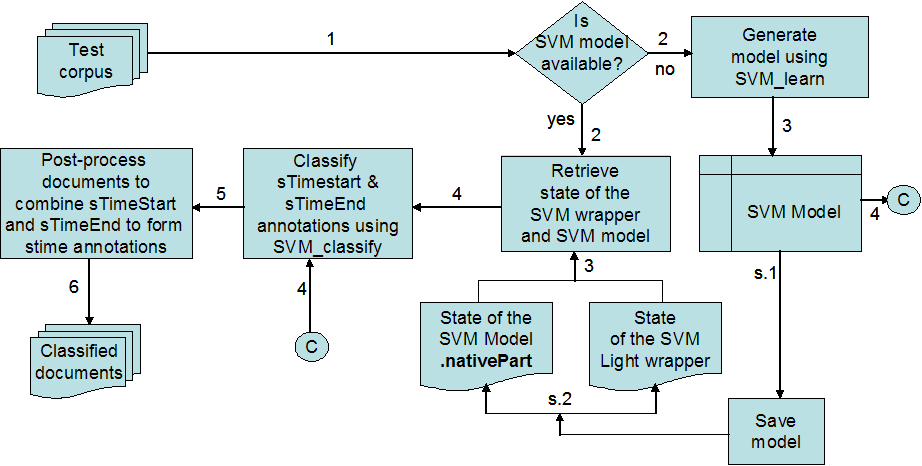

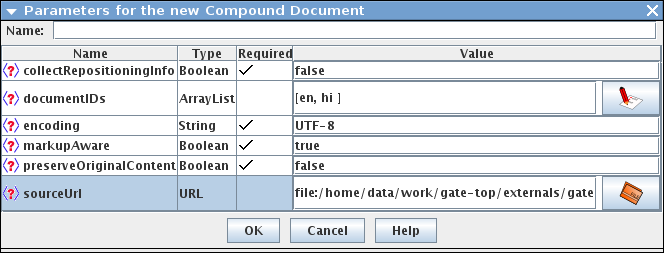

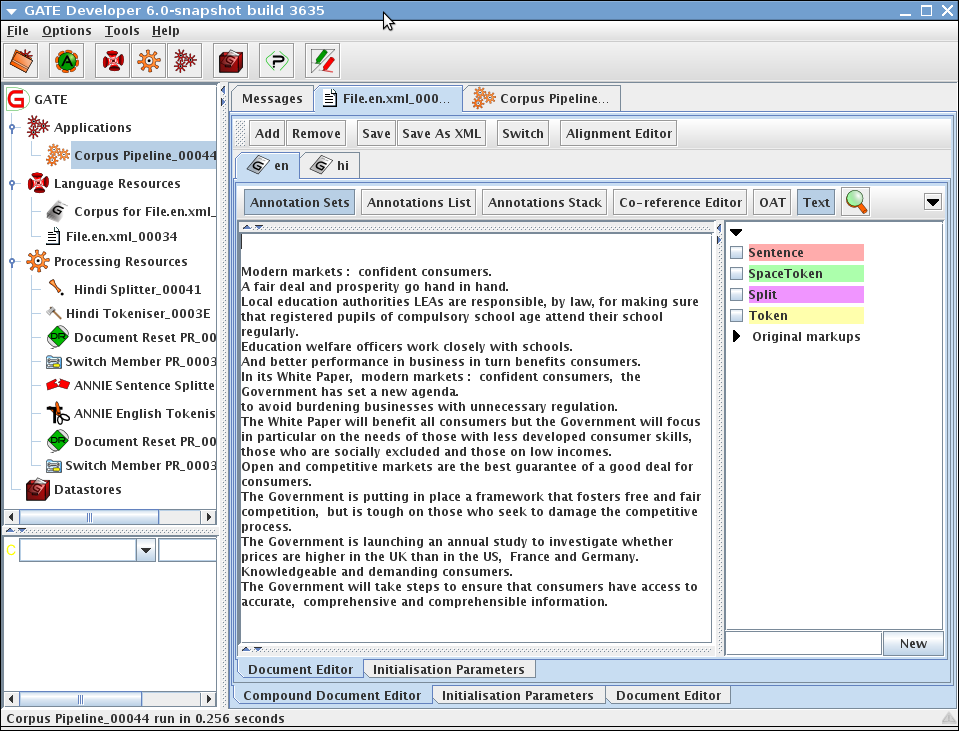

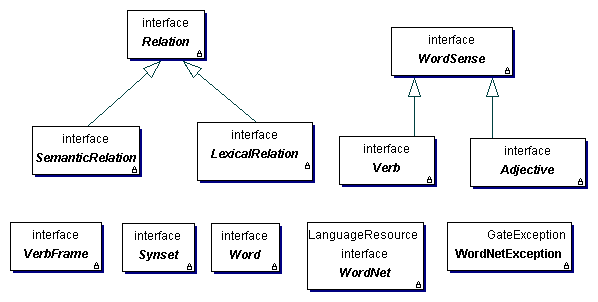

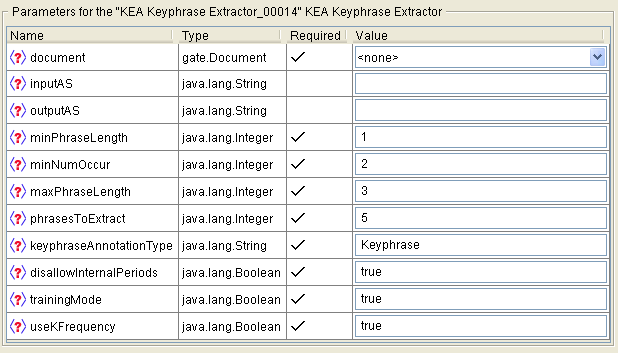

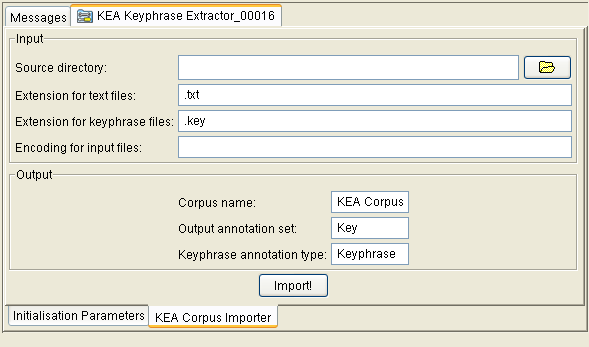

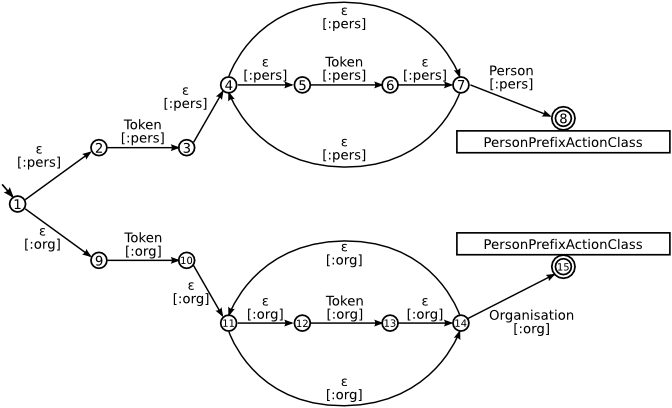

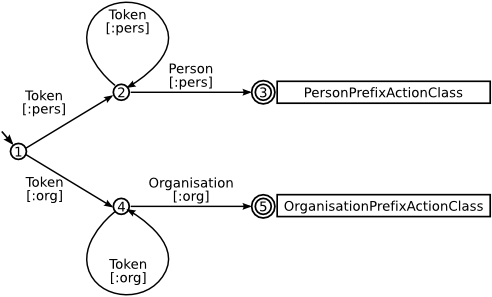

- A conforming Java environment as above.